Fine-Tuning Pretrained Language Models ,Weight Initializations, Data Orders, and Early

当前的预训练模型如BERT都分为两个训练阶段:预训练和微调。但很多人不知道的是,在微调阶段,随机种子不同会导致最终效果呈现巨大差异。本文探究了在微调时参数初始化、训练顺序和early stopping对最终效果的影响,发现:参数初始化和训练顺序在最终结果上方差巨大,而early stopping可以节省训练开销。这启示我们去进一步探索更健壮、稳定的微调方式。

论文地址: https://arxiv.org/pdf/2002.06305.pdf

介绍

当前所有的预训练模型都分为两个训练阶段:预训练和微调。预训练就是在大规模的无标注文本上,用掩码语言模型(MLM)或其他方式无监督训练。微调,就是在特定任务的数据集上训练,比如文本分类。

然而,尽管微调使用的参数量仅占总参数量的极小一部分(约0.0006%),但是,如果对参数初始化使用不同的随机数种子,或者是用不同的数据训练顺序,其会对结果产生非常大的影响——一些随机数直接导致模型无法收敛,一些随机数会取得很好的效果。

比如,下图是在BERT上实验多次取得的结果,远远高于之前BERT的结果,甚至逼近更先进的预训练模型。似乎微调阶段会对最终效 ...

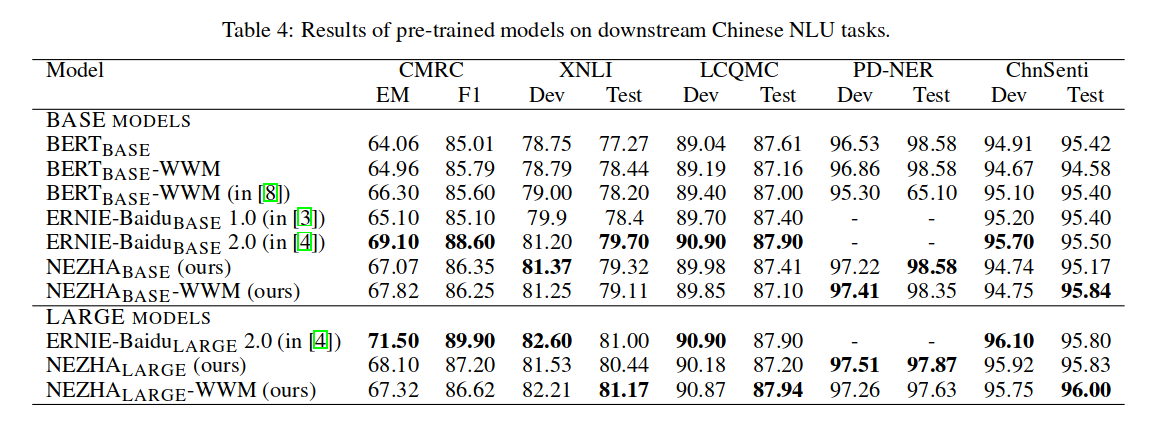

NEZHA-Neural Contextualized Representation for Chinese Language Understanding

预训练模型在捕捉深度语境表征方面的成功是有目共睹的。本文提出一个面向中文NLU任务的模型,名为NEZHA(NEural contextualiZed representation for CHinese lAnguage understanding,面向中文理解的神经语境表征模型,哪吒)。NEZHA相较于BERT有如下改进:

函数式相对位置编码

全词覆盖

混合精度训练

训练过程中使用 LAMB 优化器

前两者是模型改进,后两者是训练优化。

NEZHA在以下数个中文自然处理任务上取得了SOTA的结果:

命名实体识别(人民日报NER数据集)

句子相似匹配(LCQMC,口语化描述的语义相似度匹配)

中文情感分类(ChnSenti)

中文自然语言推断(XNLI)

介绍

预训练模型大部分是基于英文语料(BooksCorpus和English Wikipedia),中文的预训练模型目前现有如下几个:Google的中文BERT(只有base版)、ERNIE-Baidu、BERT-WWM(科大讯飞)。这些模型都是基于Transformer,且在遮蔽语言模型(MLM)和下一句预测(NSP)这两 ...

pip下载python包,离线安装

pip download 和 pip install 有着相同的解析和下载过程,不同的是,pip install 会安装依赖项,而 pip download 会把所有已下载的依赖项保存到指定的目录 ( 默认是当前目录 ),此目录稍后可以作为值传递给 pip install --find-links 以便离线或锁定下载包安装。适合在离线服务器上继续安装python模块,主要步骤如下:

首先在联网的环境中安装相同python环境,可使用conda创建虚拟环境

1conda create -n envir_name python=3.7

在虚拟环境中进行pip download下载所依赖包

1pip download tensorflow==2.11.0 -i https://pypi.tuna.tsinghua.edu.cn/simple/ -d ./tensorflow2/

将下载的包的名字输出到 requirements.txt中

1ls tensorflow2 > requirements.txt

离线安装

1pip install --no-index --f ...

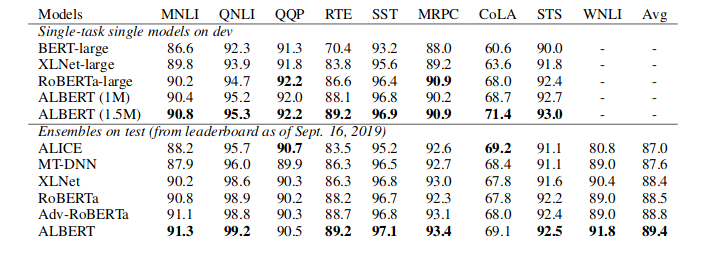

ALBERT-A Lite BERT For Self-Supervised Learning Of Language Representations

对于预训练模型来说,提升模型的大小能够提高下游任务的效果,然而如果进一步提升模型规模,势必会导致显存或者内存出现OOM的问题,长时间的训练也可能导致模型出现退化的情况。这次的新模型不再是简单的的升级,而是采用了全新的权重共享机制,反观其他升级版BERT模型,基本都是添加了更多的预训练任务,增大数据量等轻微的改动。ALBERT模型提出了两种减少内存的方法,同时提升了训练速度,其次改进了BERT中的NSP的预训练任务。

从ResNet论文可知,当模型的层次加深到一定程度后继续加深层深度会带来模型的效果下降,与之类似,如下图所示;

将BERT的hidden size增加,结果效果变差。因此,ALBERT模型三种手段来提升效果,又能降低模型参数量大小。

Embedding因式分解

从模型的角度来说,WordPiece embedding的参数学习的表示是上下文无关的,而隐藏层则学习的表示是上下文相关的。在之前的研究成果中表明,BERT及其类似的模型的好效果更多的来源于上下文信息的捕捉,因而,理论上来说隐藏层的表述包含的信息应该更多一些,隐藏层的大小应比embedding大,若让embed ...

2019达观杯信息提取第九名方案

2019达观杯信息提取第九名方案介绍以及代码地址

代码

代码地址: github

方案

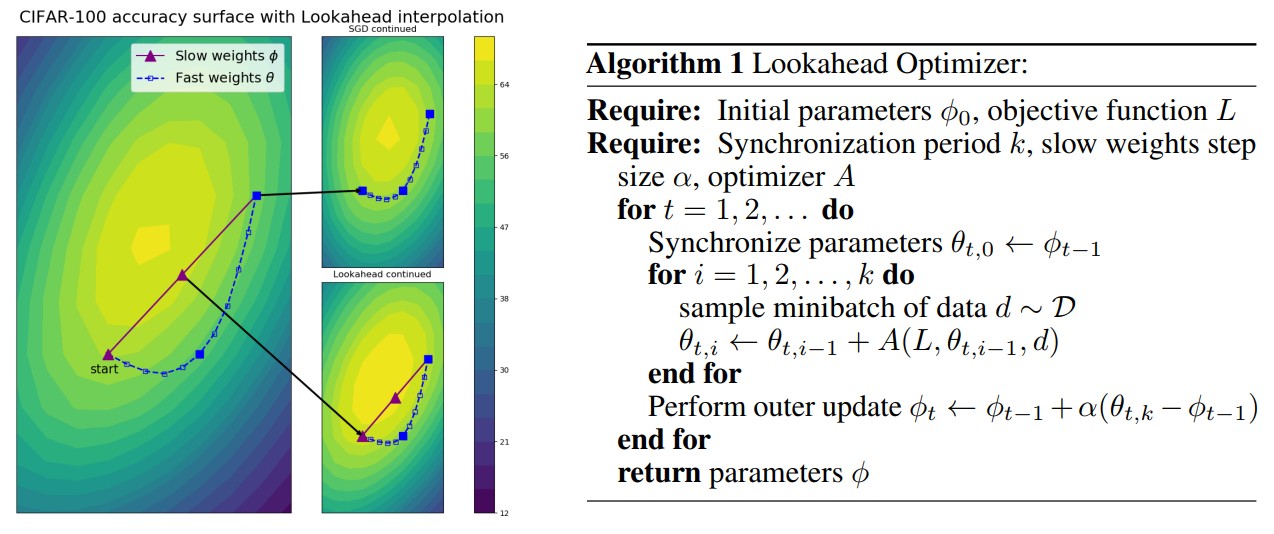

Lookahead Optimizer k steps forward, 1 step back

快来试试 Lookahead 最优化方法啊,调参少、收敛好、速度还快,大牛用了都说好。

最优化方法一直主导着模型的学习过程,没有最优化器模型也就没了灵魂。好的最优化方法一直是 ML 社区在积极探索的,它几乎对任何机器学习任务都会有极大的帮助。

从最开始的批量梯度下降,到后来的随机梯度下降,然后到 Adam 等一大帮基于适应性学习率的方法,最优化器已经走过了很多年。尽管目前 Adam 差不多已经是默认的最优化器了,但从 17 年开始就有各种研究表示 Adam 还是有一些缺陷的,甚至它的收敛效果在某些环境下比 SGD 还差。

为此,我们期待更好的标准优化器已经很多年了…

最近,来自多伦多大学向量学院的研究者发表了一篇论文,提出了一种新的优化算法——Lookahead。值得注意的是,该论文的最后作者 Jimmy Ba 也是原来 Adam 算法的作者,Hinton 老爷子也作为三作参与了该论文,所以作者阵容还是很强大的。

Lookahead 算法与已有的方法完全不同,它迭代地更新两组权重。直观来说,Lookahead 算法通过提前观察另一个优化器生成的「fast weights」序列,来选择 ...

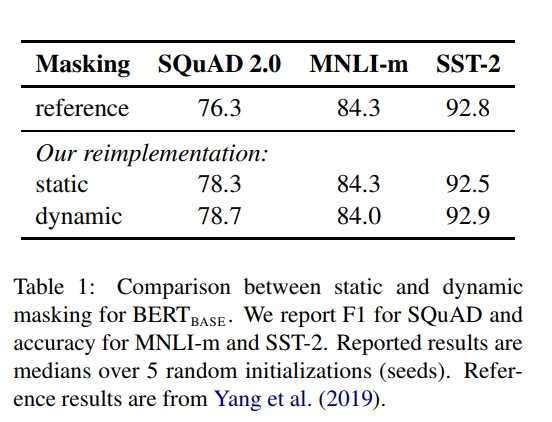

RoBERTa-A Robustly Optimized BERT Pretraining Approach

预训练的语言模型在这俩年取得了巨大的突破,但是如何比较各预训练模型的优劣显然是一个很有挑战的问题,主要是太烧钱了。本文研究了一些预训练参数和训练批大小对BERT模型的影响。发现,BERT远远没有被充分训练,用不同的预训练参数,都会对最后的结果产生不同的影响,并且最好的模型达到了SOTA。

介绍

预训练模型,例如 ELMo、GPT、BERT、XLM、XLNet 已经取得了重要进展,但要确定方法的哪些方面贡献最大是存在挑战的。训练在计算上是昂贵的,很难去做大量的实验,并且预训练过程中使用的数据量也不一致。

本文对 BERT 预训练过程进行了大量重复实验研究,其中包括对超参数调整效果和训练集大小的仔细评估。 发现 BERT 的训练不足,并提出了一种改进的方法来训练BERT模型(称之为RoBERTa),该模型可以匹敌或超过所有后 BERT 方法的性能。模型的修改很简单,它们包括:

训练模型更大,具有更大的训练批次,更多的数据

删除下一句预测的目标

对更长的序列进行训练

动态改变应用于训练数据的masking形式

总的来说,本文贡献如下

提出了一组重要的 BERT 设计选择和训练策略,并 ...

Pointer Networks

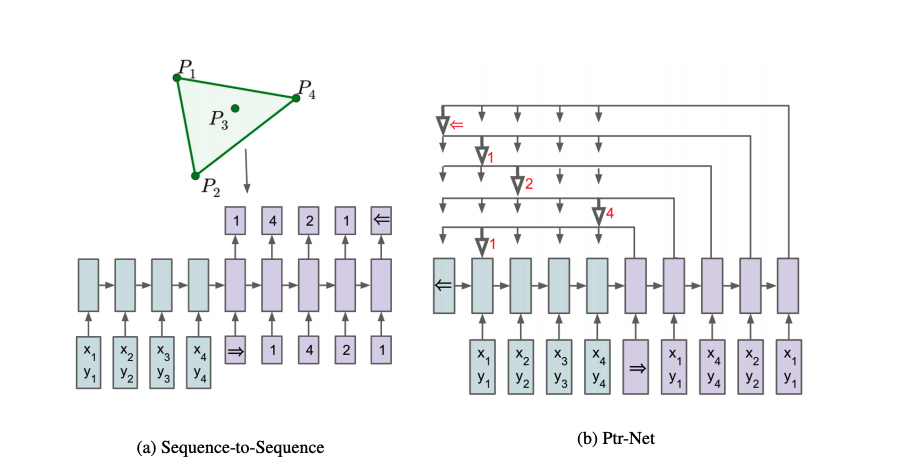

我们知道,Seq2Seq的出现很好地解开了以往的RNN模型要求输入与输出长度相等的约束,而其后的Attention机制(content-based)又很好地解决了长输入序列表示不充分的问题。尽管如此,这些模型仍旧要求输出的词汇表需要事先指定大小,因为在softmax层中,词汇表的长度会直接影响到模型的训练和运行速度。

Pointer Networks(指针网络)同样是Seq2Seq范式,它主要解决的是Seq2Seq Decoder端输出的词汇表大小不可变的问题。换句话说,传统的Seq2Seq无法解决输出序列的词汇表会随着输入序列长度的改变而改变的那些问题,某些问题的输出可能会严重依赖于输入,在本文中,作者通过计算几何学中三个经典的组合优化问题:凸包(Finding convex hulls)、三角剖分(comupting Delaunay triangulations)、TSP(Travelling Salesman Problem),来演示了作者提出的PtrNets模型。

论文地址: https://arxiv.org/pdf/1506.03134.pdf

上图,我们求解凸包问题 ...

MASS-Masked Sequence to Sequence Pre-training for Language Generation

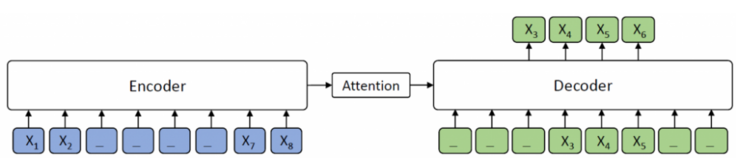

BERT通常只训练一个encoder用于自然语言理解,而GPT的语言模型通常是训练一个decoder。如果要将BERT或者GPT用于序列到序列的自然语言生成任务,通常只有分开预训练encoder和decoder,因此encoder-attention-decoder结构没有被联合训练,attention机制也不会被预训练,而decoder对encoder的attention机制在这类任务中非常重要,因此BERT和GPT在这类任务中只能达到次优效果。

如上图 所示,encoder将源序列 XXX 作为输入并将其转换为隐藏表示的序列,然后decoder通过attention机制从encoder中抽象出隐藏表示的序列信息,并自动生成目标序列文本 YYY。

本文针对文本生成任务, 在BERT的基础上,提出了Masked Sequence to Sequence pre-training(MASS),MASS采用encoder-decoder框架以预测一个句子片段,其中encoder端输入的句子被随机masked连续片段,而decoder端需要生成该masked的片段,通过这种方式MASS能够 ...

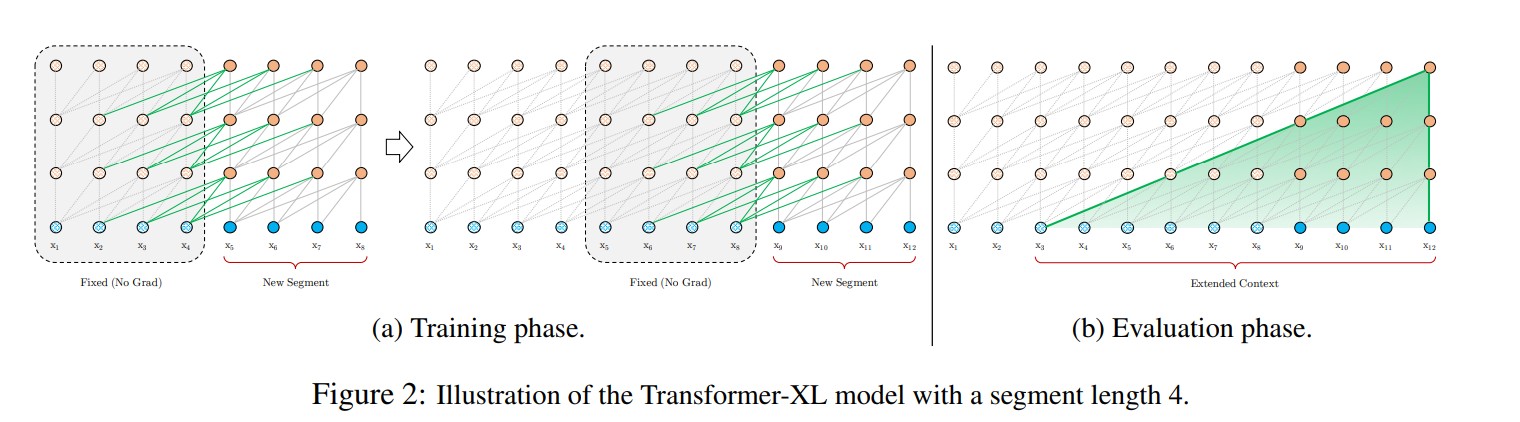

Transformer-XL Attentive Language Models Beyond a Fixed-Length Context

目前在自然语言处理领域,Transformer的编码能力超越了RNN,但是对长距离依赖的建模能力仍然不足。在基于LSTM的模型中,为了建模长距离依赖,提出了门控机制和梯度裁剪,目前可以编码的最长距离在200左右。在基于Transformer的模型中,允许词之间直接self-attention,能够更好地捕获长期依赖关系,但是还是有限制,本文将主要介绍Transformer-XL,并基于PyTorch框架从头实现Transformer-XL。

原始Transformer

细想一下,BERT在应用Transformer时,有一个参数sequence length,也就是BERT在训练和预测时,每次接受的输入是固定长度的。那么,怎么输入语料进行训练时最理想的呢?当然是将一个完整的段落一次性输入,进行特征提取了。但是现实是残酷的,这么大的Transformer,内存是消耗不起的。所以现有的做法是,对段落按照segment进行分隔。在训练时:

当输入segment序列比sequence length短时,就做padding。

当输入segment序列比sequence length长时就做切 ...