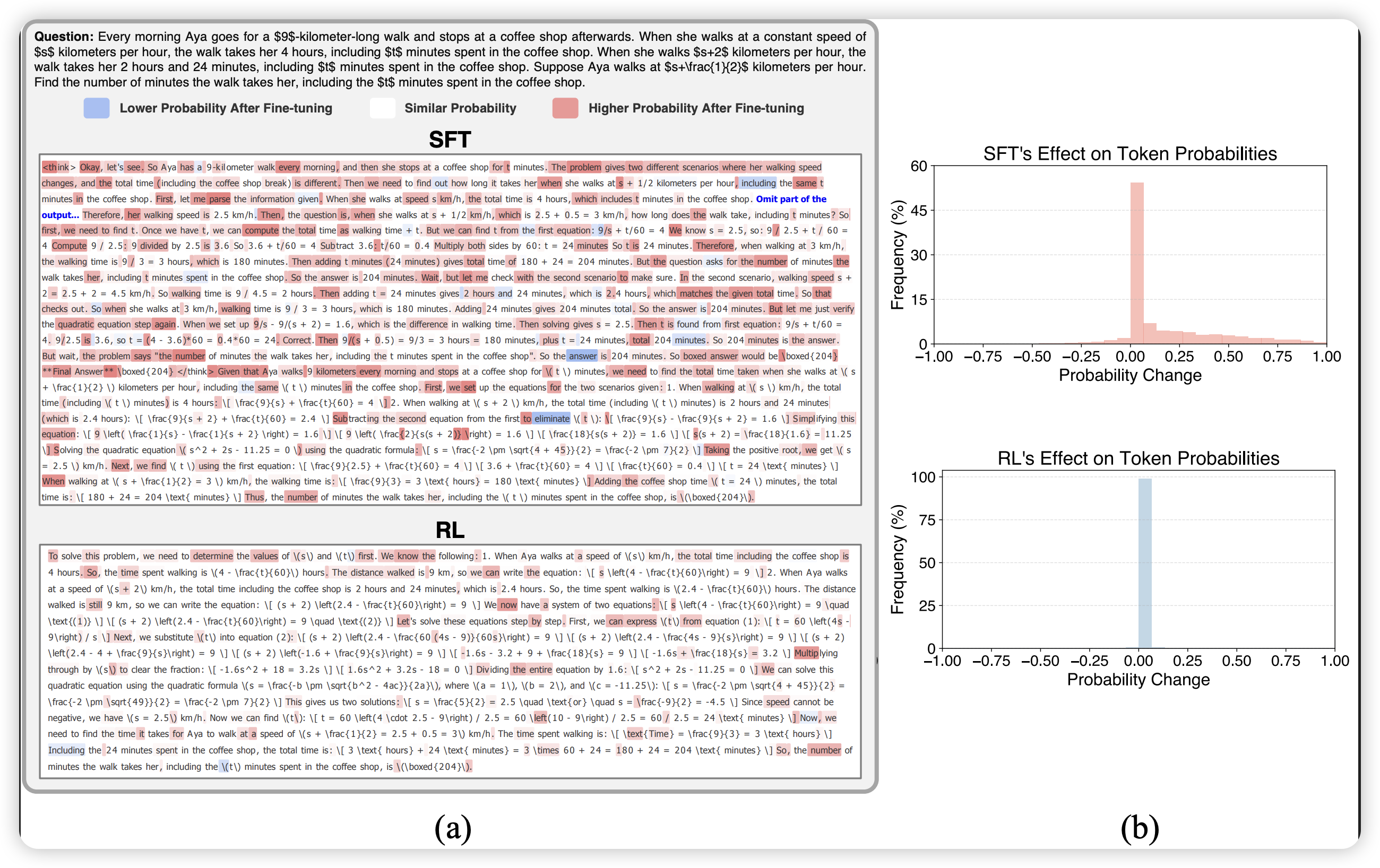

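The paper "Multi-Sample Dropout for Accelerated Training and Better Generalization" proposes a new way of using dropout. According to the paper, Multi-Sample Dropout speeds up training and produces better results. In this post we try it out with PyTorch.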

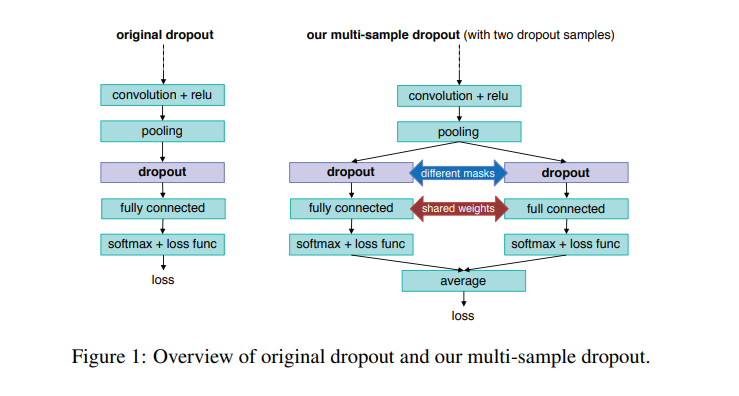

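Dropout is a simple but effective regularization technique that helps deep neural networks (DNNs) generalize better, so it is widely used in DNN-based tasks. During training, dropout randomly discards a subset of neurons to avoid overfitting. Multi-Sample Dropout can be viewed as an enhanced version of the original dropout: it discards a random subset of neurons several times and averages the results to obtain the final output. The accompanying figure shows the overall structure.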
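To make the "drop several times, then average" idea concrete, here is a minimal framework-free sketch. It is a toy illustration, not the paper's implementation: the helper names (`dropout`, `linear`, `multi_sample_dropout`), the single linear unit, and all numeric values are assumptions chosen for the example.

```python
import random


def dropout(features, p, rng):
    """Inverted dropout for 0 <= p < 1: zero each value with probability p, rescale the rest."""
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in features]


def linear(features, weights, bias):
    """A single shared linear unit standing in for the classifier layer."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def multi_sample_dropout(features, weights, bias, p=0.5, num_samples=8, seed=0):
    """Apply num_samples independent dropout masks and average the outputs."""
    rng = random.Random(seed)
    outputs = [linear(dropout(features, p, rng), weights, bias) for _ in range(num_samples)]
    return sum(outputs) / num_samples


# Toy inputs (illustrative values only)
features = [1.0, 2.0, 3.0, 4.0]
weights = [0.5, -0.25, 0.1, 0.2]
bias = 0.1

print(multi_sample_dropout(features, weights, bias, num_samples=1))  # ordinary dropout
print(multi_sample_dropout(features, weights, bias, num_samples=8))  # multi-sample dropout
```

Every sample goes through the same weights; only the dropout mask differs between samples.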

Experiment

In this section we run experiments with Multi-Sample Dropout. The dataset is CIFAR10, the model is ResNet, and the optimizer is Adam. The files are:

```
├── nn.py               # model definition
├── progressbar.py      # progress bar
├── run.py              # main script
├── tools.py            # common utilities
└── trainingmonitor.py  # training metrics visualization
```

First, we download the CIFAR10 dataset and preprocess it. PyTorch (through torchvision) already wraps the data download and loading:

```python
from torchvision import datasets, transforms

data = {
    # Training split (train=True is the torchvision default)
    'train': datasets.CIFAR10(
        root='./data', download=True,
        transform=transforms.Compose([
            transforms.ToTensor(),
            # Normalize each RGB channel with the given mean and std
            transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))]
        )
    ),
    # Held-out split, used here for validation
    'valid': datasets.CIFAR10(
        root='./data', train=False, download=True,
        transform=transforms.Compose([
            transforms.ToTensor(),
            transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))]
        )
    )
}
```

Next, we define the model architecture. The body of the ResNet stays unchanged; we only add a Multi-Sample Dropout module:

```python
import torch.nn as nn
import torch.nn.functional as F


class ResNet(nn.Module):
    def __init__(self, ResidualBlock, num_classes, dropout_num, dropout_p):
        super(ResNet, self).__init__()
        ...  # remaining layers omitted in the original post
        # One dropout layer per sample; an empty list disables dropout
        self.dropouts = nn.ModuleList([nn.Dropout(dropout_p) for _ in range(dropout_num)])

    def forward(self, x, y=None, loss_fn=None):
        ...  # backbone forward pass omitted in the original post
        feature = F.avg_pool2d(out, 4)
        if len(self.dropouts) == 0:
            # Baseline: no dropout, a single pass through the classifier
            out = feature.view(feature.size(0), -1)
            out = self.fc(out)
            if loss_fn is not None:
                loss = loss_fn(out, y)
                return out, loss
            return out, None
        else:
            for i, dropout in enumerate(self.dropouts):
                if i == 0:
                    out = dropout(feature)
                    out = out.view(out.size(0), -1)
                    out = self.fc(out)
                    if loss_fn is not None:
                        loss = loss_fn(out, y)
                else:
                    temp_out = dropout(feature)
                    temp_out = temp_out.view(temp_out.size(0), -1)
                    temp_out = self.fc(temp_out)
                    out = out + temp_out
                    if loss_fn is not None:
                        # Compute the loss on the logits, not on the pooled features
                        loss = loss + loss_fn(temp_out, y)
            # Average the logits (and the loss) over all dropout samples
            out = out / len(self.dropouts)
            if loss_fn is not None:
                return out, loss / len(self.dropouts)
            return out, None
```
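The same logic can also be isolated into a small standalone module, which makes it easy to try without the rest of the ResNet. The sketch below is only an illustration: the class name `MultiSampleDropoutHead`, the feature size of 512, and the default values are assumptions, not part of the original code.

```python
import torch
import torch.nn as nn


class MultiSampleDropoutHead(nn.Module):
    """Hypothetical helper: one shared classifier fed by several dropout samples."""

    def __init__(self, in_features, num_classes, dropout_num=8, dropout_p=0.5):
        super().__init__()
        self.dropouts = nn.ModuleList([nn.Dropout(dropout_p) for _ in range(dropout_num)])
        self.fc = nn.Linear(in_features, num_classes)

    def forward(self, feature, y=None, loss_fn=None):
        feature = feature.view(feature.size(0), -1)
        if len(self.dropouts) == 0:
            # No dropout layers: behave like a plain linear classifier
            logits = [self.fc(feature)]
        else:
            # Each dropout layer draws its own mask; the classifier weights are shared
            logits = [self.fc(dropout(feature)) for dropout in self.dropouts]
        out = torch.stack(logits).mean(dim=0)
        loss = None
        if loss_fn is not None:
            # Average the per-sample losses
            loss = torch.stack([loss_fn(sample, y) for sample in logits]).mean()
        return out, loss


# Random tensors stand in for pooled ResNet features and CIFAR10 labels (assumed shapes)
head = MultiSampleDropoutHead(in_features=512, num_classes=10)
feature = torch.randn(4, 512, 1, 1)
y = torch.randint(0, 10, (4,))
out, loss = head(feature, y, nn.CrossEntropyLoss())
print(out.shape, loss.item())
```

In evaluation mode (`head.eval()`) `nn.Dropout` is a no-op, so all samples are identical and the averaged output equals a single forward pass.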

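Note: this implementation differs slightly from the one in the paper; ours is comparatively simpler.

Run the following commands, one after another: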

```shell
python run.py --dropout_num=0
python run.py --dropout_num=1
python run.py --dropout_num=8
```
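The contents of `run.py` are not shown in this post. As a rough sketch, a script could read the `--dropout_num` option like this; the `--dropout_p` flag, the defaults, and the `build_parser` helper are assumptions made for illustration.

```python
import argparse


def build_parser():
    """Hypothetical argument parser; only --dropout_num is taken from the post."""
    parser = argparse.ArgumentParser(description="Multi-Sample Dropout on CIFAR10")
    parser.add_argument("--dropout_num", type=int, default=1,
                        help="number of dropout samples; 0 disables dropout")
    # Assumed flag: the post does not show how the dropout probability is set
    parser.add_argument("--dropout_p", type=float, default=0.5,
                        help="dropout probability")
    return parser


# Parse an explicit argument list so the example runs without a command line
args = build_parser().parse_args(["--dropout_num=8"])
print(args.dropout_num, args.dropout_p)
```

The parsed values would then be passed to the `ResNet` constructor as `dropout_num` and `dropout_p`.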

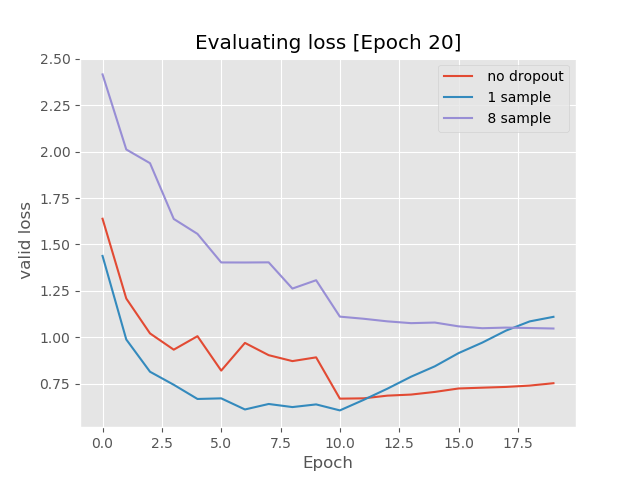

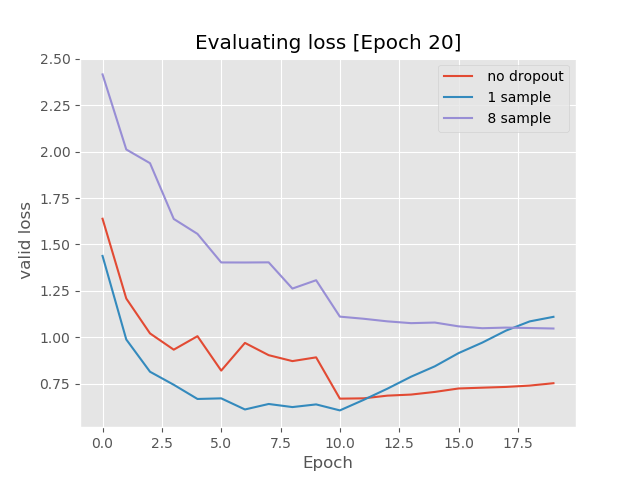

Experimental results:

Training loss

Validation accuracy

Validation loss

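Judging from these results, our simplified version does bring some improvement on CIFAR10. For details, see the original paper or the corresponding code.

Paper: https://arxiv.org/abs/1905.09788

Experiment code: github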