LoRA: Low-Rank Adaptation of Large Language Models

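LoRA is a technique introduced by Microsoft researchers to address the cost of fine-tuning large models. Highly capable models with billions of parameters or more (for example, GPT-3) are very expensive to fine-tune for downstream tasks. LoRA proposes freezing the pretrained model weights and injecting trainable layers (rank-decomposition matrices) into each Transformer block. Because gradients no longer need to be computed for most of the model weights, the number of trainable parameters drops sharply and GPU memory requirements fall. The researchers found that, by focusing on the Transformer attention blocks of a large model, fine-tuning with LoRA reaches quality on par with full-model fine-tuning while being faster and requiring less compute.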

Paper: https://arxiv.org/pdf/2106.09685.pdf

Code: https://github.com/microsoft/LoRA

Introduction

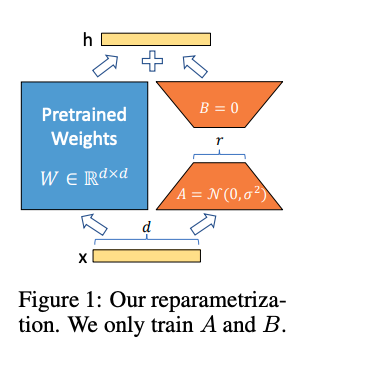

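The main idea of LoRA is to freeze the pretrained model weights and inject trainable rank-decomposition matrices into each layer of the Transformer architecture, which greatly reduces the number of trainable parameters for downstream tasks. Specifically, it expresses the update to an original weight matrix as the product of two matrices whose rank is much lower than that of the original matrix. Only these low-rank matrices are trained, which reduces the number of trainable parameters and improves training ...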
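The low-rank update described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the code from the microsoft/LoRA repository: the names `matmul`, `lora_forward`, `w0`, `a`, `b`, and the sizes `d = 8`, `r = 2`, `alpha = 4.0` are all assumptions chosen for the example. It follows the formulation in the LoRA paper, where the frozen weight W0 is complemented by a trainable product B·A scaled by alpha / r, with B initialized to zero so that training starts from the pretrained behaviour.

```python
import random

def matmul(p, q):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*q)] for row in p]

def lora_forward(w0, a, b, x, alpha):
    """Compute h = W0 x + (alpha / r) * B (A x) for a column vector x."""
    r = len(a)                            # rank of the update = number of rows of A
    base = matmul(w0, x)                  # frozen pretrained path
    update = matmul(b, matmul(a, x))      # low-rank path; B @ A is never materialized
    scale = alpha / r
    return [[h[0] + scale * u[0]] for h, u in zip(base, update)]

random.seed(0)
d, r, alpha = 8, 2, 4.0                   # illustrative sizes (assumptions)

w0 = [[random.gauss(0, 1) for _ in range(d)] for _ in range(d)]     # frozen, d x d
a = [[random.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]   # trainable, r x d
b = [[0.0] * r for _ in range(d)]                                   # trainable, d x r, zero init
x = [[random.gauss(0, 1)] for _ in range(d)]                        # input column vector

h_init = lora_forward(w0, a, b, x, alpha)
base = matmul(w0, x)

full_params = d * d                       # parameters touched by full fine-tuning
lora_params = r * d + d * r               # parameters trained by LoRA
print(full_params, lora_params)
```

With B at zero the adapted layer reproduces the frozen layer exactly, and only `a` and `b` would receive gradients during training. The saving grows with the layer size, since the trainable count scales with `2 * d * r` rather than `d * d`.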

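Reinforcement Learning from Human Feedback (RLHF) Explained

ChatGPT, the dialogue model released by OpenAI, has set off a new wave of AI enthusiasm. It answers all kinds of questions fluently and seems to have broken down the boundary between machine and human. Behind this work is a new training paradigm in large language model (LLM) generation: RLHF (Reinforcement Learning from Human Feedback), which optimizes a language model with reinforcement learning based on human feedback.

Over the past few years, the ability of various LLMs to generate diverse text from human input prompts has been impressive. However, evaluating the generated results is subjective and context-dependent. For example, we may want the model to generate a creative story, a piece of truthful informative text, or an executable code snippet, and such results are hard to measure with existing rule-based text generation metrics (such as BLEU and ROUGE). Beyond evaluation metrics, existing models are usually trained to predict the next token with a simple loss function (such as cross-entropy), without explicitly incorporating human preferences and subjective opinions.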

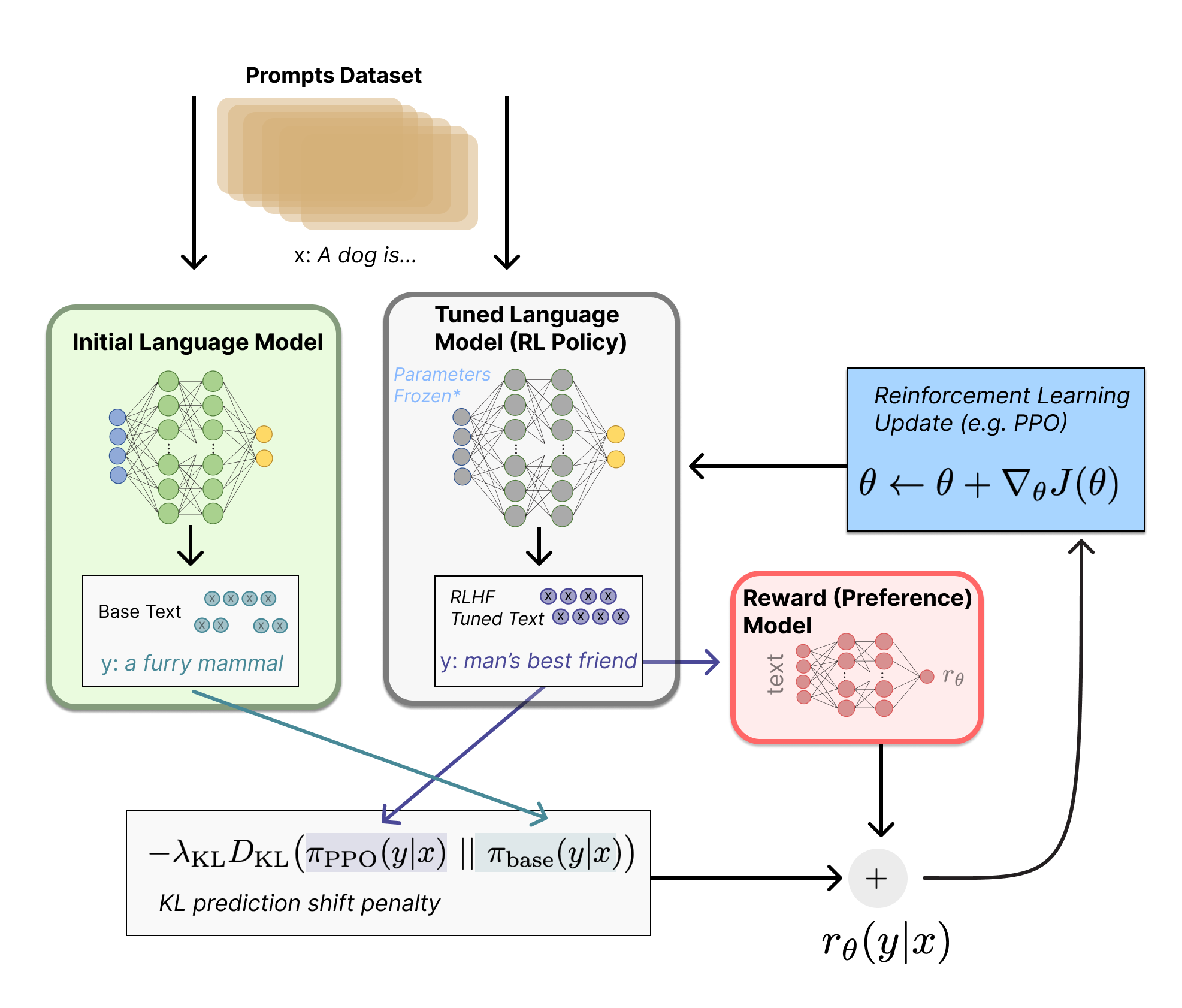

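Wouldn't it be better if we used human feedback on the generated text as the performance measure, or went a step further and used that feedback as a loss to optimize the model? That is the idea behind RLHF: use reinforcement learning to directly optimize a language model with human feedback. RL ...

A Survey of Multimodal Pre-trained Models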

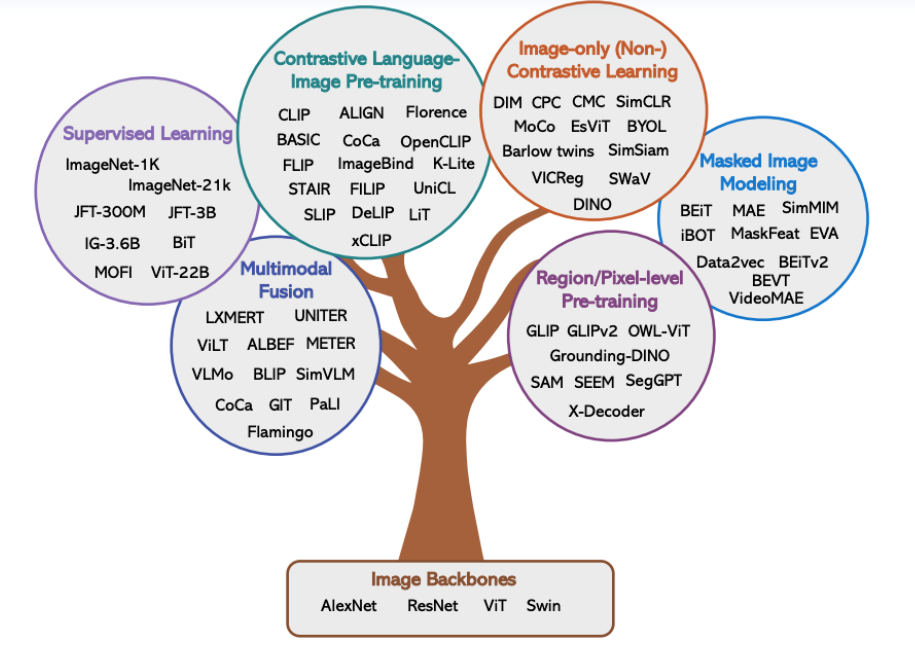

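Pre-trained models have been hugely successful in NLP and CV. Borrowing the pipeline of pre-trained model -> downstream-task fine-tuning -> prompt training -> human-instruction alignment, the academic community uses multimodal datasets to train a large multimodal pre-trained model (cross-modal representation) to solve various downstream problems in the multimodal domain.

Large multimodal pre-trained models mainly involve the following four aspects:

1. Representing the raw multimodal inputs (images and text): encode images and text into latent representations that preserve their semantics

2. How multimodal data interacts and fuses: design a good architecture to model the interactions between the modalities

3. How the large multimodal pre-trained model learns to extract useful knowledge: design effective training tasks that let the model extract information

4. How the large multimodal pre-trained model adapts to downstream tasks: fine-tune the pre-trained model for each downstream task

This article mainly draws on: "A Survey of Vision-Language Pre-trained Models"

Preliminary task: preparing the text-image corpus pairs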

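Pre-training datasets: the first step in pre-training a large multimodal model is to build large-scale image-text pairs. We define the pre-training dataset as $D = \{(W, V)\}_{i=1}^{N}$, where $W$ and $V$ denote text and images respectively, and $N$ is the number of image-text pairs ...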

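The Road to AGI: Essentials of Large Language Model (LLM) Technology

ChatGPT's arrival both delighted and jolted many people. Delighted, because no one expected large language models (LLMs) to work this well; jolted, because of the sudden realization that our understanding of LLMs and our ideas about how to develop them are quite far from the world's most advanced thinking. I am among those who were both delighted and jolted, and I am also a typical Chinese person: Chinese people are good at self-reflection, so I began to reflect, and this article is the result.

To be honest, in LLM-related technology, China's gap to the state of the art has, at this moment, widened further. I think technical leadership and technical gaps should be viewed dynamically, as things develop. In the one to two years after Bert appeared, China was actually catching up quickly in this area and proposed some good improved models. The watershed where the gap opened up was probably the release of GPT 3.0, around mid-2020. At the time, very few people realized that GPT 3.0 was not just a specific piece of technology; it embodied a philosophy of where LLMs should be heading. Since then the gap has grown wider and wider, and ChatGPT is simply a natural consequence of this difference in philosophy. So, in my personal view, setting aside whether one has the financial resources to build very large LLMs, and looking purely at the technology, the gap mainly comes from differences in how LLMs are understood and in the philosophy of where they should go next.

China is being left further and further behind by overseas technology, ...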

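A 10,000-Word Breakdown: Tracing the Origins of ChatGPT's Abilities

Recently, OpenAI's pre-trained model ChatGPT has deeply impressed and inspired researchers in artificial intelligence. There is no doubt that it is powerful and smart, fun to talk to, and able to write code. Its abilities in many respects far exceed what NLP researchers expected. So a natural question arises: how did ChatGPT become so capable? Where do its various powerful abilities come from? In this article we try to dissect ChatGPT's emergent abilities, trace where they come from, and give a comprehensive technical roadmap explaining how the GPT-3.5 model series and related large language models evolved step by step into their current powerful form.

Emergent ability: an ability that small models lack and that appears only once a model is large enough

We hope this article promotes transparency around large language models and serves as a roadmap for the open-source community's joint effort to reproduce GPT-3.5.

To our compatriots in China:

In the eyes of the international academic community, ChatGPT / GPT-3.5 is an epoch-making product. The difference between it and earlier common language models (Bert / Bart / T5) is almost the difference between a missile and a bow and arrow, and it deserves the highest level of attention.

In my exchanges with international peers, mainstream academic institutions abroad ...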

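Detailed Installation Guide for the Zwift Offline (Standalone) Version

This is the detailed installation procedure for the standalone version of Zwift. If the written instructions are hard to follow, search Bilibili for a video walkthrough.

Windows

First download the required software, listed below (click to download):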

python-3.11.4-amd64

zoffline-helper-master

zwift-offline-master

ZwiftSetup

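Detailed installation steps:

1. Install ZwiftSetup. ★★★★★ Wait for the update progress to finish before exiting Zwift ★★★★★, including its system-tray icon. (Skip this step if Zwift is already installed and updated to the latest version.)

2. Install the Python installer downloaded above (python-3.11.4-amd64) and check "Add python.exe to PATH".

3. Extract zwift-offline-master.

Install the dependencies: press Win+R, type cmd, and press Ctrl+Shift+Enter to open a Command Prompt window (this shortcut launches it as administrator).

cd into the zwift-offline-master folder, then enter the following command:

pip install -r requirements.txt (then press Enter)

If the installation fails the first time and shows red error text, run the command again.

4. Go into zwi ...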

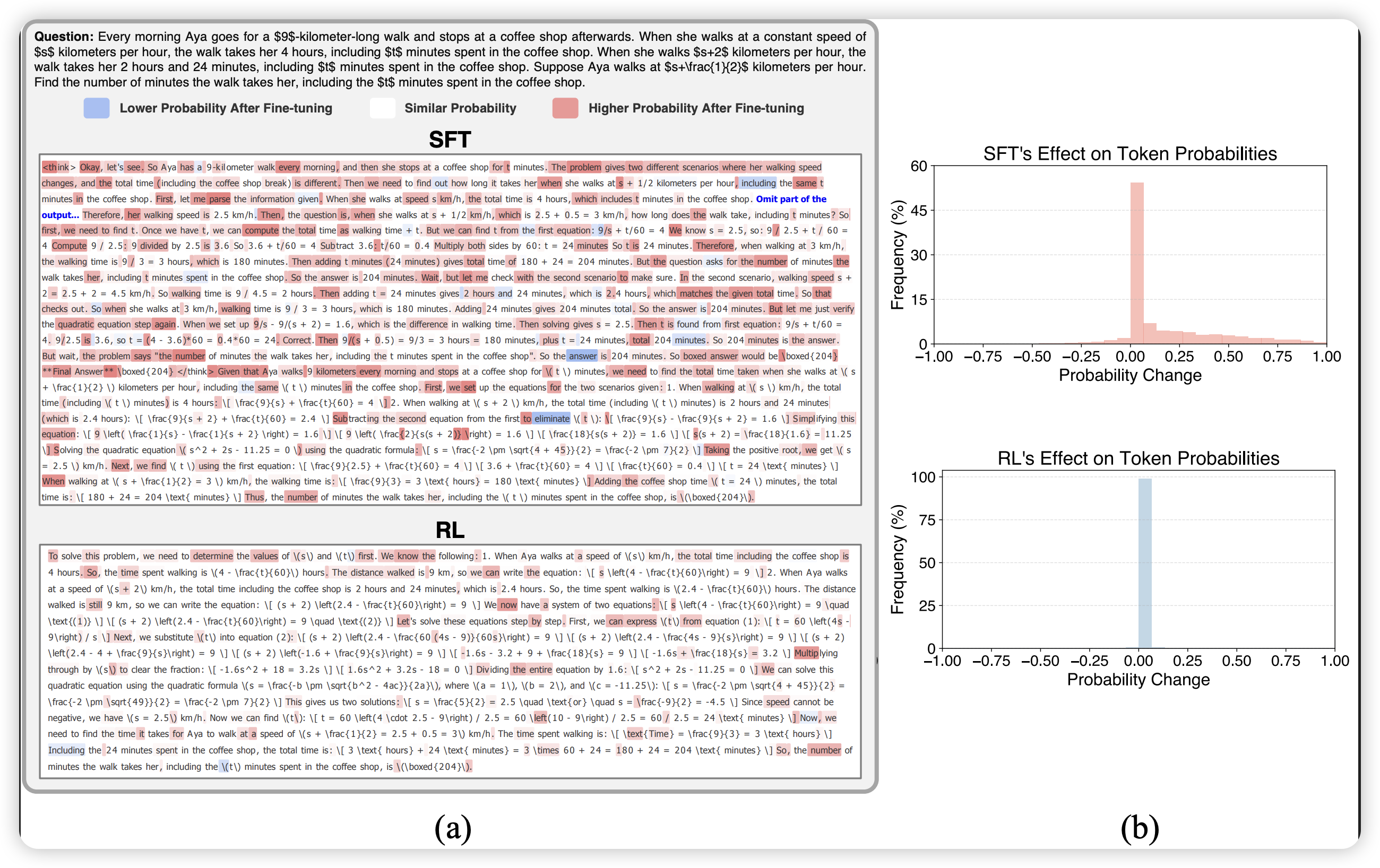

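A Closer Look at the Emergent Abilities of Language Models

Recently there has been great interest in, and a lot of work on, the powerful abilities shown by large language models (for example, chain of thought and scratchpad). We refer to these collectively as the emergent abilities of large models: abilities that may exist only in large models and not in smaller ones, hence "emergent". Many of them are very impressive, such as complex reasoning, knowledge reasoning, and out-of-distribution robustness, which we discuss in detail later. Notably, these abilities are close to what the NLP community has been seeking for decades, and so they represent a potential shift in research paradigm, from fine-tuning small models to in-context learning with large models. To early adopters, the paradigm shift may seem obvious. For the sake of scientific rigor, however, we do need very clear reasons why people should move to large language models, even though these models are expensive, hard to use, and may deliver only mediocre results. In this article we look closely at what these abilities are, what large language models can offer, and what their potential advantages are across broader NLP / ML tasks.

Prerequisites: we assume the reader is familiar with the following:

Pre-training, fine-tuning, prompting (NLP / deep learning knowledge a typical practitioner should have)

Chain-of-thought prompting, scratchpad (may be less familiar to a typical practitioner, but not needed to follow the article)

Emergent abilities that exist in large models but not in small ones

In the figure above, we can observe how the models perform:

When the size ...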

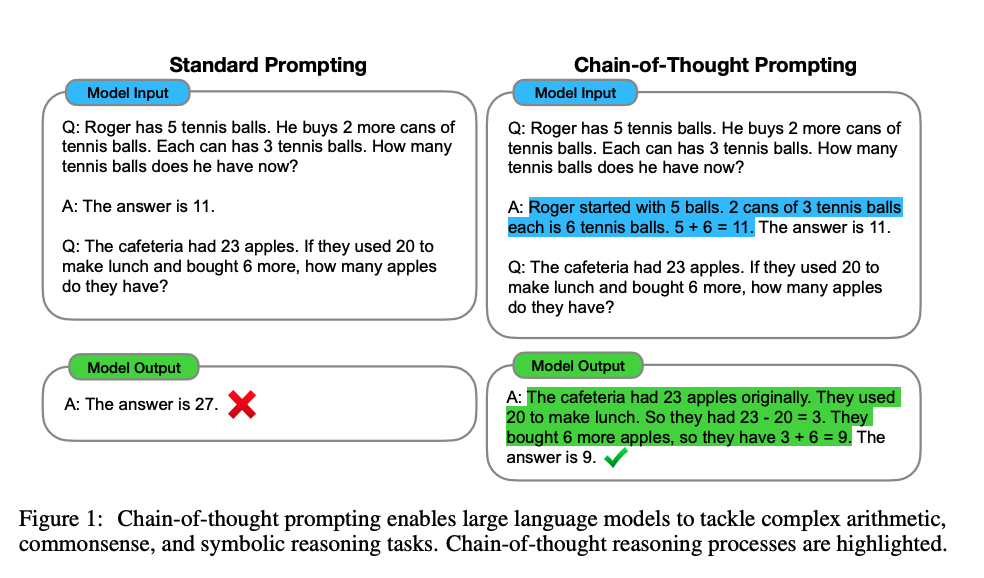

Chain of Thought Prompting Elicits Reasoning in Large Language Models

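This paper explores the ability of large language models (LLMs) to produce a coherent chain of thought: a series of short sentences that mimic the reasoning process a person might go through when answering a question. By prompting with a chain of thought (CoT, i.e. the intermediate reasoning steps), the reasoning ability of LLMs is improved; experiments on three LLMs show that CoT significantly improves arithmetic, commonsense, and symbolic reasoning.

Paper: https://arxiv.org/pdf/2201.11903.pdf

Method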
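To make the difference between standard few-shot prompting and chain-of-thought prompting concrete, here is a minimal sketch of how the two prompts could be assembled. The helper `build_prompt` and the `Q:` / `A:` layout are assumptions for illustration, not an API from the paper, and the exemplar wording follows the widely quoted tennis-ball example and should be treated as illustrative. No model is called; the sketch only builds the text that would be sent to one.

```python
def build_prompt(question, exemplars, with_chain_of_thought=True):
    """Assemble a few-shot prompt; each exemplar is (question, rationale, answer)."""
    parts = []
    for q, rationale, answer in exemplars:
        if with_chain_of_thought:
            # CoT exemplar: show the intermediate reasoning before the answer.
            parts.append(f"Q: {q}\nA: {rationale} The answer is {answer}.")
        else:
            # Standard exemplar: show only the final answer.
            parts.append(f"Q: {q}\nA: The answer is {answer}.")
    # The new question is left open for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


exemplars = [
    (
        "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
        "Each can has 3 tennis balls. How many tennis balls does he have now?",
        "Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
        "5 + 6 = 11.",
        "11",
    ),
]
question = (
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, "
    "how many apples do they have?"
)

cot_prompt = build_prompt(question, exemplars, with_chain_of_thought=True)
standard_prompt = build_prompt(question, exemplars, with_chain_of_thought=False)
print(cot_prompt)
```

The only difference between the two prompts is whether the exemplar answers spell out their reasoning; the model weights and the new question stay the same.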

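Once a language model reaches around 100B parameters, it can achieve very good results on classification tasks such as sentiment classification and topic classification. The authors group such tasks as system-1: tasks that humans can understand quickly and intuitively. Another class of tasks requires slow, careful deliberation; the authors group these as system-2 (for example, reasoning tasks involving logic or common sense). The authors found that even with several hundred billion parameters, language models struggle to perform well on system-2 tasks.

The authors call this phenomenon flat scaling curves: if the parameter count of the language model is plotted on the horizontal axis and performance on system-2 tasks on the vertical axis, the curve becomes quite flat, and does not, as on system-1 tasks, so easily ...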

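Git Cheat Sheet

This cheat sheet was written against git 2.24. <param> marks a required parameter and [param] an optional one. It is a good idea to read Pro Git before using it; use Ctrl+F to search.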

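Configuration

git config --list [--system|--global|--local] Show the current configuration

git config [--system|--global|--local] <key> <value> Set a parameter

git config -e [--system|--global|--local] Edit the configuration

git config --global alias.<alias-name> "<git-command>" Create an alias

git <alias-name> Use an alias

git config --global --unset <key> Remove a single variable

git config --global --unset-all <key> Remove all values of a variable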

Configuration example

    # Set your identity
    git config --global user.name "cheatsheet"
    git config --global user.email "cheatsheet@cheatsheet.wang"
    # Preferred editor
    git config - ...

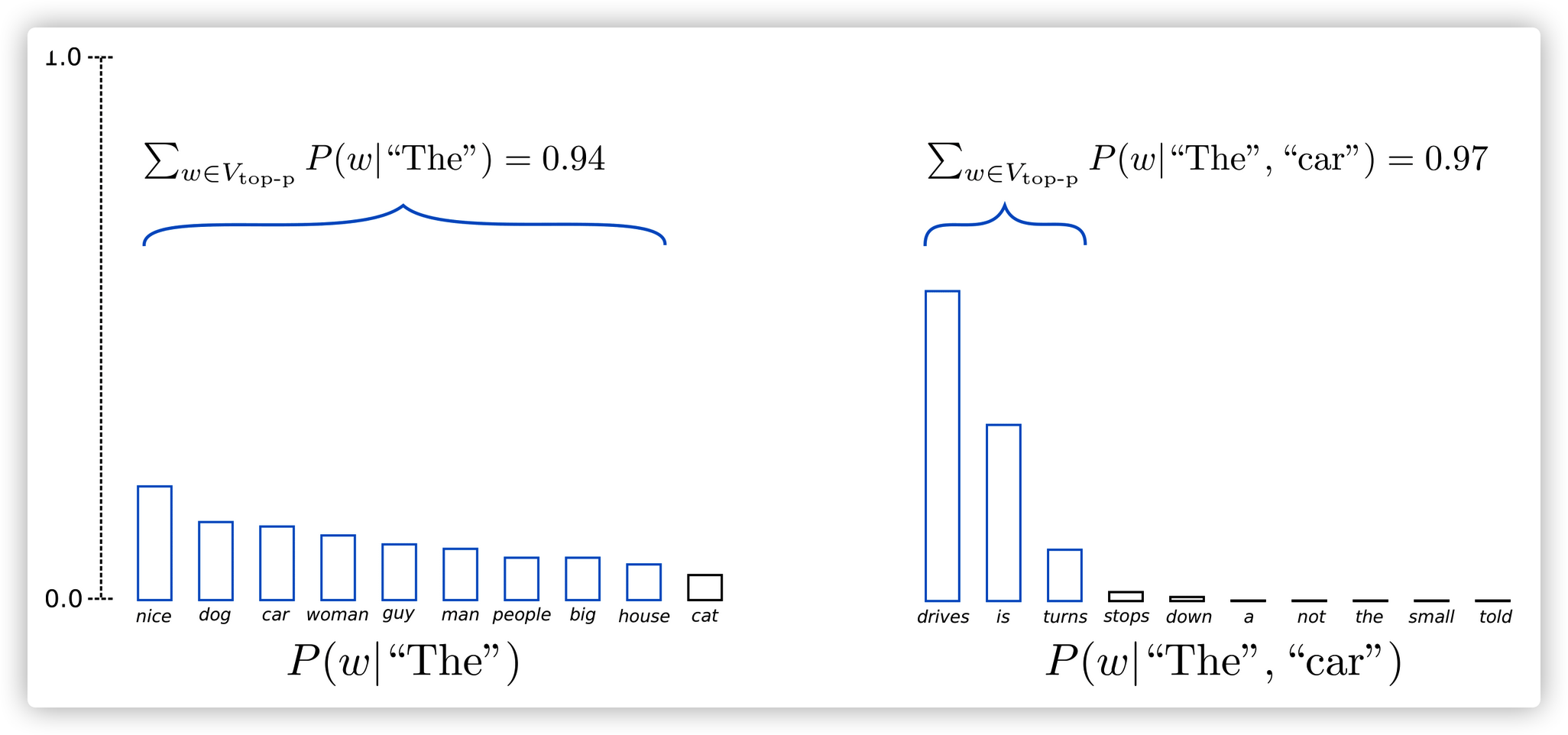

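Transformers: An Introduction to Decoding Methods for Text Generation

In recent years, large Transformer-based models, typified by OpenAI's GPT3, have been trained on millions of web pages, and expectations for open-domain language generation have risen accordingly. Results in open-ended conditional language generation are also improving rapidly, for example with GPT2, XLNet, and CTRL. Besides the Transformer architecture and massive unsupervised pre-training data, better decoding methods have also played an important role.

This blog post briefly reviews several decoding strategies and helps you implement them with the transformers library.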

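All of these methods apply to auto-regressive language generation. In short, auto-regressive language generation assumes that the probability of a word sequence can be decomposed into a product of conditional next-word distributions, each conditioned on the words generated so far: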

$$
P(w_{1:T} \mid W_0) = \prod_{t=1}^{T} P(w_t \mid w_{1:t-1}, W_0), \quad \text{with } w_{1:0} = \emptyset
$$

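Here $W_0$ denotes the initial word sequence given before generation starts, and the length $T$ of the generated word sequence is determined by the generation probabilities, in P(w ...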
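The factorization above can be illustrated with a toy example. The sketch below is not the transformers library API: the table `BIGRAM`, its probabilities, and the helpers `sequence_prob` and `greedy_decode` are all hypothetical. As a simplifying assumption, each conditional depends only on the previous word, whereas the formula conditions on the full prefix $w_{1:t-1}$ and $W_0$; the start token `<s>` plays the role of $W_0$.

```python
# Hypothetical toy model: P(next word | previous word). Each row sums to 1.
BIGRAM = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"dog": 0.5, "cat": 0.3, "</s>": 0.2},
    "a": {"dog": 0.4, "cat": 0.6},
    "dog": {"</s>": 1.0},
    "cat": {"</s>": 1.0},
}

def sequence_prob(words, start="<s>"):
    """Multiply the conditional next-word probabilities along the sequence."""
    prob, prev = 1.0, start
    for w in words:
        prob *= BIGRAM[prev].get(w, 0.0)   # unseen transitions get probability 0
        prev = w
    return prob

def greedy_decode(start="<s>", max_len=10):
    """Pick the most probable next word at every step until </s> or max_len."""
    words, prev = [], start
    for _ in range(max_len):
        nxt = max(BIGRAM[prev], key=BIGRAM[prev].get)
        if nxt == "</s>":
            break
        words.append(nxt)
        prev = nxt
    return words

greedy = greedy_decode()
p_greedy = sequence_prob(greedy + ["</s>"])
p_other = sequence_prob(["a", "cat", "</s>"])
print(greedy, p_greedy, p_other)
```

In this toy table the greedy sequence has probability 0.6 × 0.5 × 1.0 = 0.3, which is higher than the 0.4 × 0.6 × 1.0 = 0.24 of the alternative shown. In general, though, picking the locally best word at each step does not guarantee the most probable overall sequence.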