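This post lists the latest papers fetched from arXiv.org on 2026-01-06. It is updated automatically and organized into five broad areas: NLP, CV, ML, AI, and IR. To receive the list by email on a schedule, leave your email address in the comments.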

Note: Paper data is fetched from arXiv.org and updated automatically every day at around 12:00.

Tip: If you would like to receive the daily paper list by email, leave your email address in the comments.

Overview (2026-01-06)

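820 papers were updated today, including:

- Natural Language Processing: 109 papers (Computation and Language (cs.CL))

- Artificial Intelligence: 258 papers (Artificial Intelligence (cs.AI))

- Computer Vision: 206 papers (Computer Vision and Pattern Recognition (cs.CV))

- Machine Learning: 247 papers (Machine Learning (cs.LG))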

Natural Language Processing

[NLP-0] Robust Persona-Aware Toxicity Detection with Prompt Optimization and Learned Ensembling

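[Quick Read]: This paper addresses unstable model performance in toxicity detection, a subjective task in which perspectives differ across demographic groups: existing Large Language Model (LLM) prompting techniques give markedly different results across personas and base models. The core solution is a lightweight meta-ensemble: the predictions of four prompting variants form a 4-bit vector, and a support vector machine (SVM) trained over that vector combines them. By exploiting the complementary errors of different prompting strategies across model-persona pairs, the ensemble performs more robustly and consistently across diverse personas than any single prompting method or traditional majority voting, offering an effective approach to pluralistic evaluation in subjective NLP tasks.

Link: https://arxiv.org/abs/2601.02337

Authors: Berk Atil,Rebecca J. Passonneau,Ninareh Mehrabi

Affiliations: Pennsylvania State University; Resolution

Subjects: Computation and Language (cs.CL)

Comments: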

Abstract:Toxicity detection is inherently subjective, shaped by the diverse perspectives and social priors of different demographic groups. While “pluralistic” modeling as used in economics and the social sciences aims to capture perspective differences across contexts, current Large Language Model (LLM) prompting techniques have different results across different personas and base models. In this work, we conduct a systematic evaluation of persona-aware toxicity detection, showing that no single prompting method, including our proposed automated prompt optimization strategy, uniformly dominates across all model-persona pairs. To exploit complementary errors, we explore ensembling four prompting variants and propose a lightweight meta-ensemble: an SVM over the 4-bit vector of prompt predictions. Our results demonstrate that the proposed SVM ensemble consistently outperforms individual prompting methods and traditional majority-voting techniques, achieving the strongest overall performance across diverse personas. This work provides one of the first systematic comparisons of persona-conditioned prompting for toxicity detection and offers a robust method for pluralistic evaluation in subjective NLP tasks.
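
Why can a learned combiner over a 4-bit vector beat majority voting? A 4-bit input has only 16 possible patterns, so the combiner can learn which patterns point to which label. The sketch below is a minimal illustration under that framing. It deliberately swaps the paper's SVM for a simpler learned lookup table: each pattern maps to its most frequent gold label, and unseen patterns fall back to majority voting. The data and function names are hypothetical.

```python
from collections import Counter, defaultdict

def majority_vote(bits):
    # Ties (2 of 4 votes) are broken toward "toxic" (1) in this sketch.
    return int(sum(bits) >= 2)

def fit_pattern_table(X, y):
    """Learn the most frequent gold label for each 4-bit prediction pattern."""
    counts = defaultdict(Counter)
    for bits, label in zip(X, y):
        counts[tuple(bits)][label] += 1
    return {pattern: c.most_common(1)[0][0] for pattern, c in counts.items()}

def predict_pattern_table(table, bits):
    # Patterns never seen in training fall back to majority voting.
    return table.get(tuple(bits), majority_vote(bits))

# Hypothetical data: each row holds the 0/1 predictions of four prompting
# variants; variant 0 happens to be reliable, the others are noisy.
X = [(1, 0, 0, 0), (1, 0, 0, 0), (0, 1, 1, 0), (0, 1, 1, 0), (1, 1, 1, 1), (0, 0, 0, 0)]
y = [1, 1, 0, 0, 1, 0]

table = fit_pattern_table(X, y)
print([predict_pattern_table(table, x) for x in X])  # learned combiner
print([majority_vote(x) for x in X])                  # plain majority vote
```

An SVM (or any linear model) over the same bits plays the same role. It has the advantage of generalizing to patterns that are rare in the training data.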

[NLP-1] Estimating Text Temperature

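[Quick Read]: This paper addresses how to estimate the temperature parameter of arbitrary text, including human-written text, with respect to a given language model, and thereby quantify how random the text is. The key is a maximum likelihood estimation procedure that infers the temperature from text that has already been generated or written. This makes it possible to compare temperatures across text sources, such as human writing versus model output. The author evaluates the estimation capability of a wide selection of small-to-medium LLMs and then uses the best performer, Qwen3 14B, to estimate the temperatures of popular corpora.

Link: https://arxiv.org/abs/2601.02320

Authors: Nikolay Mikhaylovskiy

Affiliations: NTR Labs; Higher IT School of Tomsk State University

Subjects: Computation and Language (cs.CL)

Comments: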

Abstract:Autoregressive language models typically use a temperature parameter at inference to shape the probability distribution and control the randomness of the text generated. After the text was generated, this parameter can be estimated using a maximum likelihood approach. Building on this, we propose a procedure to estimate the temperature of any text, including ones written by humans, with respect to a given language model. We evaluate the temperature estimation capability of a wide selection of small-to-medium LLMs. We then use the best-performing Qwen3 14B to estimate temperatures of popular corpora.
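
The maximum likelihood idea can be sketched as follows. This is an illustration, not the author's procedure, and it assumes the model's per-position logits for the text are already available. In practice they would come from a forward pass of a model such as Qwen3 14B; here random numbers stand in for them. The log-likelihood of the observed tokens is concave in the inverse temperature β = 1/T, so a simple ternary search over β finds the maximum.

```python
import math
import random

def log_likelihood(beta, logits_seq, tokens):
    """Sum of log softmax(beta * logits)[token] over all positions (beta = 1/T)."""
    total = 0.0
    for logits, tok in zip(logits_seq, tokens):
        scaled = [beta * z for z in logits]
        m = max(scaled)
        log_norm = m + math.log(sum(math.exp(s - m) for s in scaled))
        total += scaled[tok] - log_norm
    return total

def estimate_temperature(logits_seq, tokens, beta_lo=0.01, beta_hi=20.0, iters=60):
    """MLE of the temperature via ternary search over beta (concave objective)."""
    lo, hi = beta_lo, beta_hi
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if log_likelihood(m1, logits_seq, tokens) < log_likelihood(m2, logits_seq, tokens):
            lo = m1
        else:
            hi = m2
    return 1.0 / ((lo + hi) / 2)

def sample_at_temperature(logits, temperature, rng):
    weights = [math.exp(z / temperature) for z in logits]
    return rng.choices(range(len(logits)), weights=weights)[0]

# Synthetic check: random "model" logits, tokens sampled at T = 0.7.
rng = random.Random(0)
logits_seq = [[rng.gauss(0, 2) for _ in range(5)] for _ in range(2000)]
tokens = [sample_at_temperature(z, 0.7, rng) for z in logits_seq]
print(round(estimate_temperature(logits_seq, tokens), 2))
```

For human-written text, the same estimator simply scores the human tokens under the model's logits. A high estimate then means the text is "more random" than the model's own distribution.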

[NLP-2] Classifying several dialectal Nawatl varieties

[Quick Read]: This paper addresses the difficulty of classifying the dialectal varieties of Nawatl. The language has about 30 recognized varieties, inconsistent spellings in its written forms, and few computational resources. The key is to apply Machine Learning and Neural Networks to identify and classify Nawatl varieties automatically, offering a practical technical path for language processing and digital preservation.

Link: https://arxiv.org/abs/2601.02303

Authors: Juan-José Guzmán-Landa,Juan-Manuel Torres-Moreno,Miguel Figueroa-Saavedra,Carlos-Emiliano González-Gallardo,Graham Ranger,Martha Lorena-Avendaño-Garrido

Affiliations: LIA/Avignon Université; LIFAT/Université de Tours; Universidad Veracruzana; ICTT/Avignon Université

Subjects: Computation and Language (cs.CL)

Comments: 9 pages, 5 figures, 4 tables

Abstract:Mexico is a country with a large number of indigenous languages, among which the most widely spoken is Nawatl, with more than two million people currently speaking it (mainly in North and Central America). Despite its rich cultural heritage, which dates back to the 15th century, Nawatl is a language with few computer resources. The problem is compounded when it comes to its dialectal varieties, with approximately 30 varieties recognised, not counting the different spellings in the written forms of the language. In this research work, we addressed the problem of classifying Nawatl varieties using Machine Learning and Neural Networks.

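[NLP-3] Power-of-Two Quantization-Aware-Training (PoT-QAT) in Large Language Models (LLMs)

[Quick Read]: This paper addresses the difficulty of deploying Large Language Models (LLMs) on edge devices, whose limited memory and compute cannot keep up with rapidly growing parameter counts. The core solution is a special quantization that restricts weights to powers of two (Power-of-Two, PoT). Only the exponents need to be stored, which greatly reduces memory, and costly multiplications can be replaced with cheap bit shifts, which speeds up inference. To recover the performance lost under this strict quantization, the author applies Quantization Aware Training (QAT). On GPT-2 124M, additional training improves the perplexity of the PoT-quantized model by 66%, leaving a BERT-Score loss of only 1% relative to the full-precision GPT-2 baseline. Memory savings are estimated at 87.5%, and inference is expected to be 3-10x faster than with full precision.

Link: https://arxiv.org/abs/2601.02298

Authors: Mahmoud Elgenedy

Affiliations: Stanford University

Subjects: Computation and Language (cs.CL); Signal Processing (eess.SP)

Comments: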

Abstract:In Large Language Models (LLMs), the number of parameters has grown exponentially in the past few years, e.g., from 1.5 billion parameters in GPT-2 to 175 billion in GPT-3 to possibly more than a trillion in later versions. This raises a significant challenge for implementation, especially for Edge devices. Unlike cloud computing, memory and processing power for Edge devices are very limited, which necessitates developing novel ideas to make such applications feasible. In this work, we investigate compressing weights with a special quantization that limits numbers to only power-of-two (PoT). This helps save a huge amount of memory as only exponents need to be stored; more importantly, it significantly reduces processing power by replacing costly multiplication with low cost bit shifting. To overcome performance loss due to this strict quantization, we investigate Quantization Aware Training (QAT) to enhance performance through additional training. Results on GPT-2 124M show a major enhancement for quantized PoT model after additional training, with a perplexity enhancement of 66% and BERT-Score loss to baseline GPT-2 of 1%. The memory saving is estimated to be 87.5% while the inference speed is expected to be 3-10x faster with PoT quantization versus full-precision.
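
The two mechanics described above, storing only an exponent and multiplying by shifting, can be sketched as follows. This is a minimal sketch under stated assumptions, not the paper's scheme. It assumes the exponent is rounded in the log2 domain and clipped to a hypothetical range of -7..0. That range gives 8 exponents, i.e. 1 sign bit plus 3 exponent bits = 4 bits per weight; a real format must also reserve a code for zero. One way to arrive at the quoted 87.5% saving is 4-bit codes replacing 32-bit floats, since 1 - 4/32 = 0.875. The paper's actual bit width is not stated here.

```python
import math

def pot_quantize(w, exp_min=-7, exp_max=0):
    """Round a weight to sign * 2**e: e is rounded in log2 space and clipped
    to [exp_min, exp_max]. Returns (sign, exponent); sign 0 encodes zero."""
    if w == 0:
        return 0, 0
    e = round(math.log2(abs(w)))
    e = max(exp_min, min(exp_max, e))
    return (1 if w > 0 else -1), e

def dequantize(sign, e):
    return sign * 2.0 ** e

def shift_multiply(x, sign, e):
    """Multiply an integer activation x by sign * 2**e using only shifts.
    Right shifts floor toward minus infinity, like integer hardware shifts."""
    shifted = x << e if e >= 0 else x >> -e
    return sign * shifted

print(pot_quantize(0.3))                       # (1, -2): nearest power of two is 0.25
print(dequantize(*pot_quantize(0.3)))          # 0.25
print(shift_multiply(12, *pot_quantize(0.3)))  # 12 * 0.25 computed as 12 >> 2
print(1 - 4 / 32)                              # memory saving of 4-bit vs 32-bit storage
```

QAT is commonly implemented by keeping full-precision "shadow" weights, applying a quantizer like this in the forward pass, and passing gradients through it with a straight-through estimator. Whether the paper does exactly this is not stated in the abstract.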

[NLP-4] pdfQA: Diverse Challenging and Realistic Question Answering over PDFs

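[Quick Read]: This paper addresses a gap in existing question answering (QA) datasets: they mostly start from text sources or cover only specific domains, which makes it hard to evaluate end-to-end document QA systems comprehensively. The key is pdfQA, a multi-domain PDF QA dataset with 2K human-annotated (real-pdfQA) and 2K synthetic (syn-pdfQA) QA pairs. The pairs are differentiated along ten complexity dimensions (e.g., file type, source modality, source position, answer type), and quality and difficulty filters keep them valid and challenging. The dataset provides a standardized basis for evaluating complete QA pipelines as well as local optimizations in modules such as information retrieval and document parsing.

Link: https://arxiv.org/abs/2601.02285

Authors: Tobias Schimanski,Imene Kolli,Jingwei Ni,Yu Fan,Ario Saeid Vaghefi,Elliott Ash,Markus Leippold

Affiliations: University of Zurich; ETH Zurich; Swiss Finance Institute (SFI)

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: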

Abstract:PDFs are the second-most used document type on the internet (after HTML). Yet, existing QA datasets commonly start from text sources or only address specific domains. In this paper, we present pdfQA, a multi-domain 2K human-annotated (real-pdfQA) and 2K synthetic dataset (syn-pdfQA) differentiating QA pairs in ten complexity dimensions (e.g., file type, source modality, source position, answer type). We apply and evaluate quality and difficulty filters on both datasets, obtaining valid and challenging QA pairs. We answer the questions with open-source LLMs, revealing existing challenges that correlate with our complexity dimensions. pdfQA presents a basis for end-to-end QA pipeline evaluation, testing diverse skill sets and local optimizations (e.g., in information retrieval or parsing).

[NLP-5] CD4LM: Consistency Distillation and aDaptive Decoding for Diffusion Language Models

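[Quick Read]: This paper addresses the inference inefficiency of Diffusion Language Models (DLMs) caused by a mismatch between static training and dynamic generation. Training optimizes local transitions under fixed schedules, whereas efficient inference needs adaptive “long-jump” refinements. The proposed CD4LM framework rests on two mechanisms:

- Discrete-Space Consistency Distillation (DSCD) trains a student to be trajectory-invariant, so that it maps diverse noisy states directly to the clean distribution.
- Confidence-Adaptive Decoding (CAD) allocates compute dynamically according to token confidence and skips steps aggressively without degrading generation quality.

The method substantially improves the parallel decoding efficiency of DLMs. It matches the LLaDA baseline on GSM8K with a 5.18x wall-clock speedup, and achieves a 3.62x mean speedup across code and math benchmarks while improving average accuracy.

Link: https://arxiv.org/abs/2601.02236

Authors: Yihao Liang,Ze Wang,Hao Chen,Ximeng Sun,Jialian Wu,Xiaodong Yu,Jiang Liu,Emad Barsoum,Zicheng Liu,Niraj K. Jha

Affiliations: Princeton University; Advanced Micro Devices, Inc

Subjects: Computation and Language (cs.CL)

Comments: 33 pages, 7 figures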

Abstract:Autoregressive large language models achieve strong results on many benchmarks, but decoding remains fundamentally latency-limited by sequential dependence on previously generated tokens. Diffusion language models (DLMs) promise parallel generation but suffer from a fundamental static-to-dynamic misalignment: Training optimizes local transitions under fixed schedules, whereas efficient inference requires adaptive “long-jump” refinements through unseen states. Our goal is to enable highly parallel decoding for DLMs with a low number of function evaluations while preserving generation quality. To achieve this, we propose CD4LM, a framework that decouples training from inference via Discrete-Space Consistency Distillation (DSCD) and Confidence-Adaptive Decoding (CAD). Unlike standard objectives, DSCD trains a student to be trajectory-invariant, mapping diverse noisy states directly to the clean distribution. This intrinsic robustness enables CAD to dynamically allocate compute resources based on token confidence, aggressively skipping steps without the quality collapse typical of heuristic acceleration. On GSM8K, CD4LM matches the LLaDA baseline with a 5.18x wall-clock speedup; across code and math benchmarks, it strictly dominates the accuracy-efficiency Pareto frontier, achieving a 3.62x mean speedup while improving average accuracy. Code is available at this https URL
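
A generic confidence-threshold unmasking loop conveys the intuition behind confidence-adaptive decoding. Each step, every masked position that the model is confident about is committed in parallel, so easy text needs fewer model calls. This is a sketch, not CD4LM's algorithm. The fixed threshold is an assumption, and `toy_predict` is a hypothetical stand-in for a diffusion LM's denoising step. In the paper, it is DSCD training that makes aggressive skipping safe.

```python
def confidence_adaptive_decode(length, predict, threshold=0.9):
    """Fill a fully masked sequence. Each step commits every masked position
    whose confidence reaches the threshold, and always at least one."""
    seq = [None] * length
    steps = 0
    while None in seq:
        steps += 1
        preds = predict(seq)  # {position: (token, confidence)} for masked positions
        ready = [p for p, (_, conf) in preds.items() if conf >= threshold]
        if not ready:  # guarantee progress: commit the single most confident token
            ready = [max(preds, key=lambda p: preds[p][1])]
        for p in ready:
            seq[p] = preds[p][0]
    return seq, steps

def toy_predict(seq):
    """Hypothetical model: every third position is 'easy'; other positions
    become more confident as their neighbours are revealed."""
    out = {}
    for p, tok in enumerate(seq):
        if tok is not None:
            continue
        if p % 3 == 0:
            conf = 0.95
        else:
            known = sum(1 for q in (p - 1, p + 1) if 0 <= q < len(seq) and seq[q] is not None)
            conf = 0.6 + 0.2 * known
        out[p] = (f"t{p}", conf)
    return out

tokens, steps = confidence_adaptive_decode(6, toy_predict)
print(tokens, steps)  # all six tokens decoded in fewer than six model calls
```

Lowering the threshold trades quality for fewer steps. Per the abstract, a consistency-distilled student is what lets CAD push that trade-off further than heuristic acceleration.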

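[NLP-6] From XAI to Stories: A Factorial Study of LLM-Generated Explanation Quality

[Quick Read]: This paper studies how to turn the numerical feature attributions produced by Explainable AI (XAI) methods into high-quality Natural Language Explanations (NLEs) for time-series forecasting, and which factors drive NLE quality. The key is a systematic factorial experiment over four variables:

- the forecasting model (XGBoost, Random Forest, MLP, SARIMAX);
- the XAI method (SHAP, LIME, or a no-XAI baseline);
- the LLM (GPT-4o, Llama-3-8B, DeepSeek-R1);
- the prompting strategy (eight in total).

Explanations are scored on multiple criteria with G-Eval, an LLM-as-a-judge framework. The study identifies the dominant factors and some counterintuitive effects. The choice of LLM matters more than every other factor. The study also finds an “interpretability paradox”: the classical statistical model SARIMAX, despite higher prediction accuracy, yields lower-quality NLEs than the machine learning models.

Link: https://arxiv.org/abs/2601.02224

Authors: Fabian Lukassen,Jan Herrmann,Christoph Weisser,Benjamin Saefken,Thomas Kneib

Affiliations: University of Göttingen; BASF SE; TU Clausthal

Subjects: Computation and Language (cs.CL)

Comments: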

Abstract:Explainable AI (XAI) methods like SHAP and LIME produce numerical feature attributions that remain inaccessible to non-expert users. Prior work has shown that Large Language Models (LLMs) can transform these outputs into natural language explanations (NLEs), but it remains unclear which factors contribute to high-quality explanations. We present a systematic factorial study investigating how forecasting model choice, XAI method, LLM selection, and prompting strategy affect NLE quality. Our design spans four models (XGBoost (XGB), Random Forest (RF), Multilayer Perceptron (MLP), and SARIMAX - comparing black-box Machine-Learning (ML) against classical time-series approaches), three XAI conditions (SHAP, LIME, and a no-XAI baseline), three LLMs (GPT-4o, Llama-3-8B, DeepSeek-R1), and eight prompting strategies. Using G-Eval, an LLM-as-a-judge evaluation method, with dual LLM judges and four evaluation criteria, we evaluate 660 explanations for time-series forecasting. Our results suggest that: (1) XAI provides only small improvements over no-XAI baselines, and only for expert audiences; (2) LLM choice dominates all other factors, with DeepSeek-R1 outperforming GPT-4o and Llama-3; (3) we observe an interpretability paradox: in our setting, SARIMAX yielded lower NLE quality than ML models despite higher prediction accuracy; (4) zero-shot prompting is competitive with self-consistency at 7-times lower cost; and (5) chain-of-thought hurts rather than helps.
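
The size of the design space follows from crossing the four factors listed above. The eight prompting strategies are not named in the abstract, so they appear below as placeholder labels. The study reports 660 evaluated explanations; how those are distributed over the cells is not described here, so the sketch only counts the cells.

```python
from itertools import product

models = ["XGBoost", "Random Forest", "MLP", "SARIMAX"]
xai_methods = ["SHAP", "LIME", "no-XAI"]
llms = ["GPT-4o", "Llama-3-8B", "DeepSeek-R1"]
prompt_ids = [f"prompt_{i}" for i in range(1, 9)]  # placeholder names for the 8 strategies

conditions = list(product(models, xai_methods, llms, prompt_ids))
print(len(conditions))  # 4 * 3 * 3 * 8 cells in the full factorial
print(conditions[0])    # ('XGBoost', 'SHAP', 'GPT-4o', 'prompt_1')
```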

[NLP-7] ARCADE: A City-Scale Corpus for Fine-Grained Arabic Dialect Tagging

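[Quick Read]: This paper addresses the lack of fine-grained, city-level dialect annotation for Arabic speech, which makes it hard to map speech to specific city-level dialect sources. The key is ARCADE (Arabic Radio Corpus for Audio Dialect Evaluation), the first Arabic speech corpus designed with city-level dialect granularity. It is built from 30-second clips captured from radio streaming services across the Arab world. Each clip is annotated by one to three native speakers with emotion, speech type, dialect category, and a validity flag. The resulting corpus contains 6,907 annotations over 3,790 unique audio segments from 58 cities in 19 countries. It provides a reliable benchmark for city-level dialect identification and a basis for multi-task learning.

Link: https://arxiv.org/abs/2601.02209

Authors: Omer Nacar,Serry Sibaee,Adel Ammar,Yasser Alhabashi,Nadia Samer Sibai,Yara Farouk Ahmed,Ahmed Saud Alqusaiyer,Sulieman Mahmoud AlMahmoud,Abdulrhman Mamdoh Mukhaniq,Lubaba Raed,Sulaiman Mohammed Alatwah,Waad Nasser Alqahtani,Yousif Abdulmajeed Alnasser,Mohamed Aziz Khadraoui,Wadii Boulila

Affiliations: Tuwaiq Academy; Prince Sultan University; Higher School of Communication of Tunis (SUP’COM)

Subjects: Computation and Language (cs.CL); Computers and Society (cs.CY); Sound (cs.SD)

Comments: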

Abstract:The Arabic language is characterized by a rich tapestry of regional dialects that differ substantially in phonetics and lexicon, reflecting the geographic and cultural diversity of its speakers. Despite the availability of many multi-dialect datasets, mapping speech to fine-grained dialect sources, such as cities, remains underexplored. We present ARCADE (Arabic Radio Corpus for Audio Dialect Evaluation), the first Arabic speech dataset designed explicitly with city-level dialect granularity. The corpus comprises Arabic radio speech collected from streaming services across the Arab world. Our data pipeline captures 30-second segments from verified radio streams, encompassing both Modern Standard Arabic (MSA) and diverse dialectal speech. To ensure reliability, each clip was annotated by one to three native Arabic reviewers who assigned rich metadata, including emotion, speech type, dialect category, and a validity flag for dialect identification tasks. The resulting corpus comprises 6,907 annotations and 3,790 unique audio segments spanning 58 cities across 19 countries. These fine-grained annotations enable robust multi-task learning, serving as a benchmark for city-level dialect tagging. We detail the data collection methodology, assess audio quality, and provide a comprehensive analysis of label distributions. The dataset is available on: this https URL

[NLP-8] Toward Global Large Language Models in Medicine

[Quick Read]: This paper addresses the uneven language coverage of current Large Language Models (LLMs) in medicine. Existing models are trained mainly on high-resource languages, which severely limits their usefulness for medical applications in low-resource languages. The key contribution is GlobMed, a multilingual medical dataset of over 500,000 entries covering 12 languages, four of them low-resource. On top of it, the authors build two resources:

- GlobMed-Bench, which assesses 56 proprietary and open-weight LLMs;
- GlobMed-LLMs, a family of multilingual medical models with 1.7B to 8B parameters.

Trained on GlobMed, GlobMed-LLMs improve average performance by over 40% relative to baseline models, and by more than threefold on low-resource languages. This lays groundwork for equitable, accessible medical AI worldwide.

Link: https://arxiv.org/abs/2601.02186

Authors: Rui Yang,Huitao Li,Weihao Xuan,Heli Qi,Xin Li,Kunyu Yu,Yingjian Chen,Rongrong Wang,Jacques Behmoaras,Tianxi Cai,Bibhas Chakraborty,Qingyu Chen,Lionel Tim-Ee Cheng,Marie-Louise Damwanza,Chido Dzinotyiwei,Aosong Feng,Chuan Hong,Yusuke Iwasawa,Yuhe Ke,Linah Kitala,Taehoon Ko,Jisan Lee,Irene Li,Jonathan Chong Kai Liew,Hongfang Liu,Lian Leng Low,Edison Marrese-Taylor,Yutaka Matsuo,Isheanesu Misi,Yilin Ning,Jasmine Chiat Ling Ong,Marcus Eng Hock Ong,Enrico Petretto,Hossein Rouhizadeh,Abiram Sandralegar,Oren Schreier,Iain Bee Huat Tan,Patrick Tan,Daniel Shu Wei Ting,Junjue Wang,Chunhua Weng,Matthew Yu Heng Wong,Fang Wu,Yunze Xiao,Xuhai Xu,Qingcheng Zeng,Zhuo Zheng,Yifan Peng,Douglas Teodoro,Nan Liu

Affiliations: Unknown

Subjects: Computation and Language (cs.CL)

Comments: 182 pages, 65 figures

Abstract:Despite continuous advances in medical technology, the global distribution of health care resources remains uneven. The development of large language models (LLMs) has transformed the landscape of medicine and holds promise for improving health care quality and expanding access to medical information globally. However, existing LLMs are primarily trained on high-resource languages, limiting their applicability in global medical scenarios. To address this gap, we constructed GlobMed, a large multilingual medical dataset, containing over 500,000 entries spanning 12 languages, including four low-resource languages. Building on this, we established GlobMed-Bench, which systematically assesses 56 state-of-the-art proprietary and open-weight LLMs across multiple multilingual medical tasks, revealing significant performance disparities across languages, particularly for low-resource languages. Additionally, we introduced GlobMed-LLMs, a suite of multilingual medical LLMs trained on GlobMed, with parameters ranging from 1.7B to 8B. GlobMed-LLMs achieved an average performance improvement of over 40% relative to baseline models, with a more than threefold increase in performance on low-resource languages. Together, these resources provide an important foundation for advancing the equitable development and application of LLMs globally, enabling broader language communities to benefit from technological advances.

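[NLP-9] Confidence Estimation for LLMs in Multi-turn Interactions

[Quick Read]: This paper addresses the reliability of confidence estimation for Large Language Models (LLMs) in multi-turn dialogue, where context accumulates and ambiguity is progressively resolved. Existing methods are generally poorly calibrated, and their confidence does not rise monotonically as more information arrives. The key contribution is the first systematic evaluation framework for confidence in multi-turn interactions, built on two criteria: per-turn calibration and monotonicity of confidence. It comes with two new tools: InfoECE, a length-normalized Expected Calibration Error metric, and the “Hinter-Guesser” paradigm for generating controlled evaluation datasets. Experiments show that widely used confidence techniques perform poorly in multi-turn settings. The authors' logit-based probe, P(Sufficient), performs comparatively better, though considerable room for improvement remains.

Link: https://arxiv.org/abs/2601.02179

Authors: Caiqi Zhang,Ruihan Yang,Xiaochen Zhu,Chengzu Li,Tiancheng Hu,Yijiang River Dong,Deqing Yang,Nigel Collier

Affiliations: University of Cambridge; Fudan University

Subjects: Computation and Language (cs.CL)

Comments: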

Abstract:While confidence estimation is a promising direction for mitigating hallucinations in Large Language Models (LLMs), current research dominantly focuses on single-turn settings. The dynamics of model confidence in multi-turn conversations, where context accumulates and ambiguity is progressively resolved, remain largely unexplored. Reliable confidence estimation in multi-turn settings is critical for many downstream applications, such as autonomous agents and human-in-the-loop systems. This work presents the first systematic study of confidence estimation in multi-turn interactions, establishing a formal evaluation framework grounded in two key desiderata: per-turn calibration and monotonicity of confidence as more information becomes available. To facilitate this, we introduce novel metrics, including a length-normalized Expected Calibration Error (InfoECE), and a new “Hinter-Guesser” paradigm for generating controlled evaluation datasets. Our experiments reveal that widely-used confidence techniques struggle with calibration and monotonicity in multi-turn dialogues. We propose P(Sufficient), a logit-based probe that achieves comparatively better performance, although the task remains far from solved. Our work provides a foundational methodology for developing more reliable and trustworthy conversational agents.
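
The abstract describes InfoECE only as a length-normalized Expected Calibration Error and does not give the exact normalization. The sketch below therefore shows the standard binned ECE that such a metric builds on. It also includes a simple monotonicity rate, the fraction of consecutive turns in which confidence does not drop, as a hypothetical stand-in for the monotonicity criterion. Neither function is the paper's definition.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Standard binned ECE: bin-size-weighted mean of |accuracy - confidence|."""
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 goes in the top bin
        bins[idx].append((c, ok))
    n = len(confidences)
    ece = 0.0
    for b in bins:
        if b:
            acc = sum(ok for _, ok in b) / len(b)
            avg_conf = sum(c for c, _ in b) / len(b)
            ece += len(b) / n * abs(acc - avg_conf)
    return ece

def monotonicity_rate(turn_confidences):
    """Fraction of consecutive turns in which confidence does not decrease."""
    pairs = list(zip(turn_confidences, turn_confidences[1:]))
    return sum(b >= a for a, b in pairs) / len(pairs)

print(expected_calibration_error([0.9, 0.9, 0.1, 0.1], [1, 1, 0, 0]))  # about 0.1
print(monotonicity_rate([0.2, 0.5, 0.4, 0.8]))                        # 2 of 3 steps
```

In a multi-turn setting, ECE would be computed separately at each turn (per-turn calibration), and the monotonicity rate would be averaged over dialogues.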

[NLP-10] EverMemOS: A Self-Organizing Memory Operating System for Structured Long-Horizon Reasoning

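[Quick Read]: This paper addresses the difficulty Large Language Models (LLMs) have in sustaining coherent behavior over long-term interactions because of their limited context windows. Existing memory systems typically store isolated records and retrieve fragments, so they cannot consolidate evolving user states or resolve conflicts. The key contribution is EverMemOS, a self-organizing memory operating system inspired by memory engrams, built on three mechanisms:

1. Episodic Trace Formation converts dialogue streams into MemCells containing episodic traces, atomic facts, and time-bounded Foresight signals.
2. Semantic Consolidation organizes MemCells into thematic MemScenes, distills stable semantic structures, and updates user profiles.
3. Reconstructive Recollection performs MemScene-guided agentic retrieval to compose the necessary and sufficient context for downstream reasoning.

The architecture achieves state-of-the-art results on memory-augmented reasoning benchmarks (LoCoMo and LongMemEval). It also supports advanced interaction features such as user profiling and Foresight.

Link: https://arxiv.org/abs/2601.02163

Authors: Chuanrui Hu,Xingze Gao,Zuyi Zhou,Dannong Xu,Yi Bai,Xintong Li,Hui Zhang,Tong Li,Chong Zhang,Lidong Bing,Yafeng Deng

Affiliations: Unknown

Subjects: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: 16 pages, 6 figures, 12 tables. Code available at this https URL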

Abstract:Large Language Models (LLMs) are increasingly deployed as long-term interactive agents, yet their limited context windows make it difficult to sustain coherent behavior over extended interactions. Existing memory systems often store isolated records and retrieve fragments, limiting their ability to consolidate evolving user states and resolve conflicts. We introduce EverMemOS, a self-organizing memory operating system that implements an engram-inspired lifecycle for computational memory. Episodic Trace Formation converts dialogue streams into MemCells that capture episodic traces, atomic facts, and time-bounded Foresight signals. Semantic Consolidation organizes MemCells into thematic MemScenes, distilling stable semantic structures and updating user profiles. Reconstructive Recollection performs MemScene-guided agentic retrieval to compose the necessary and sufficient context for downstream reasoning. Experiments on LoCoMo and LongMemEval show that EverMemOS achieves state-of-the-art performance on memory-augmented reasoning tasks. We further report a profile study on PersonaMem v2 and qualitative case studies illustrating chat-oriented capabilities such as user profiling and Foresight. Code is available at this https URL.
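
The lifecycle above can be pictured as three plain data operations. The sketch below is hypothetical and does not reflect EverMemOS's actual data model or API. The field names, the theme-assignment function, and the expiry rule for Foresight are illustrative assumptions. It shows MemCells being grouped into MemScenes, and a scene being recalled into a context that includes a Foresight signal only while it is still valid.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Optional

@dataclass
class MemCell:
    episode: str                        # episodic trace of one dialogue segment
    facts: List[str]                    # atomic facts extracted from it
    foresight: str = ""                 # time-bounded prospective signal
    valid_until: Optional[date] = None  # when the foresight signal expires

@dataclass
class MemScene:
    theme: str
    cells: List[MemCell] = field(default_factory=list)

def consolidate(cells, theme_of) -> Dict[str, MemScene]:
    """Group MemCells into thematic MemScenes via a theme-assignment function."""
    scenes: Dict[str, MemScene] = {}
    for cell in cells:
        theme = theme_of(cell)
        scenes.setdefault(theme, MemScene(theme)).cells.append(cell)
    return scenes

def recollect(scene: MemScene, today: date) -> List[str]:
    """Compose context from one scene: its facts plus unexpired foresight."""
    context = [fact for cell in scene.cells for fact in cell.facts]
    context += [cell.foresight for cell in scene.cells
                if cell.foresight and (cell.valid_until is None or today <= cell.valid_until)]
    return context

cells = [
    MemCell("User mentioned an upcoming trip", ["User is flying to Oslo"],
            foresight="User is travelling this week", valid_until=date(2026, 1, 10)),
    MemCell("User talked about their job", ["User works night shifts"]),
]
scenes = consolidate(cells, lambda c: "travel" if "trip" in c.episode else "work")
print(recollect(scenes["travel"], date(2026, 1, 6)))   # facts plus active foresight
print(recollect(scenes["travel"], date(2026, 1, 12)))  # foresight has expired
```

In the real system, theme assignment, profile updates, and retrieval are driven by an LLM agent rather than a fixed lambda.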

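[NLP-11] FormationEval, an open multiple-choice benchmark for petroleum geoscience

[Quick Read]: This paper addresses the lack of specialized, reliable benchmarks for evaluating large language models in petroleum geoscience and subsurface disciplines. The key contribution is FormationEval, an open multiple-choice benchmark of 505 questions across seven domains, including petrophysics, petroleum geology, and reservoir engineering. The questions are derived from three authoritative sources using a reasoning model and a concept-based approach that avoids verbatim copying of copyrighted text. Each question carries source metadata for traceability. The evaluation covers 72 models from major providers (OpenAI, Anthropic, Google, Meta) as well as open-weight alternatives. The results reveal performance differences between closed and open-weight models and across domains, along with residual biases such as answer-length bias. This gives future research a standardized and transparent evaluation tool.

Link: https://arxiv.org/abs/2601.02158

Authors: Almaz Ermilov

Affiliations: UiT The Arctic University of Norway

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG); Geophysics (physics.geo-ph)

Comments: 24 pages, 8 figures, 10 tables; benchmark and code at this https URL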

Abstract:This paper presents FormationEval, an open multiple-choice question benchmark for evaluating language models on petroleum geoscience and subsurface disciplines. The dataset contains 505 questions across seven domains including petrophysics, petroleum geology and reservoir engineering, derived from three authoritative sources using a reasoning model with detailed instructions and a concept-based approach that avoids verbatim copying of copyrighted text. Each question includes source metadata to support traceability and audit. The evaluation covers 72 models from major providers including OpenAI, Anthropic, Google, Meta and open-weight alternatives. The top performers achieve over 97% accuracy, with Gemini 3 Pro Preview reaching 99.8%, while tier and domain gaps persist. Among open-weight models, GLM-4.7 leads at 98.6%, with several DeepSeek, Llama, Qwen and Mistral models also exceeding 93%. The performance gap between open-weight and closed models is narrower than expected, with several lower-cost open-weight models exceeding 90% accuracy. Petrophysics emerges as the most challenging domain across all models, while smaller models show wider performance variance. Residual length bias in the dataset (correct answers tend to be longer) is documented along with bias mitigation strategies applied during construction. The benchmark, evaluation code and results are publicly available.
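
The residual length bias mentioned above (correct answers tend to be longer) can be checked with a one-pass diagnostic. The sketch counts how often the correct option is strictly the longest and compares that with chance. The field names `options` and `answer` and the two sample items are hypothetical; they are not the benchmark's actual schema or content. If the rate is well above 1/(number of options), a model could score above chance simply by picking the longest answer.

```python
def longest_answer_rate(questions):
    """Fraction of questions whose correct option is strictly the longest."""
    hits = 0
    for q in questions:
        lengths = [len(option) for option in q["options"]]
        longest = max(lengths)
        if lengths[q["answer"]] == longest and lengths.count(longest) == 1:
            hits += 1
    return hits / len(questions)

questions = [  # hypothetical items; "options"/"answer" are illustrative field names
    {"options": ["Shale", "Sandstone with high porosity", "Salt", "Coal"], "answer": 1},
    {"options": ["Gamma ray", "Neutron porosity log", "Sonic", "Resistivity"], "answer": 0},
]
rate = longest_answer_rate(questions)
chance = 1 / 4
print(rate, chance)
```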

[NLP-12] Entropy-Adaptive Fine-Tuning: Resolving Confident Conflicts to Mitigate Forgetting

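[Quick Read]: This paper addresses the catastrophic forgetting that Supervised Fine-Tuning (SFT) frequently causes during domain adaptation, and traces it to a distributional gap between SFT targets and the model's internal beliefs. On-policy RL aligns with those beliefs, whereas SFT forces the model to fit external labels. The gap surfaces as “Confident Conflicts” tokens: the model assigns low probability to the label while its own prediction has low entropy, meaning it is confident in a different answer. These tokens trigger destructive gradient updates. The proposed Entropy-Adaptive Fine-Tuning (EAFT) uses token-level entropy as a gating mechanism to distinguish epistemic uncertainty from knowledge conflict. It learns from uncertain samples while suppressing gradients on conflicting data. Across Qwen and GLM models from 4B to 32B parameters, EAFT matches the downstream performance of standard SFT while significantly mitigating the loss of general capabilities.

Link: https://arxiv.org/abs/2601.02151

Authors: Muxi Diao,Lele Yang,Wuxuan Gong,Yutong Zhang,Zhonghao Yan,Yufei Han,Kongming Liang,Weiran Xu,Zhanyu Ma

Affiliations: Beijing University of Posts and Telecommunications; Zhongguancun Academy

Subjects: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: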

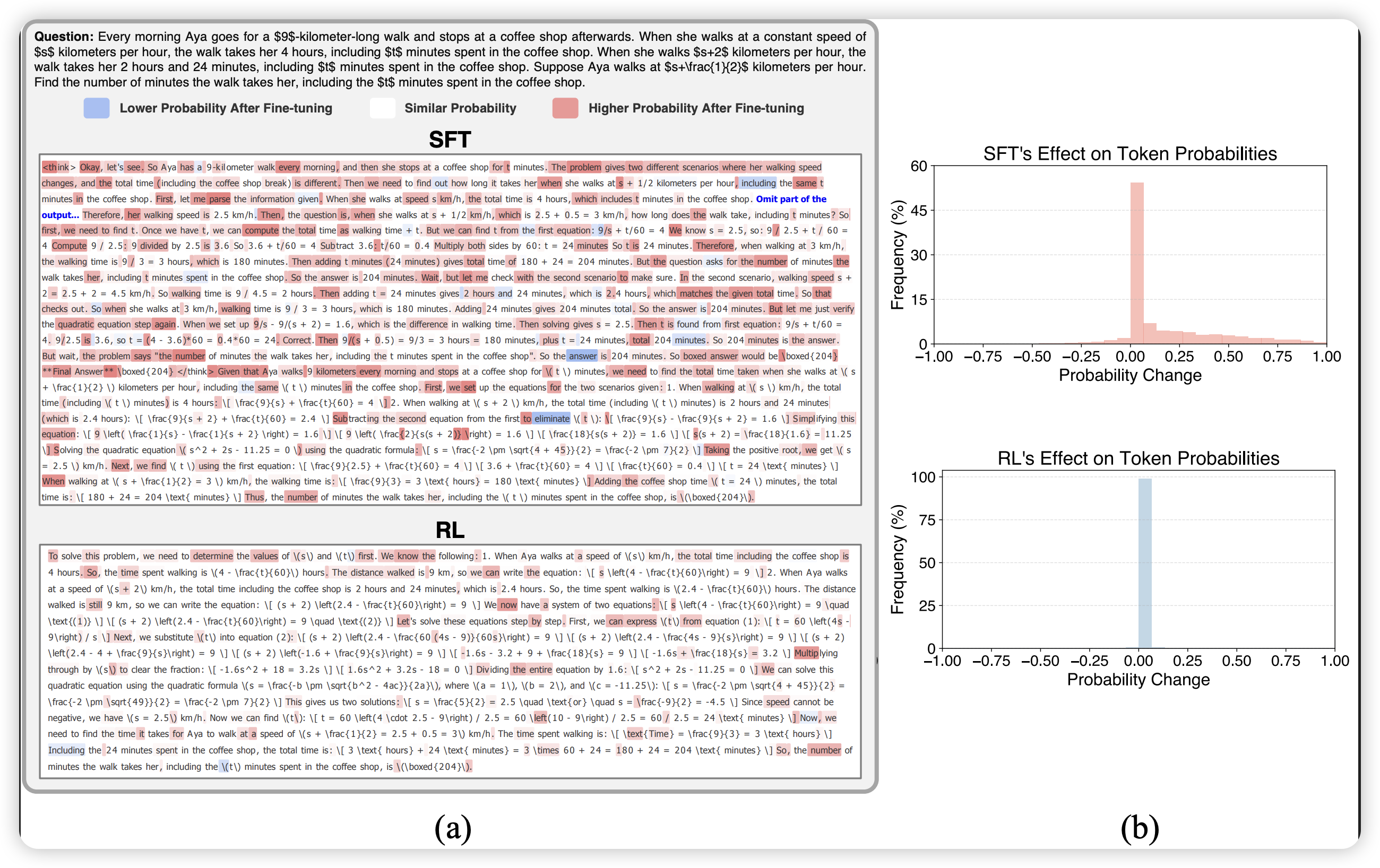

Abstract:Supervised Fine-Tuning (SFT) is the standard paradigm for domain adaptation, yet it frequently incurs the cost of catastrophic forgetting. In sharp contrast, on-policy Reinforcement Learning (RL) effectively preserves general capabilities. We investigate this discrepancy and identify a fundamental distributional gap: while RL aligns with the model’s internal belief, SFT forces the model to fit external supervision. This mismatch often manifests as “Confident Conflicts” tokens characterized by low probability but low entropy. In these instances, the model is highly confident in its own prediction but is forced to learn a divergent ground truth, triggering destructive gradient updates. To address this, we propose Entropy-Adaptive Fine-Tuning (EAFT). Unlike methods relying solely on prediction probability, EAFT utilizes token-level entropy as a gating mechanism to distinguish between epistemic uncertainty and knowledge conflict. This allows the model to learn from uncertain samples while suppressing gradients on conflicting data. Extensive experiments on Qwen and GLM series (ranging from 4B to 32B parameters) across mathematical, medical, and agentic domains confirm our hypothesis. EAFT consistently matches the downstream performance of standard SFT while significantly mitigating the degradation of general capabilities.
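
The abstract does not give EAFT's exact gating formula, so the following is a minimal sketch under one assumption: each token's cross-entropy is scaled by its normalized predictive entropy H(p)/log V. This reproduces the qualitative behavior described above. A confident conflict (low entropy, low target probability) contributes almost nothing, while an uncertain token keeps its full loss. It is an illustration, not the authors' loss.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_gated_nll(logits_per_token, targets):
    """Mean token cross-entropy, each term scaled by normalised entropy H(p)/log V.

    Low-entropy (confident) predictions get small weights, so a "confident
    conflict" barely moves the model. In an autograd framework the weight
    would be detached so that it only rescales the loss."""
    loss = 0.0
    for logits, target in zip(logits_per_token, targets):
        p = softmax(logits)
        entropy = -sum(pi * math.log(pi) for pi in p if pi > 0)
        weight = entropy / math.log(len(p))  # 0 = fully confident, 1 = uniform
        loss += weight * -math.log(p[target])
    return loss / len(targets)

def plain_nll(logits_per_token, targets):
    return sum(-math.log(softmax(l)[t]) for l, t in zip(logits_per_token, targets)) / len(targets)

conflict = [[10.0, 0.0, 0.0, 0.0]]   # model is sure of token 0, label says token 1
uncertain = [[0.0, 0.0, 0.0, 0.0]]   # model has no preference
print(plain_nll(conflict, [1]), entropy_gated_nll(conflict, [1]))
print(plain_nll(uncertain, [1]), entropy_gated_nll(uncertain, [1]))
```

Gating on entropy rather than on target probability alone is what separates the two cases. Both tokens have a low target probability, but only the first one is a conflict with a confident internal belief.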

[NLP-13] Routing by Analogy: kNN-Augmented Expert Assignment for Mixture-of-Experts

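[Quick Read]: This paper addresses the brittleness of routing in Mixture-of-Experts (MoE) architectures under distribution shift. The router is typically trained once and then frozen, so it adapts poorly to new settings. The proposed kNN-MoE is a retrieval-augmented routing framework. Offline, it builds a memory whose entries store token-wise routing logits, optimized directly to maximize likelihood on a reference set. At inference, it reuses the expert assignments of similar past cases. The aggregate similarity of the retrieved neighbors serves as a confidence-driven mixing coefficient, so the method falls back to the frozen router when no relevant cases are found. In experiments, kNN-MoE outperforms zero-shot baselines and rivals computationally expensive supervised fine-tuning.

Link: https://arxiv.org/abs/2601.02144

Authors: Boxuan Lyu,Soichiro Murakami,Hidetaka Kamigaito,Peinan Zhang

Affiliations: Institute of Science Tokyo; CyberAgent; Nara Institute of Science and Technology

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: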

Abstract:Mixture-of-Experts (MoE) architectures scale large language models efficiently by employing a parametric “router” to dispatch tokens to a sparse subset of experts. Typically, this router is trained once and then frozen, rendering routing decisions brittle under distribution shifts. We address this limitation by introducing kNN-MoE, a retrieval-augmented routing framework that reuses optimal expert assignments from a memory of similar past cases. This memory is constructed offline by directly optimizing token-wise routing logits to maximize the likelihood on a reference set. Crucially, we use the aggregate similarity of retrieved neighbors as a confidence-driven mixing coefficient, thus allowing the method to fall back to the frozen router when no relevant cases are found. Experiments show kNN-MoE outperforms zero-shot baselines and rivals computationally expensive supervised fine-tuning.
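
The inference-time mixing step can be sketched as follows. The sketch assumes the offline memory of (key, optimized routing logits) pairs already exists. The cosine similarity, the mean-similarity mixing coefficient clipped to [0, 1], and the similarity-weighted averaging are illustrative choices, not necessarily the paper's exact formulation. The point it shows is the fallback: when the retrieved neighbors are irrelevant, the coefficient goes to zero and the frozen router's choice is returned unchanged.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def knn_route(hidden, router_logits, memory, k=2, top_experts=2):
    """Mix the frozen router's logits with logits retrieved from memory.

    memory: list of (key_vector, routing_logits) pairs, assumed built offline.
    The mixing coefficient is the mean similarity of the k nearest neighbours,
    clipped to [0, 1], so irrelevant neighbours leave the router unchanged."""
    neighbours = sorted(((cosine(hidden, key), logits) for key, logits in memory),
                        key=lambda pair: pair[0], reverse=True)[:k]
    lam = max(0.0, min(1.0, sum(s for s, _ in neighbours) / len(neighbours)))
    weights = [max(s, 0.0) for s, _ in neighbours]
    wsum = sum(weights)
    retrieved = [
        sum(w * logits[e] for w, (_, logits) in zip(weights, neighbours)) / wsum if wsum > 0 else 0.0
        for e in range(len(router_logits))
    ]
    mixed = [lam * r + (1 - lam) * g for r, g in zip(retrieved, router_logits)]
    ranked = sorted(range(len(mixed)), key=lambda e: mixed[e], reverse=True)
    return ranked[:top_experts], lam

memory = [([1.0, 0.0], [0.0, 0.0, 5.0, 5.0]), ([0.9, 0.1], [0.0, 0.0, 4.0, 6.0])]
router_logits = [3.0, 2.0, 1.0, 0.0]
print(knn_route([1.0, 0.0], router_logits, memory))   # close to memory: retrieved experts win
print(knn_route([-1.0, 0.0], router_logits, memory))  # no relevant case: router's choice
```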

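[NLP-14] Towards Multi-Level Transcript Segmentation: LoRA Fine-Tuning for Table-of-Contents Generation (INTERSPEECH 2025)

[Quick Read]: This paper addresses hierarchical topic segmentation of speech transcripts, which benefits downstream processing and users who rely on written text for accessibility. The key contribution is a new multi-level segmentation approach that generates hierarchical tables of contents capturing both topic and subtopic boundaries. The authors compare zero-shot prompting with LoRA fine-tuning of large language models and explore adding high-level speech pause features. On English meeting recordings and multilingual lecture transcripts (Portuguese, German), the approach significantly outperforms established topic segmentation baselines. The authors also adapt a common evaluation measure so that a single metric accounts for all hierarchical levels.

Link: https://arxiv.org/abs/2601.02128

Authors: Steffen Freisinger,Philipp Seeberger,Thomas Ranzenberger,Tobias Bocklet,Korbinian Riedhammer

Affiliations: Unknown

Subjects: Computation and Language (cs.CL); Audio and Speech Processing (eess.AS)

Comments: Published in Proceedings of Interspeech 2025. Please cite the proceedings version (DOI: https://doi.org/10.21437/Interspeech.2025-2792 )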

Abstract:Segmenting speech transcripts into thematic sections benefits both downstream processing and users who depend on written text for accessibility. We introduce a novel approach to hierarchical topic segmentation in transcripts, generating multi-level tables of contents that capture both topic and subtopic boundaries. We compare zero-shot prompting and LoRA fine-tuning on large language models, while also exploring the integration of high-level speech pause features. Evaluations on English meeting recordings and multilingual lecture transcripts (Portuguese, German) show significant improvements over established topic segmentation baselines. Additionally, we adapt a common evaluation measure for multi-level segmentation, taking into account all hierarchical levels within one metric.

[NLP-15] DeCode: Decoupling Content and Delivery for Medical QA

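[Quick Read]: This paper addresses a shortcoming of Large Language Models (LLMs) in clinical settings: their answers are often medically correct but poorly matched to the individual patient's context and needs. The key contribution is DeCode, a training-free, model-agnostic framework that adapts existing LLMs to produce contextualized answers without modifying their parameters. On OpenAI HealthBench, DeCode raises the previous state of the art from 28.4% to 49.8%, a 75% relative improvement, which indicates its effectiveness for clinical question answering.

Link: https://arxiv.org/abs/2601.02123

Authors: Po-Jen Ko,Chen-Han Tsai,Yu-Shao Peng

Affiliations: National Taiwan University; HTC DeepQ

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: Preprint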

Abstract:Large language models (LLMs) exhibit strong medical knowledge and can generate factually accurate responses. However, existing models often fail to account for individual patient contexts, producing answers that are clinically correct yet poorly aligned with patients’ needs. In this work, we introduce DeCode, a training-free, model-agnostic framework that adapts existing LLMs to produce contextualized answers in clinical settings. We evaluate DeCode on OpenAI HealthBench, a comprehensive and challenging benchmark designed to assess clinical relevance and validity of LLM responses. DeCode improves the previous state of the art from 28.4% to 49.8% , corresponding to a 75% relative improvement. Experimental results suggest the effectiveness of DeCode in improving clinical question answering of LLMs.
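
The reported relative improvement follows directly from the two HealthBench scores. The absolute gain is 21.4 percentage points:

```latex
\frac{49.8 - 28.4}{28.4} = \frac{21.4}{28.4} \approx 0.754 \quad (\approx 75\%\ \text{relative improvement})
```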

[NLP-16] Deferred Commitment Decoding for Diffusion Language Models with Confidence-Aware Sliding Windows

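[Quick Read]: This paper addresses Boundary-Induced Context Truncation (BICT) in block-based diffusion language models. Undecoded tokens near block boundaries are forced to commit without access to nearby future context. This raises uncertainty and degrades generation quality, especially on tasks requiring precise reasoning such as mathematical problem solving and code generation. The proposed Deferred Commitment Decoding (DCD) is a training-free decoding strategy that maintains a confidence-aware sliding window over masked tokens. It resolves low-uncertainty tokens early and defers high-uncertainty tokens until sufficient contextual evidence is available. This enables bidirectional information flow within the window without sacrificing efficiency. Compared with fixed block-based decoding, DCD improves generation accuracy by 1.39% on average (up to 9.0%) at comparable time cost.

Link: https://arxiv.org/abs/2601.02076

Authors: Yingte Shu,Yuchuan Tian,Chao Xu,Yunhe Wang,Hanting Chen

Affiliations: Peking University; Huawei Technologies Co., Ltd

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: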

Abstract:Diffusion language models (DLMs) have recently emerged as a strong alternative to autoregressive models by enabling parallel text generation. To improve inference efficiency and KV-cache compatibility, prior work commonly adopts block-based diffusion, decoding tokens block by block. However, this paradigm suffers from a structural limitation that we term Boundary-Induced Context Truncation (BICT): undecoded tokens near block boundaries are forced to commit without access to nearby future context, even when such context could substantially reduce uncertainty. This limitation degrades decoding confidence and generation quality, especially for tasks requiring precise reasoning, such as mathematical problem solving and code generation. We propose Deferred Commitment Decoding (DCD), a novel, training-free decoding strategy that mitigates this issue. DCD maintains a confidence-aware sliding window over masked tokens, resolving low-uncertainty tokens early while deferring high-uncertainty tokens until sufficient contextual evidence becomes available. This design enables effective bidirectional information flow within the decoding window without sacrificing efficiency. Extensive experiments across multiple diffusion language models, benchmarks, and caching configurations show that DCD improves generation accuracy by 1.39% with comparable time on average compared to fixed block-based diffusion methods, with the most significant improvement reaching 9.0%. These results demonstrate that deferring token commitment based on uncertainty is a simple yet effective principle for improving both the quality and efficiency of diffusion language model decoding.
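
The deferral idea can be sketched as a loop whose window always covers the leftmost still-masked positions instead of a fixed block. Confident tokens in the window are committed. Uncertain ones stay masked and remain in the window until their neighbors, including future context, have been revealed. This is a sketch, not the authors' implementation. The window size, threshold, and `toy_predict` model are hypothetical; in the toy, a token becomes confident once its right-hand neighbor is known.

```python
def deferred_commitment_decode(length, predict, window=3, threshold=0.9):
    """Decode over a sliding window of the leftmost `window` masked positions.
    Confident tokens are committed; uncertain ones are deferred (left masked)."""
    seq = [None] * length
    steps = 0
    while None in seq:
        steps += 1
        masked = [i for i, tok in enumerate(seq) if tok is None]
        win = masked[:window]  # the window slides with the earliest masked tokens
        preds = predict(seq, win)  # {position: (token, confidence)}
        ready = [p for p in win if preds[p][1] >= threshold]
        if not ready:  # always make progress
            ready = [max(win, key=lambda p: preds[p][1])]
        for p in ready:
            seq[p] = preds[p][0]
    return seq, steps

def toy_predict(seq, positions):
    """Hypothetical model: odd positions are easy on their own; even positions
    become confident once their right-hand neighbour (future context) is known."""
    out = {}
    for p in positions:
        right_known = p + 1 >= len(seq) or seq[p + 1] is not None
        conf = 0.95 if (p % 2 == 1 or right_known) else 0.5
        out[p] = (f"t{p}", conf)
    return out

tokens, steps = deferred_commitment_decode(6, toy_predict)
print(tokens, steps)
```

Position 0 is deferred in the first step and committed only after position 1 is revealed. A fixed block boundary at that point would instead have forced an early, low-confidence commitment.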

[NLP-17] Cost-Efficient Cross-Lingual Retrieval-Augmented Generation for Low-Resource Languages: A Case Study in Bengali Agricultural Advisory

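【Quick Read】: This paper addresses limited access to agricultural knowledge in developing regions caused by a language barrier. Authoritative agricultural manuals are mostly written in English, while farmers communicate in low-resource local languages such as Bengali. The key to the solution is a cross-lingual Retrieval-Augmented Generation (RAG) framework with a translation-centric architecture. Bengali user queries are translated into English and enriched through domain-specific keyword injection, which aligns colloquial farmer terminology with scientific nomenclature. Dense vector retrieval over curated English agricultural manuals (e.g., FAO, IRRI) then supplies factual grounding for the answer, and the English response is translated back into Bengali. The result is an accurate, low-cost advisory service that can be deployed on consumer-grade hardware.

Link: https://arxiv.org/abs/2601.02065

Authors: Md. Asif Hossain,Nabil Subhan,Mantasha Rahman Mahi,Jannatul Ferdous Nabila

Affiliations: Unknown

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: 5 pages, 3 figures, 1 table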

Abstract:Access to reliable agricultural advisory remains limited in many developing regions due to a persistent language barrier: authoritative agricultural manuals are predominantly written in English, while farmers primarily communicate in low-resource local languages such as Bengali. Although recent advances in Large Language Models (LLMs) enable natural language interaction, direct generation in low-resource languages often exhibits poor fluency and factual inconsistency, while cloud-based solutions remain cost-prohibitive. This paper presents a cost-efficient, cross-lingual Retrieval-Augmented Generation (RAG) framework for Bengali agricultural advisory that emphasizes factual grounding and practical deployability. The proposed system adopts a translation-centric architecture in which Bengali user queries are translated into English, enriched through domain-specific keyword injection to align colloquial farmer terminology with scientific nomenclature, and answered via dense vector retrieval over a curated corpus of English agricultural manuals (FAO, IRRI). The generated English response is subsequently translated back into Bengali to ensure accessibility. The system is implemented entirely using open-source models and operates on consumer-grade hardware without reliance on paid APIs. Experimental evaluation demonstrates reliable source-grounded responses, robust rejection of out-of-domain queries, and an average end-to-end latency below 20 seconds. The results indicate that cross-lingual retrieval combined with controlled translation offers a practical and scalable solution for agricultural knowledge access in low-resource language settings.
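The translation-centric flow (translate, inject keywords, retrieve, generate, back-translate) can be sketched as a chain of plain functions. Everything here is a stand-in. `translate` is a dictionary lookup rather than a machine-translation model, `GLOSSARY` is a hypothetical colloquial-to-scientific mapping, retrieval uses bag-of-words cosine similarity instead of dense embeddings, and the "generator" simply returns the retrieved passage. The sketch only shows how the stages connect; it is not the authors' system.

```python
import math
from collections import Counter
from typing import Dict, List

# Hypothetical colloquial -> scientific mapping used for keyword injection.
GLOSSARY: Dict[str, str] = {
    "rice blast": "Magnaporthe oryzae",
    "leaf spot": "Cercospora",
}

# Toy stand-in for a curated corpus of English manual passages.
CORPUS: List[str] = [
    "Rice blast is caused by Magnaporthe oryzae.",
    "Cercospora leaf spot affects many crops.",
]


def translate(text: str, table: Dict[str, str]) -> str:
    # Placeholder for a machine-translation model: exact lookup, identity otherwise.
    return table.get(text, text)


def inject_keywords(query: str) -> str:
    # Append the scientific term for every colloquial phrase found in the query.
    extra = [sci for phrase, sci in GLOSSARY.items() if phrase in query.lower()]
    return " ".join([query] + extra)


def bag_of_words(text: str) -> Counter:
    return Counter(w.strip(".,;?!").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, k: int = 1) -> List[str]:
    q = bag_of_words(query)
    return sorted(CORPUS, key=lambda doc: cosine(q, bag_of_words(doc)), reverse=True)[:k]


def answer(bn_query: str, bn_to_en: Dict[str, str], en_to_bn: Dict[str, str]) -> str:
    en_query = inject_keywords(translate(bn_query, bn_to_en))
    passage = retrieve(en_query)[0]
    en_answer = passage  # a generator LLM would write an answer grounded in `passage`
    return translate(en_answer, en_to_bn)


# "QUERY_BN" stands in for a Bengali question; the table plays the MT model.
bn_to_en = {"QUERY_BN": "My rice has rice blast, what should I do?"}
print(answer("QUERY_BN", bn_to_en, en_to_bn={}))
```

Keyword injection matters here because the scientific term it appends matches vocabulary in the manual passage, which strengthens retrieval beyond what the colloquial query alone would achieve.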

[NLP-18] Simulated Reasoning is Reasoning

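【Quick Read】: This paper examines the nature of reasoning in Foundational Models (FMs). These models can solve problems by imitating "thinking out loud", yet their reasoning lacks the grounding and common sense of human cognition, which makes it brittle. The paper argues that the once-apt "stochastic parrot" metaphor no longer applies, because FMs exhibit a new form of reasoning that differs from human symbolic reasoning. The key is to rethink what reasoning is and which conditions it requires. On that basis, the paper aims to inform safer and more robust defences against the risks that arise from FM reasoning's lack of common sense and its dependence on context.

Link: https://arxiv.org/abs/2601.02043

Authors: Hendrik Kempt,Alon Lavie

Affiliations: Unknown

Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: 21 pages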

Abstract:Reasoning has long been understood as a pathway between stages of understanding. Proper reasoning leads to understanding of a given subject. This reasoning was conceptualized as a process of understanding in a particular way, i.e., “symbolic reasoning”. Foundational Models (FM) demonstrate that this is not a necessary condition for many reasoning tasks: they can “reason” by way of imitating the process of “thinking out loud”, testing the produced pathways, and iterating on these pathways on their own. This leads to some form of reasoning that can solve problems on its own or with few-shot learning, but appears fundamentally different from human reasoning due to its lack of grounding and common sense, leading to brittleness of the reasoning process. These insights promise to substantially alter our assessment of reasoning and its necessary conditions, but also inform the approaches to safety and robust defences against this brittleness of FMs. This paper offers and discusses several philosophical interpretations of this phenomenon, argues that the previously apt metaphor of the “stochastic parrot” has lost its relevance and thus should be abandoned, and reflects on different normative elements in the safety- and appropriateness-considerations emerging from these reasoning models and their growing capacity.

[NLP-19] Output Embedding Centering for Stable LLM Pretraining

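【Quick Read】: This paper addresses output logit divergence, a common training instability in large language model pretraining that arises with large learning rates. The most widely used mitigation, z-loss, treats only the symptoms rather than the root cause, so it cannot reliably ensure training stability or robustness to the learning rate. The paper analyzes the instability from the perspective of the geometry of the output embeddings and proposes Output Embedding Centering (OEC). OEC suppresses logit divergence by centering the output embedding vectors, either through a deterministic operation (μ-centering) or through a regularizer (μ-loss). Experiments show that OEC outperforms z-loss in training stability and learning-rate sensitivity. In particular, it still ensures convergence at large learning rates where z-loss fails, and μ-loss is much less sensitive to hyperparameter tuning than z-loss.

Link: https://arxiv.org/abs/2601.02031

Authors: Felix Stollenwerk,Anna Lokrantz,Niclas Hertzberg

Affiliations: AI Sweden

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: 11 pages, 5 figures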

Abstract:Pretraining of large language models is not only expensive but also prone to certain training instabilities. A specific instability that often occurs for large learning rates at the end of training is output logit divergence. The most widely used mitigation strategy, z-loss, merely addresses the symptoms rather than the underlying cause of the problem. In this paper, we analyze the instability from the perspective of the output embeddings’ geometry and identify its cause. Based on this, we propose output embedding centering (OEC) as a new mitigation strategy, and prove that it suppresses output logit divergence. OEC can be implemented in two different ways, as a deterministic operation called μ-centering, or a regularization method called μ-loss. Our experiments show that both variants outperform z-loss in terms of training stability and learning rate sensitivity. In particular, they ensure that training converges even for large learning rates when z-loss fails. Furthermore, we find that μ-loss is significantly less sensitive to regularization hyperparameter tuning than z-loss.
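A small numeric check shows why centering the output embeddings leaves the softmax unchanged while constraining the logits. It assumes logits are computed as `z_i = h · e_i` with no bias term. Subtracting the mean output embedding μ from every row shifts all logits by the same amount `h · μ`, so the probabilities are identical while the centered logits always average to zero. The μ-loss shown here, a weighted squared norm of μ, is an assumed form for illustration; the paper's exact definition may differ.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def mean_embedding(E: List[Vector]) -> Vector:
    # mu: average of the output (unembedding) vectors over the vocabulary.
    return [sum(col) / len(E) for col in zip(*E)]


def mu_center(E: List[Vector]) -> List[Vector]:
    # Deterministic variant: subtract mu from every output embedding.
    mu = mean_embedding(E)
    return [[x - m for x, m in zip(row, mu)] for row in E]


def mu_loss(E: List[Vector], weight: float = 1e-4) -> float:
    # Regularizer variant (assumed form): penalize the squared norm of mu.
    mu = mean_embedding(E)
    return weight * dot(mu, mu)


def softmax(z: Vector) -> Vector:
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [v / total for v in exps]


E = [[2.0, 1.0], [3.0, 0.5], [2.5, 2.0]]  # toy vocabulary of 3 tokens, d = 2
h = [1.5, -0.5]                            # final hidden state

z = [dot(h, e) for e in E]                 # original logits
z_c = [dot(h, e) for e in mu_center(E)]    # logits after mu-centering
print(sum(z_c) / len(z_c))                 # ~0: centered logits average to zero
print(softmax(z), softmax(z_c))            # same distribution up to rounding
```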

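[NLP-20] Not All Needles Are Found: How Fact Distribution and Don't Make It Up Prompts Shape Literal Extraction, Logical Inference, and Hallucination Risks in Long-Context LLMs

【Quick Read】: This paper studies why large language models (LLMs) extract and reason over information unreliably in very long input contexts. Performance drops markedly when facts are sparsely distributed or when their position in the document shifts. The core challenge is that, although LLMs support long contexts, their actual behavior depends heavily on where facts are placed, how facts are distributed across the corpus, and whether "Don't Make It Up" prompts are used to suppress hallucination. The key contribution is an extended needle-in-a-haystack benchmark that covers four production-scale models (Gemini-2.5-flash, ChatGPT-5-mini, Claude-4.5-haiku, and Deepseek-v3.2-chat). It evaluates literal extraction, logical inference, and hallucination risk separately. The study finds that longer contexts alone do not improve performance and can hurt it when evidence is diluted. Anti-hallucination prompts reduce fabrication, but they can make models overly conservative and lower their accuracy. The central insight is that effective context utilization, not raw context length, determines reliability. This matters for enterprise workflows that paste large volumes of unfiltered documents directly into prompts.

Link: https://arxiv.org/abs/2601.02023

Authors: Amirali Ebrahimzadeh,Seyyed M. Salili

Affiliations: University of Michigan, Ann Arbor, MI 48109, USA

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: 25 pages, 8 figures, 3 tables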

Abstract:Large language models (LLMs) increasingly support very long input contexts. Yet it remains unclear how reliably they extract and infer information at scale. Performance varies with context length and strongly interacts with how information is distributed in real-world corpora. Motivated by these observations, we study how fact placement, corpus-level fact distributions, and Don’t Make It Up prompts influence model behavior. We introduce an extended needle-in-a-haystack benchmark across four production-scale models: Gemini-2.5-flash, ChatGPT-5-mini, Claude-4.5-haiku, and Deepseek-v3.2-chat. Unlike prior work, we separately evaluate literal extraction, logical inference, and hallucination risk. Our study considers both positional effects and realistic distributions of evidence across long contexts, as well as prompts that explicitly discourage fabrication. We find that longer contexts alone do not guarantee better performance and can be detrimental when relevant evidence is diluted or widely dispersed. Performance varies substantially across models: some show severe degradation under realistic conditions, while others remain more robust at longer context lengths. Anti-hallucination (AH) instructions can make some models overly conservative, sharply reducing accuracy in literal extraction and logical inference. While we do not directly compare retrieval-augmented generation (RAG) and cache-augmented generation (CAG), our results suggest many failures stem from ineffective context utilization. Models often struggle to identify and prioritize relevant information even when it is present. These findings have direct practical implications, as enterprise workflows increasingly involve pasting large volumes of unfiltered documents into LLM prompts. Effective context length and model-specific robustness to long contexts are therefore critical for reliable LLM deployment in research and business.
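The positional side of a needle-in-a-haystack benchmark comes down to inserting known facts at controlled relative depths in filler text. The sketch below uses hypothetical `insert_needle` and `spread_needles` helpers that operate at sentence granularity. It illustrates only the placement step and does not reproduce the paper's corpus-level fact distributions, question types, or prompts.

```python
from typing import List


def insert_needle(filler: List[str], needle: str, depth: float) -> List[str]:
    """Insert one fact at a relative depth in [0, 1] (0 = start, 1 = end)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    pos = round(depth * len(filler))
    return filler[:pos] + [needle] + filler[pos:]


def spread_needles(filler: List[str], needles: List[str]) -> List[str]:
    """Disperse several facts evenly, mimicking evidence spread across a long context."""
    doc = list(filler)
    k = len(needles)
    # Insert from the deepest position first so earlier indices stay valid.
    for i in reversed(range(k)):
        pos = round((i + 1) / (k + 1) * len(filler))
        doc.insert(pos, needles[i])
    return doc


haystack = [f"Filler sentence {i}." for i in range(10)]
doc = insert_needle(haystack, "The vault code is 4172.", depth=0.5)
print(doc.index("The vault code is 4172."))  # 5
```

Sweeping `depth` probes positional effects for a single fact, while `spread_needles` approximates the dispersed-evidence condition in which the abstract reports degradation.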

[NLP-21] Surprisal and Metaphor Novelty: Moderate Correlations and Divergent Scaling Effects EACL2026

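【Quick Read】: This paper asks whether the predictive uncertainty of language models (LMs) on novel metaphors, measured as surprisal, reflects human annotations of metaphor novelty. The approach compares surprisal from 16 LM variants of different sizes with human novelty scores on corpus-based and synthetic metaphor novelty datasets. It also introduces a cloze-style surprisal method that conditions on the full sentence context. The results reveal the limits of surprisal as a measure of metaphor novelty and a scaling relationship that diverges across datasets. On corpus-based data, the correlation weakens as models grow (an inverse scaling effect). On synthetic data, it strengthens, consistent with the Quality-Power Hypothesis.

Link: https://arxiv.org/abs/2601.02015

Authors: Omar Momen,Emilie Sitter,Berenike Herrmann,Sina Zarrieß

Affiliations: Bielefeld University; CRC 1646 – Linguistic Creativity in Communication

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Theory (cs.IT)

Comments: To be published at the EACL 2026 main conference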

Abstract:Novel metaphor comprehension involves complex semantic processes and linguistic creativity, making it an interesting task for studying language models (LMs). This study investigates whether surprisal, a probabilistic measure of predictability in LMs, correlates with different metaphor novelty datasets. We analyse surprisal from 16 LM variants on corpus-based and synthetic metaphor novelty datasets. We explore a cloze-style surprisal method that conditions on full-sentence context. Results show that LMs yield significant moderate correlations with scores/labels of metaphor novelty. We further identify divergent scaling patterns: on corpus-based data, correlation strength decreases with model size (inverse scaling effect), whereas on synthetic data it increases (Quality-Power Hypothesis). We conclude that while surprisal can partially account for annotations of metaphor novelty, it remains a limited metric of linguistic creativity.
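Surprisal is the negative log-probability of a word given its context, -log2 p(w | context), measured in bits. The sketch below computes it from a toy probability table that stands in for an LM's conditional distribution, then correlates it with hypothetical novelty ratings using Pearson's r. All numbers are invented for illustration, and the paper's cloze-style variant, which conditions on the full sentence, is not reproduced here.

```python
import math
from typing import Dict, List


def surprisal(word: str, dist: Dict[str, float]) -> float:
    """Surprisal in bits: -log2 p(word | context)."""
    return -math.log2(dist[word])


def pearson(x: List[float], y: List[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Toy next-word distribution after "Time is a ..." (illustrative numbers only).
dist = {"thief": 0.25, "river": 0.125, "healer": 0.0625}
s = [surprisal(w, dist) for w in ["thief", "river", "healer"]]  # [2.0, 3.0, 4.0]
novelty = [1.0, 2.5, 3.0]  # hypothetical human novelty ratings
print(pearson(s, novelty))
```

Because each halving of probability adds one bit, rarer continuations score as more surprising. The paper's finding of only moderate correlations means that this ordering agrees with human novelty judgments only partly.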

[NLP-22] Exploring Approaches for Detecting Memorization of Recommender System Data in Large Language Models

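【Quick Read】: This paper addresses data-leakage concerns when large language models (LLMs) are used for recommendation. Specifically, it asks whether a model has memorized a given dataset (e.g., MovieLens-1M) and how such memorized content can be detected and extracted automatically. The approach systematically evaluates three strategies: (i) jailbreak prompt engineering; (ii) unsupervised latent knowledge discovery from internal activations, using Contrast-Consistent Search (CCS) and Cluster-Norm; and (iii) Automatic Prompt Engineering (APE), which frames prompt discovery as a meta-learning process that iteratively refines candidate instructions. The experiments indicate that APE is the most promising approach for automatically extracting memorized samples.

Link: https://arxiv.org/abs/2601.02002

Authors: Antonio Colacicco,Vito Guida,Dario Di Palma,Fedelucio Narducci,Tommaso Di Noia

Affiliations: Politecnico di Bari

Categories: Information Retrieval (cs.IR); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: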

Abstract:Large Language Models (LLMs) are increasingly applied in recommendation scenarios due to their strong natural language understanding and generation capabilities. However, they are trained on vast corpora whose contents are not publicly disclosed, raising concerns about data leakage. Recent work has shown that the MovieLens-1M dataset is memorized by both the LLaMA and OpenAI model families, but the extraction of such memorized data has so far relied exclusively on manual prompt engineering. In this paper, we pose three main questions: Is it possible to enhance manual prompting? Can LLM memorization be detected through methods beyond manual prompting? And can the detection of data leakage be automated? To address these questions, we evaluate three approaches: (i) jailbreak prompt engineering; (ii) unsupervised latent knowledge discovery, probing internal activations via Contrast-Consistent Search (CCS) and Cluster-Norm; and (iii) Automatic Prompt Engineering (APE), which frames prompt discovery as a meta-learning process that iteratively refines candidate instructions. Experiments on MovieLens-1M using LLaMA models show that jailbreak prompting does not improve the retrieval of memorized items and remains inconsistent; CCS reliably distinguishes genuine from fabricated movie titles but fails on numerical user and rating data; and APE retrieves item-level information with moderate success yet struggles to recover numerical interactions. These findings suggest that automatically optimizing prompts is the most promising strategy for extracting memorized samples.
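Contrast-Consistent Search, as introduced by Burns et al., trains a probe on a statement and its negation so that the two probabilities are consistent (they sum to about 1) and confident (not both near 0.5). The sketch below shows that unsupervised objective for a single contrast pair. In a memorization setting, one could pair a claim that a title is a genuine dataset movie with its negation; that framing is an assumption here. In practice the probabilities come from a probe on normalized hidden states, and the loss is averaged over many pairs. Both steps are omitted.

```python
def ccs_loss(p_pos: float, p_neg: float) -> float:
    """CCS objective for one contrast pair (statement x+ and its negation x-).

    p_pos: probe's probability that x+ is true
    p_neg: probe's probability that x- is true
    """
    consistency = (p_pos - (1.0 - p_neg)) ** 2  # p(x+) should equal 1 - p(x-)
    confidence = min(p_pos, p_neg) ** 2         # penalize the degenerate 0.5 / 0.5 answer
    return consistency + confidence


def ccs_truth_score(p_pos: float, p_neg: float) -> float:
    # Combine both views into one score in [0, 1]; above 0.5 reads as "true".
    return 0.5 * (p_pos + (1.0 - p_neg))


# A consistent, confident pair scores far lower than an undecided one.
print(ccs_loss(0.9, 0.1), ccs_loss(0.5, 0.5))
```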

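[NLP-23] Exploring Diversity, Novelty, and Popularity Bias in ChatGPT's Recommendations

【Quick Read】: This paper addresses the lack of systematic evaluation of non-accuracy metrics (diversity, novelty, and popularity bias) when generative AI such as ChatGPT is applied to recommender systems (RSs). Prior work has focused mainly on recommendation accuracy and has overlooked these dimensions, even though they matter for long-term personalization and user experience. The approach evaluates ChatGPT-3.5 and ChatGPT-4 on multiple datasets in Top-N recommendation and cold-start scenarios and quantifies their diversity, novelty, and popularity bias. ChatGPT-4 matches or surpasses traditional recommendation algorithms in balancing novelty and diversity. In the cold-start scenario, ChatGPT models achieve higher accuracy and novelty, which suggests they can improve user satisfaction and help with recommendations for new users.

Link: https://arxiv.org/abs/2601.01997

Authors: Dario Di Palma,Giovanni Maria Biancofiore,Vito Walter Anelli,Fedelucio Narducci,Tommaso Di Noia

Affiliations: Politecnico di Bari

Categories: Information Retrieval (cs.IR); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: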

Abstract:ChatGPT has emerged as a versatile tool, demonstrating capabilities across diverse domains. Given these successes, the Recommender Systems (RSs) community has begun investigating its applications within recommendation scenarios primarily focusing on accuracy. While the integration of ChatGPT into RSs has garnered significant attention, a comprehensive analysis of its performance across various dimensions remains largely unexplored. Specifically, the capabilities of providing diverse and novel recommendations or exploring potential biases such as popularity bias have not been thoroughly examined. As the use of these models continues to expand, understanding these aspects is crucial for enhancing user satisfaction and achieving long-term personalization. This study investigates the recommendations provided by ChatGPT-3.5 and ChatGPT-4 by assessing ChatGPT’s capabilities in terms of diversity, novelty, and popularity bias. We evaluate these models on three distinct datasets and assess their performance in Top-N recommendation and cold-start scenarios. The findings reveal that ChatGPT-4 matches or surpasses traditional recommenders, demonstrating the ability to balance novelty and diversity in recommendations. Furthermore, in the cold-start scenario, ChatGPT models exhibit superior performance in both accuracy and novelty, suggesting they can be particularly beneficial for new users. This research highlights the strengths and limitations of ChatGPT’s recommendations, offering new perspectives on the capacity of these models to provide recommendations beyond accuracy-focused metrics.
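Beyond-accuracy metrics have several standard definitions. The sketch below uses common forms that may differ from the exact formulas in the paper. Intra-list diversity is the mean pairwise Jaccard distance between item genre sets. Novelty is the mean self-information, -log2(popularity), of recommended items. Popularity bias is measured as the mean popularity (fraction of users who interacted) of recommended items. The item data are invented for illustration.

```python
import math
from itertools import combinations
from typing import Dict, List, Set


def jaccard_distance(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return 1.0 - len(a & b) / len(union) if union else 0.0


def intra_list_diversity(recs: List[str], genres: Dict[str, Set[str]]) -> float:
    pairs = list(combinations(recs, 2))
    if not pairs:
        return 0.0
    return sum(jaccard_distance(genres[i], genres[j]) for i, j in pairs) / len(pairs)


def novelty(recs: List[str], popularity: Dict[str, float]) -> float:
    # popularity[i] = fraction of users who interacted with item i, in (0, 1].
    return sum(-math.log2(popularity[i]) for i in recs) / len(recs)


def average_popularity(recs: List[str], popularity: Dict[str, float]) -> float:
    # Higher values indicate a stronger tilt toward already-popular items.
    return sum(popularity[i] for i in recs) / len(recs)


genres = {"a": {"drama"}, "b": {"drama", "crime"}, "c": {"comedy"}}
popularity = {"a": 0.5, "b": 0.25, "c": 0.125}
recs = ["a", "b", "c"]
print(intra_list_diversity(recs, genres), novelty(recs, popularity),
      average_popularity(recs, popularity))
```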

[NLP-24] Hidden State Poisoning Attacks against Mamba-based Language Models ACL2026

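【Quick Read】: This paper studies the adversarial robustness of state space models (SSMs). It focuses on a new attack, the Hidden State Poisoning Attack (HiSPA), in which specific short phrases irreversibly overwrite information in a model's hidden states and induce a partial amnesia effect. The key finding is how damaging HiSPAs are to SSMs. On the RoBench25 benchmark, even a recent 52B-parameter hybrid SSM-Transformer model from the Jamba family collapses under optimized HiSPA triggers, and the same triggers also weaken it on the Open-Prompt-Injections benchmark, whereas pure Transformers do not collapse. An interpretability analysis further reveals regular patterns in Mamba's hidden layers during HiSPAs, which provides a basis for building targeted mitigations.

Link: https://arxiv.org/abs/2601.01972

Authors: Alexandre Le Mercier,Chris Develder,Thomas Demeester

Affiliations: IDLab–T2K, Ghent University–imec

Categories: Computation and Language (cs.CL)

Comments: 17 pages, 4 figures. Submitted to ACL 2026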

Abstract:State space models (SSMs) like Mamba offer efficient alternatives to Transformer-based language models, with linear time complexity. Yet, their adversarial robustness remains critically unexplored. This paper studies the phenomenon whereby specific short input phrases induce a partial amnesia effect in such models, by irreversibly overwriting information in their hidden states, referred to as a Hidden State Poisoning Attack (HiSPA). Our benchmark RoBench25 allows evaluating a model’s information retrieval capabilities when subject to HiSPAs, and confirms the vulnerability of SSMs against such attacks. Even a recent 52B hybrid SSM-Transformer model from the Jamba family collapses on RoBench25 under optimized HiSPA triggers, whereas pure Transformers do not. We also observe that HiSPA triggers significantly weaken the Jamba model on the popular Open-Prompt-Injections benchmark, unlike pure Transformers. Finally, our interpretability study reveals patterns in Mamba’s hidden layers during HiSPAs that could be used to build a HiSPA mitigation system. The full code and data to reproduce the experiments can be found at this https URL.
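A toy example shows why an input-dependent state update can erase earlier information. Consider a scalar, heavily simplified selective recurrence in the spirit of Mamba's discretization: h_t = exp(-Δ_t·a)·h_{t-1} + Δ_t·x_t with a > 0. When an input drives the step size Δ_t up, the decay factor approaches 0 and the previous state is essentially overwritten. This illustrates the general mechanism with invented numbers. It is not the paper's attack or Mamba's full multi-channel update, where Δ_t is computed from the input by learned projections.

```python
import math
from typing import List, Tuple


def run_scan(inputs: List[Tuple[float, float]], a: float = 1.0) -> List[float]:
    """Scalar selective recurrence h_t = exp(-delta_t * a) * h_{t-1} + delta_t * x_t.

    Each input is a pair (x_t, delta_t); here delta_t is given directly
    rather than computed from the token.
    """
    h, states = 0.0, []
    for x, delta in inputs:
        h = math.exp(-delta * a) * h + delta * x
        states.append(h)
    return states


# Store a "fact" (x = 1.0), then feed neutral tokens with small step sizes.
benign = [(1.0, 1.0)] + [(0.0, 0.05)] * 3
# Same sequence, but one token with a huge step size acts like a poisoning trigger.
triggered = [(1.0, 1.0), (0.0, 0.05), (0.0, 20.0), (0.0, 0.05)]

print(run_scan(benign)[-1], run_scan(triggered)[-1])
```

In the benign run, the stored value decays only slightly (to about exp(-0.15)). After the trigger, the stored value shrinks by roughly nine orders of magnitude and cannot be recovered from later states.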

[NLP-25] CSF: Contrastive Semantic Features for Direct Multilingual Sign Language Generation

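【Quick Read】: This paper addresses the barrier that non-English speakers in the deaf community face because existing sign language translation systems typically rely on English as an intermediary language. The solution is Canonical Semantic Form (CSF), a language-agnostic semantic representation. CSF decomposes an utterance into nine universal semantic slots (event, intent, time, condition, agent, object, location, purpose, modifier) and uses a comprehensive taxonomy of 35 condition types, so that any source language can be translated directly into sign language without English mediation. A lightweight transformer-based extractor (0.74 MB) achieves 99.03% average slot-extraction accuracy across four languages (English, Vietnamese, Japanese, and French) and 99.4% accuracy on the 35-class condition classification. Its 3.02 ms inference latency on CPU enables real-time sign language generation in the browser.

Link: https://arxiv.org/abs/2601.01964

Authors: Tran Sy Bao

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: 9 pages, 8 tables, code available at this https URL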

Abstract:Sign language translation systems typically require English as an intermediary language, creating barriers for non-English speakers in the global deaf community. We present Canonical Semantic Form (CSF), a language-agnostic semantic representation framework that enables direct translation from any source language to sign language without English mediation. CSF decomposes utterances into nine universal semantic slots: event, intent, time, condition, agent, object, location, purpose, and modifier. A key contribution is our comprehensive condition taxonomy comprising 35 condition types across eight semantic categories, enabling nuanced representation of conditional expressions common in everyday communication. We train a lightweight transformer-based extractor (0.74 MB) that achieves 99.03% average slot extraction accuracy across four typologically diverse languages: English, Vietnamese, Japanese, and French. The model demonstrates particularly strong performance on condition classification (99.4% accuracy) despite the 35-class complexity. With inference latency of 3.02ms on CPU, our approach enables real-time sign language generation in browser-based applications. We release our code, trained models, and multilingual dataset to support further research in accessible sign language technology.
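The nine-slot representation maps naturally onto a simple record type. The sketch below models a CSF frame as a Python dataclass with optional string slots. The slot names come from the paper. The field types, the `filled()` helper, and the example values (including the `"weather:rain"` condition label) are assumptions for illustration and do not reflect the paper's 35-type condition taxonomy.

```python
from dataclasses import asdict, dataclass
from typing import Dict, Optional


@dataclass
class CanonicalSemanticForm:
    # The nine universal slots; None means the utterance does not fill that slot.
    event: Optional[str] = None
    intent: Optional[str] = None
    time: Optional[str] = None
    condition: Optional[str] = None
    agent: Optional[str] = None
    object: Optional[str] = None
    location: Optional[str] = None
    purpose: Optional[str] = None
    modifier: Optional[str] = None

    def filled(self) -> Dict[str, str]:
        """Return only the slots that carry content, in slot order."""
        return {k: v for k, v in asdict(self).items() if v is not None}


# "If it rains tomorrow, I will stay home." (hypothetical extraction result)
csf = CanonicalSemanticForm(
    event="stay",
    intent="statement",
    time="tomorrow",
    condition="weather:rain",
    agent="I",
    location="home",
)
print(csf.filled())
```

Because the frame carries no source-language words beyond the slot values, a Vietnamese or Japanese utterance with the same meaning would be expected to produce the same frame. This is what allows direct generation without an English pivot.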

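[NLP-26] The Invisible Hand of AI Libraries Shaping Open Source Projects and Communities

【Quick Read】: This paper addresses the lack of research on how artificial intelligence (AI) is adopted in open source software (OSS) projects and what effects that adoption has. In particular, it asks how integrating AI libraries into Python and Java OSS projects reshapes development practices, the technical ecosystem, and community engagement. The key is a planned large-scale analysis of 157.7k potential OSS repositories. Using repository-level and software metrics, it will compare projects that adopt AI libraries with those that do not in terms of development activity, community engagement, and code complexity. The aim is to provide empirical evidence on how AI integration reshapes software engineering practice.

Link: https://arxiv.org/abs/2601.01944

Authors: Matteo Esposito,Andrea Janes,Valentina Lenarduzzi,Davide Taibi

Affiliations: Unknown

Categories: Software Engineering (cs.SE); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Information Retrieval (cs.IR); Programming Languages (cs.PL)

Comments: Accepted registered report at SANER (CORE A*) 2026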

Abstract:In the early 1980s, Open Source Software emerged as a revolutionary concept amidst the dominance of proprietary software. What began as a revolutionary idea has now become the cornerstone of computer science. Amidst OSS projects, AI is increasing its presence and relevance. However, despite the growing popularity of AI, its adoption and impacts on OSS projects remain underexplored. We aim to assess the adoption of AI libraries in Python and Java OSS projects and examine how they shape development, including the technical ecosystem and community engagement. To this end, we will perform a large-scale analysis on 157.7k potential OSS repositories, employing repository metrics and software metrics to compare projects adopting AI libraries against those that do not. We expect to identify measurable differences in development activity, community engagement, and code complexity between OSS projects that adopt AI libraries and those that do not, offering evidence-based insights into how AI integration reshapes software development practices.

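[NLP-27] Tackling the Inherent Difficulty of Noise Filtering in RAG

【Quick Read】: This paper addresses the performance degradation, and even hallucination, that noisy or irrelevant documents cause in large language models (LLMs) within Retrieval-Augmented Generation (RAG) systems. Existing methods cannot filter out irrelevant content completely. Standard fine-tuning is also constrained by the structure of attention patterns, so it cannot effectively teach a model to use relevant information while ignoring irrelevant content. The key is a new fine-tuning method designed specifically to improve an LLM's ability to distinguish relevant from irrelevant information within retrieved documents. This significantly improves the model's robustness to noise and its overall performance.

Link: https://arxiv.org/abs/2601.01896

Authors: Jingyu Liu,Jiaen Lin,Yong Liu

Affiliations: Gaoling School of Artificial Intelligence, Renmin University of China; Beijing Key Laboratory of Big Data Management and Analysis Methods; School of Software, Tsinghua University

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: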

Abstract:Retrieval-Augmented Generation (RAG) has become a widely adopted approach to enhance Large Language Models (LLMs) by incorporating external knowledge and reducing hallucinations. However, noisy or irrelevant documents are often introduced during RAG, potentially degrading performance and even causing hallucinated outputs. While various methods have been proposed to filter out such noise, we argue that identifying irrelevant information from retrieved content is inherently difficult and limited number of transformer layers can hardly solve this. Consequently, retrievers fail to filter out irrelevant documents entirely. Therefore, LLMs must be robust against such noise, but we demonstrate that standard fine-tuning approaches are often ineffective in enabling the model to selectively utilize relevant information while ignoring irrelevant content due to the structural constraints of attention patterns. To address this, we propose a novel fine-tuning method designed to enhance the model’s ability to distinguish between relevant and irrelevant information within retrieved documents. Extensive experiments across multiple benchmarks show that our approach significantly improves the robustness and performance of LLMs.

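[NLP-28] Agentic Memory: Learning Unified Long-Term and Short-Term Memory Management for Large Language Model Agents

【Quick Read】: This paper addresses inefficient management of long-term memory (LTM) and short-term memory (STM) in large language model (LLM) agents, whose long-horizon reasoning is constrained by finite context windows. Existing methods treat LTM and STM as separate modules driven by heuristics or auxiliary controllers, which limits adaptability and end-to-end optimization. The solution is Agentic Memory (AgeMem), a unified framework that integrates LTM and STM management directly into the agent's policy. Memory operations (store, retrieve, update, summarize, discard) are exposed as tool-based actions, so the agent decides on its own when and how to manipulate memory. To handle the sparse and discontinuous rewards that memory operations induce, the paper also designs a three-stage progressive reinforcement learning strategy and a step-wise GRPO algorithm. Together, these yield more efficient, higher-quality long-horizon reasoning.

Link: https://arxiv.org/abs/2601.01885

Authors: Yi Yu,Liuyi Yao,Yuexiang Xie,Qingquan Tan,Jiaqi Feng,Yaliang Li,Libing Wu

Affiliations: Alibaba Group; School of Cyber Science and Engineering, Wuhan University

Categories: Computation and Language (cs.CL)

Comments: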

Abstract:Large language model (LLM) agents face fundamental limitations in long-horizon reasoning due to finite context windows, making effective memory management critical. Existing methods typically handle long-term memory (LTM) and short-term memory (STM) as separate components, relying on heuristics or auxiliary controllers, which limits adaptability and end-to-end optimization. In this paper, we propose Agentic Memory (AgeMem), a unified framework that integrates LTM and STM management directly into the agent’s policy. AgeMem exposes memory operations as tool-based actions, enabling the LLM agent to autonomously decide what and when to store, retrieve, update, summarize, or discard information. To train such unified behaviors, we propose a three-stage progressive reinforcement learning strategy and design a step-wise GRPO to address sparse and discontinuous rewards induced by memory operations. Experiments on five long-horizon benchmarks demonstrate that AgeMem consistently outperforms strong memory-augmented baselines across multiple LLM backbones, achieving improved task performance, higher-quality long-term memory, and more efficient context usage.
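Exposing memory operations as tool-based actions can be illustrated with a small dispatcher that an agent policy would call by name. The five operation names follow the abstract. The class itself, its method signatures, the substring-matching `retrieve`, and the truncation-based `summarize` are hypothetical placeholders. The paper's actual tool schema and its RL training (three-stage curriculum, step-wise GRPO) are not shown.

```python
from typing import Dict, List


class MemoryTools:
    """Toy memory with a long-term key-value store and a short-term context list."""

    ACTIONS = {"store", "retrieve", "update", "summarize", "discard"}

    def __init__(self) -> None:
        self.ltm: Dict[str, str] = {}  # long-term memory
        self.stm: List[str] = []       # short-term (in-context) memory

    def store(self, key: str, text: str) -> str:
        self.ltm[key] = text
        return f"stored {key}"

    def retrieve(self, query: str) -> str:
        # Placeholder retrieval: return entries whose text contains the query.
        hits = [v for v in self.ltm.values() if query.lower() in v.lower()]
        return "\n".join(hits)

    def update(self, key: str, text: str) -> str:
        if key not in self.ltm:
            return f"missing {key}"
        self.ltm[key] = text
        return f"updated {key}"

    def summarize(self, max_chars: int = 40) -> str:
        # Placeholder for an LLM summary: collapse short-term context into one entry.
        joined = " | ".join(self.stm)
        self.stm = [joined[:max_chars]]
        return self.stm[0]

    def discard(self, key: str) -> str:
        return f"discarded {key}" if self.ltm.pop(key, None) is not None else f"missing {key}"

    def call(self, action: str, **kwargs: str) -> str:
        """Dispatch a tool call emitted by the policy, e.g. action="store"."""
        if action not in self.ACTIONS:
            raise ValueError(f"unknown memory action: {action}")
        return getattr(self, action)(**kwargs)


mem = MemoryTools()
mem.call("store", key="pref", text="User prefers vegetarian recipes")
mem.call("update", key="pref", text="User prefers vegan recipes")
print(mem.call("retrieve", query="vegan"))
```

Routing every operation through a single `call` entry point mirrors the idea that memory management becomes part of the action space the policy is trained on, rather than a separate controller.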

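[NLP-29] DermoGPT: Open Weights and Open Data for Morphology-Grounded Dermatological Reasoning MLLMs

【Quick Read】: This paper addresses three bottlenecks that hold back multimodal large language models (MLLMs) in dermatology: scarce training data, narrow task coverage, and a lack of supervision grounded in expert diagnostic workflows. The solution is a framework with three components. First, DermoInstruct is a large-scale, morphology-anchored instruction corpus of 211,243 images and 772,675 diagnostic trajectories that covers the full pipeline from morphological observation to final diagnosis. Second, DermoBench is a benchmark of 11 tasks across four clinical axes (morphology, diagnosis, reasoning, and fairness), including 3,600 expert-verified open-ended instances and human performance baselines. Third, DermoGPT is trained with supervised fine-tuning followed by the Morphologically-Anchored Visual-Inference-Consistent (MAVIC) reinforcement learning objective, which enforces consistency between visual observations and diagnostic conclusions. At inference time, Confidence-Consistency Test-time adaptation (CCT) further improves robustness. DermoGPT outperforms 16 representative baselines across all axes.

Link: https://arxiv.org/abs/2601.01868

Authors: Jinghan Ru,Siyuan Yan,Yuguo Yin,Yuexian Zou,Zongyuan Ge

Affiliations: Peking University; Monash University

Categories: Computation and Language (cs.CL)

Comments: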

Abstract:Multimodal Large Language Models (MLLMs) show promise for medical applications, yet progress in dermatology lags due to limited training data, narrow task coverage, and lack of clinically-grounded supervision that mirrors expert diagnostic workflows. We present a comprehensive framework to address these gaps. First, we introduce DermoInstruct, a large-scale morphology-anchored instruction corpus comprising 211,243 images and 772,675 trajectories across five task formats, capturing the complete diagnostic pipeline from morphological observation and clinical reasoning to final diagnosis. Second, we establish DermoBench, a rigorous benchmark evaluating 11 tasks across four clinical axes: Morphology, Diagnosis, Reasoning, and Fairness, including a challenging subset of 3,600 expert-verified open-ended instances and human performance baselines. Third, we develop DermoGPT, a dermatology reasoning MLLM trained via supervised fine-tuning followed by our Morphologically-Anchored Visual-Inference-Consistent (MAVIC) reinforcement learning objective, which enforces consistency between visual observations and diagnostic conclusions. At inference, we deploy Confidence-Consistency Test-time adaptation (CCT) for robust predictions. Experiments show DermoGPT significantly outperforms 16 representative baselines across all axes, achieving state-of-the-art performance while substantially narrowing the human-AI gap. DermoInstruct, DermoBench and DermoGPT will be made publicly available at this https URL upon acceptance.

[NLP-30] Judging with Personality and Confidence: A Study on Personality-Conditioned LLM Relevance Assessment

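【Quick Read】: This paper studies relevance judgment and confidence calibration by generative AI in web search. Specifically, it asks whether prompting large language models (LLMs) to simulate Big Five personality traits changes the reliability of their decisions. Prior work shows that prompting can make LLMs simulate specific personality traits, but little is known about how this affects key search tasks such as relevance assessment and confidence calibration. The key step is to use the relevance scores and confidence distributions produced under different personality conditions as features for a random forest classifier. Even with limited training data, this classifier outperforms the best single-personality condition. The result shows that personality-derived confidence is a complementary predictive signal and points toward more reliable, human-aligned LLM evaluators.

Link: https://arxiv.org/abs/2601.01862

Authors: Nuo Chen,Hanpei Fang,Piaohong Wang,Jiqun Liu,Tetsuya Sakai,Xiao-Ming Wu

Affiliations: The Hong Kong Polytechnic University; Waseda University; City University of Hong Kong; The University of Oklahoma

Categories: Computation and Language (cs.CL); Information Retrieval (cs.IR)

Comments: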

Abstract:Recent studies have shown that prompting can enable large language models (LLMs) to simulate specific personality traits and produce behaviors that align with those traits. However, there is limited understanding of how these simulated personalities influence critical web search decisions, specifically relevance assessment. Moreover, few studies have examined how simulated personalities impact confidence calibration, specifically the tendencies toward overconfidence or underconfidence. This gap exists even though psychological literature suggests these biases are trait-specific, often linking high extraversion to overconfidence and high neuroticism to underconfidence. To address this gap, we conducted a comprehensive study evaluating multiple LLMs, including commercial models and open-source models, prompted to simulate Big Five personality traits. We tested these models across three test collections (TREC DL 2019, TREC DL 2020, and LLMJudge), collecting two key outputs for each query-document pair: a relevance judgment and a self-reported confidence score. The findings show that personalities such as low agreeableness consistently align more closely with human labels than the unprompted condition. Additionally, low conscientiousness performs well in balancing the suppression of both overconfidence and underconfidence. We also observe that relevance scores and confidence distributions vary systematically across different personalities. Based on the above findings, we incorporate personality-conditioned scores and confidence as features in a random forest classifier. This approach achieves performance that surpasses the best single-personality condition on a new dataset (TREC DL 2021), even with limited training data. These findings highlight that personality-derived confidence offers a complementary predictive signal, paving the way for more reliable and human-aligned LLM evaluators. 

[NLP-31] Towards Automated Lexicography: Generating and Evaluating Definitions for Learner's Dictionaries

【Quick Read】: This paper addresses learner's dictionary definition generation (LDDG): automatically producing concise, easy-to-understand definitions of headwords to reduce the cost of writing them by hand. The core challenge is keeping definitions semantically accurate while restricting them to the simple vocabulary that language learners need. The solution is an iterative simplification approach in which an LLM progressively rewrites a definition to lower its lexical complexity while preserving its meaning. The authors also build a Japanese dataset in collaboration with a professional lexicographer. They design an LLM-as-a-judge evaluation framework whose judgments agree reasonably well with human annotators, which supports systematic study and validation of LDDG.

Link: https://arxiv.org/abs/2601.01842

Authors: Yusuke Ide,Adam Nohejl,Joshua Tanner,Hitomi Yanaka,Christopher Lindsay,Taro Watanabe

Affiliations: Nara Institute of Science and Technology; RIKEN; Resolve Research; The University of Tokyo; Tohoku University; Serpenti Sei Japan

Categories: Computation and Language (cs.CL)

Comments:

Abstract:We study dictionary definition generation (DDG), i.e., the generation of non-contextualized definitions for given headwords. Dictionary definitions are an essential resource for learning word senses, but manually creating them is costly, which motivates us to automate the process. Specifically, we address learner’s dictionary definition generation (LDDG), where definitions should consist of simple words. First, we introduce a reliable evaluation approach for DDG, based on our new evaluation criteria and powered by an LLM-as-a-judge. To provide reference definitions for the evaluation, we also construct a Japanese dataset in collaboration with a professional lexicographer. Validation results demonstrate that our evaluation approach agrees reasonably well with human annotators. Second, we propose an LDDG approach via iterative simplification with an LLM. Experimental results indicate that definitions generated by our approach achieve high scores on our criteria while maintaining lexical simplicity.
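The iterative-simplification loop can be sketched as follows. The loop checks whether a definition uses only words from a learner vocabulary and, if it does not, asks a rewriter to simplify it, up to a fixed number of rounds. Here `toy_rewriter` is a hypothetical stand-in for the LLM call (a one-word substitution per round), and `SIMPLE_VOCAB` is an invented English word list. The paper's prompts, stopping criterion, and meaning-preservation checks, as well as its Japanese setting, are not reproduced.

```python
import re
from typing import Callable, Set

# Invented learner vocabulary for the example.
SIMPLE_VOCAB: Set[str] = {"a", "an", "the", "to", "of", "very", "strong",
                          "want", "have", "something", "feeling"}


def complex_words(definition: str, vocab: Set[str]) -> Set[str]:
    words = re.findall(r"[a-z]+", definition.lower())
    return {w for w in words if w not in vocab}


def iterative_simplify(definition: str, simplify_once: Callable[[str, Set[str]], str],
                       vocab: Set[str] = SIMPLE_VOCAB, max_rounds: int = 5) -> str:
    for _ in range(max_rounds):
        hard = complex_words(definition, vocab)
        if not hard:
            break  # every word is already in the learner vocabulary
        definition = simplify_once(definition, hard)
    return definition


# Hypothetical rewriter: replace one flagged word per round.
SUBSTITUTES = {"intense": "strong", "desire": "want", "possess": "have"}


def toy_rewriter(text: str, hard: Set[str]) -> str:
    for word in sorted(hard):
        if word in SUBSTITUTES:
            return re.sub(rf"\b{word}\b", SUBSTITUTES[word], text)
    return text


print(iterative_simplify("a very intense desire to possess something", toy_rewriter))
```

A word that the rewriter cannot simplify stays in place until `max_rounds` is exhausted. A real system would need a separate check that each rewrite still preserves the original sense.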


[NLP-32] Emergent Introspective Awareness in Large Language Models

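【Quick Read】: This paper asks whether large language models can introspect on their own internal states. Because conversation alone cannot distinguish genuine self-awareness from confabulation, the author proposes an experimental method based on interventions in activation space: representations of known concepts are injected into the model's internal activations, and the effect of these manipulations on the model's self-reported states is measured. The key idea is to use controlled perturbations of neural activations to test whether the model can notice and report the injected content, which indirectly tests whether its internal states are perceptible and traceable by the model itself. Experiments show that some of the most capable models tested (such as Claude Opus 4 and 4.1) can, in certain scenarios, identify injected concepts, distinguish their own outputs from artificial prefills, and modulate their internal activations when instructed or incentivized to think about a concept. This indicates that current language models have some degree of functional introspective awareness, although the capacity remains highly unreliable and context-dependent.

Link: https://arxiv.org/abs/2601.01828

Authors: Jack Lindsey

Affiliations: Anthropic

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Notes: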

Abstract:We investigate whether large language models can introspect on their internal states. It is difficult to answer this question through conversation alone, as genuine introspection cannot be distinguished from confabulations. Here, we address this challenge by injecting representations of known concepts into a model’s activations, and measuring the influence of these manipulations on the model’s self-reported states. We find that models can, in certain scenarios, notice the presence of injected concepts and accurately identify them. Models demonstrate some ability to recall prior internal representations and distinguish them from raw text inputs. Strikingly, we find that some models can use their ability to recall prior intentions in order to distinguish their own outputs from artificial prefills. In all these experiments, Claude Opus 4 and 4.1, the most capable models we tested, generally demonstrate the greatest introspective awareness; however, trends across models are complex and sensitive to post-training strategies. Finally, we explore whether models can explicitly control their internal representations, finding that models can modulate their activations when instructed or incentivized to “think about” a concept. Overall, our results indicate that current language models possess some functional introspective awareness of their own internal states. We stress that in today’s models, this capacity is highly unreliable and context-dependent; however, it may continue to develop with further improvements to model capabilities.
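
The abstract does not say how concept representations are obtained or at which layer they are injected. A common recipe from the steering-vector literature, assumed here, is to take the difference between mean activations on concept-related and baseline inputs and add a scaled copy of it to the residual stream. The NumPy sketch below shows only that arithmetic on random toy arrays; in a real model the addition would happen inside the forward pass (for example through a PyTorch forward hook), after which the model is asked whether it notices anything unusual. All function names are hypothetical.

```python
import numpy as np

def concept_vector(concept_acts: np.ndarray, baseline_acts: np.ndarray) -> np.ndarray:
    """Mean activation on concept inputs minus mean on baseline inputs: (n, d) -> (d,)."""
    return concept_acts.mean(axis=0) - baseline_acts.mean(axis=0)

def inject(hidden: np.ndarray, vec: np.ndarray, strength: float) -> np.ndarray:
    """Add the scaled concept direction to every position of a (seq_len, d) residual stream."""
    return hidden + strength * vec

def concept_score(hidden: np.ndarray, vec: np.ndarray) -> np.ndarray:
    """Projection of each position onto the unit concept direction."""
    return hidden @ (vec / np.linalg.norm(vec))

rng = np.random.default_rng(0)
d = 16
direction = np.zeros(d)
direction[0] = 1.0
concept_acts = rng.normal(size=(8, d)) + 3.0 * direction  # toy "concept" activations
baseline_acts = rng.normal(size=(8, d))                    # toy baseline activations
vec = concept_vector(concept_acts, baseline_acts)

hidden = rng.normal(size=(5, d))  # toy residual stream at one layer
steered = inject(hidden, vec, strength=4.0)
# Injection shifts every position's score by exactly strength * ||vec||.
print(concept_score(steered, vec) - concept_score(hidden, vec))
```

The `strength` knob is what makes such experiments controllable: too small and the model cannot notice the injection, too large and it tends to derail generation entirely.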


[NLP-33] Aspect Extraction from E-Commerce Product and Service Reviews

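【Quick Read】: This paper tackles aspect extraction (AE) for aspect-based sentiment analysis in low-resource, code-switched settings such as Taglish (a mix of Tagalog and English common in Filipino e-commerce reviews), where AE has been difficult to apply. The key to the solution is a comprehensive AE pipeline that combines rule-based, large language model (LLM)-based, and fine-tuning techniques, together with a Hierarchical Aspect Framework (HAF) derived from multi-method topic modeling and a dual-mode tagging scheme that separates explicit from implicit aspects. Experiments show that the generative LLM approach (Gemini 2.0 Flash) achieves the best performance on all tasks (Macro F1 of 0.91) and is particularly strong on implicit aspects, while the fine-tuned Gemma-3 1B models are held back by dataset imbalance and limited capacity. This highlights the adaptability of generative AI in complex linguistic environments.

Link: https://arxiv.org/abs/2601.01827

Authors: Valiant Lance D. Dionela,Fatima Kriselle S. Dy,Robin James M. Hombrebueno,Aaron Rae M. Nicolas,Charibeth K. Cheng,Raphael W. Gonda

Affiliations: De La Salle University

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Notes: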

Abstract:Aspect Extraction (AE) is a key task in Aspect-Based Sentiment Analysis (ABSA), yet it remains difficult to apply in low-resource and code-switched contexts like Taglish, a mix of Tagalog and English commonly used in Filipino e-commerce reviews. This paper introduces a comprehensive AE pipeline designed for Taglish, combining rule-based, large language model (LLM)-based, and fine-tuning techniques to address both aspect identification and extraction. A Hierarchical Aspect Framework (HAF) is developed through multi-method topic modeling, along with a dual-mode tagging scheme for explicit and implicit aspects. For aspect identification, four distinct models are evaluated: a Rule-Based system, a Generative LLM (Gemini 2.0 Flash), and two Fine-Tuned Gemma-3 1B models trained on different datasets (Rule-Based vs. LLM-Annotated). Results indicate that the Generative LLM achieved the highest performance across all tasks (Macro F1 0.91), demonstrating superior capability in handling implicit aspects. In contrast, the fine-tuned models exhibited limited performance due to dataset imbalance and architectural capacity constraints. This work contributes a scalable and linguistically adaptive framework for enhancing ABSA in diverse, code-switched environments.
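
Macro F1, the metric reported above (0.91), averages per-class F1 scores with equal weight, so rare aspect categories count as much as frequent ones; this is one reason dataset imbalance hurts the fine-tuned models. A minimal sketch, assuming aspect identification is scored as single-label classification over aspect categories (the abstract does not state the exact protocol); the labels are illustrative. It follows the same definition as scikit-learn's `average="macro"`, taking the union of gold and predicted labels and scoring undefined precision or recall as 0.

```python
from typing import Sequence

def macro_f1(gold: Sequence[str], pred: Sequence[str]) -> float:
    """Unweighted mean of per-label F1 over the union of gold and predicted labels."""
    labels = sorted(set(gold) | set(pred))
    scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)

gold = ["price", "delivery", "price", "quality"]
pred = ["price", "price", "price", "quality"]
print(round(macro_f1(gold, pred), 3))  # 0.6  (delivery 0.0, price 0.8, quality 1.0)
```

Accuracy on the same toy data would be 0.75; macro F1 is lower because the single missed "delivery" example zeroes out an entire class.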


[NLP-34] CSCBench: A PVC Diagnostic Benchmark for Commodity Supply Chain Reasoning

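【Quick Read】: This paper addresses the under-explored reasoning ability of large language models (LLMs) in commodity supply chains (CSCs), a domain governed by institutional rule systems and feasibility constraints, where it is unclear how well LLMs perform in complex scenarios. The key contribution is CSCBench, a benchmark of 2.3K+ single-choice questions built on the three-axis PVC evaluation framework (Process, Variety, Cognition): the Process axis aligns tasks with SCOR+Enable process stages; the Variety axis captures commodity-specific rule systems under coupled material-information-financial constraints; and the Cognition axis designs multi-level reasoning tasks following Bloom's revised taxonomy. Evaluation under direct prompting shows that LLMs perform well on the Process and Cognition axes but degrade substantially on the Variety axis, especially on Freight Agreements tasks. CSCBench thus offers a quantifiable, structured tool for diagnosing and improving LLM reasoning in high-stakes supply-chain settings.

Link: https://arxiv.org/abs/2601.01825

Authors: Yaxin Cui,Yuanqiang Zeng,Jiapeng Yan,Keling Lin,Kai Ji,Jianhui Zeng,Sheng Zhang,Xin Luo,Binzhu Su,Chaolai Shen,Jiahao Yu

Affiliations: Xiamen SmartChain Innovations Co., Ltd.; Xiamen ITG Digital Technology Co., Ltd.; Xiamen C&D Co., Ltd.; Xiamen Xiangyu Co., Ltd.

Categories: Computation and Language (cs.CL)

Notes: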

Abstract:Large Language Models (LLMs) have achieved remarkable success in general benchmarks, yet their competence in commodity supply chains (CSCs) – a domain governed by institutional rule systems and feasibility constraints – remains under-explored. CSC decisions are shaped jointly by process stages (e.g., planning, procurement, delivery), variety-specific rules (e.g., contract specifications and delivery grades), and reasoning depth (from retrieval to multi-step analysis and decision selection). We introduce CSCBench, a 2.3K+ single-choice benchmark for CSC reasoning, instantiated through our PVC 3D Evaluation Framework (Process, Variety, and Cognition). The Process axis aligns tasks with SCOR+Enable; the Variety axis operationalizes commodity-specific rule systems under coupled material-information-financial constraints, grounded in authoritative exchange guidebooks/rulebooks and industry reports; and the Cognition axis follows Bloom’s revised taxonomy. Evaluating representative LLMs under a direct prompting setting, we observe strong performance on the Process and Cognition axes but substantial degradation on the Variety axis, especially on Freight Agreements. CSCBench provides a diagnostic yardstick for measuring and improving LLM capabilities in this high-stakes domain.
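
Because every CSCBench question is tagged along three axes, results can be sliced per axis, which is how a gap such as the one on Variety becomes visible. A minimal sketch of that breakdown; the `Item` schema, the commodity labels, and the toy predictions are hypothetical and do not reflect the benchmark's actual file format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

@dataclass(frozen=True)
class Item:
    process: str    # SCOR+Enable stage, e.g. "Plan", "Source", "Deliver"
    variety: str    # commodity-specific rule system (illustrative labels)
    cognition: str  # Bloom's revised taxonomy level, e.g. "Remember", "Analyze"
    answer: str     # gold option letter

def accuracy_by_axis(items: List[Item], predictions: List[str]) -> Dict[str, Dict[str, float]]:
    """Single-choice accuracy broken down along each of the three PVC axes."""
    report = {}
    for axis in ("process", "variety", "cognition"):
        hits: Dict[str, int] = defaultdict(int)
        totals: Dict[str, int] = defaultdict(int)
        for item, pred in zip(items, predictions):
            key = getattr(item, axis)
            totals[key] += 1
            hits[key] += int(pred == item.answer)
        report[axis] = {key: hits[key] / totals[key] for key in totals}
    return report

items = [
    Item("Plan", "Copper", "Remember", "A"),
    Item("Deliver", "Freight Agreement", "Analyze", "C"),
    Item("Source", "Freight Agreement", "Apply", "B"),
    Item("Plan", "Copper", "Analyze", "D"),
]
report = accuracy_by_axis(items, ["A", "B", "B", "D"])
print(report["variety"])  # {'Copper': 1.0, 'Freight Agreement': 0.5}
```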


[NLP-35] HyperCLOVA X 8B Omni

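【Quick Read】: This report addresses the fragmentation of multimodal systems into separate modality-specific pipelines, aiming instead for any-to-any omni interaction in which text, audio, and vision can each serve as input or output. The key to the solution is HyperCLOVA X 8B Omni, a single 8B-scale end-to-end generative AI model that handles interleaved multimodal sequences through a shared next-token prediction interface, while vision and audio encoders inject continuous embeddings for fine-grained understanding and cross-modal grounding. This lets one model support flexible combinations of modalities on both the input and the output side without relying on modality-specific pipelines.

Link: https://arxiv.org/abs/2601.01792

Authors: NAVER Cloud HyperCLOVA X Team

Affiliations: NAVER Cloud; HyperCLOVA X Team

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Sound (cs.SD)

Notes: Technical Report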

Abstract:In this report, we present HyperCLOVA X 8B Omni, the first any-to-any omnimodal model in the HyperCLOVA X family that supports text, audio, and vision as both inputs and outputs. By consolidating multimodal understanding and generation into a single model rather than separate modality-specific pipelines, HyperCLOVA X 8B Omni serves as an 8B-scale omni-pathfinding point toward practical any-to-any omni assistants. At a high level, the model unifies modalities through a shared next-token prediction interface over an interleaved multimodal sequence, while vision and audio encoders inject continuous embeddings for fine-grained understanding and grounding. Empirical evaluations demonstrate competitive performance against comparably sized models across diverse input-output combinations spanning text, audio, and vision, in both Korean and English. We anticipate that the open-weight release of HyperCLOVA X 8B Omni will support a wide range of research and deployment scenarios.
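
The abstract says modalities are unified through next-token prediction over an interleaved multimodal sequence, with vision and audio encoders injecting continuous embeddings. The NumPy sketch below shows only the input-side assembly, under our own assumptions: toy sizes, random weights, and a hypothetical `toy_encoder` whose output is already projected to the model width. The decoder that predicts the next token over this sequence, and how non-text outputs are produced, are not shown.

```python
import numpy as np

HIDDEN = 8        # toy model width (assumption)
VOCAB_SIZE = 100  # toy text vocabulary size (assumption)
rng = np.random.default_rng(0)
token_embedding = rng.normal(size=(VOCAB_SIZE, HIDDEN))  # lookup table for discrete text tokens

def toy_encoder(num_frames: int) -> np.ndarray:
    """Hypothetical stand-in for a vision/audio encoder plus projection to HIDDEN."""
    return rng.normal(size=(num_frames, HIDDEN))

def build_input(segments) -> np.ndarray:
    """Assemble ("text", ids) and ("image" | "audio", embeddings) segments into one (seq_len, HIDDEN) array."""
    parts = []
    for kind, payload in segments:
        if kind == "text":
            parts.append(token_embedding[np.asarray(payload)])  # discrete ids -> embeddings
        else:
            parts.append(np.asarray(payload))  # continuous encoder outputs inserted as-is
    return np.concatenate(parts, axis=0)

sequence = build_input([
    ("text", [1, 2, 3]),        # e.g. a question prefix
    ("image", toy_encoder(4)),  # 4 visual embeddings
    ("text", [5]),
])
print(sequence.shape)  # (8, 8)
```

Keeping the encoder outputs continuous (rather than quantizing them into tokens) is what the abstract credits for fine-grained understanding; the shared sequence is what lets one model handle any ordering of modalities.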


[NLP-36] BanglaIPA: Towards Robust Text-to-IPA Transcription with Contextual Rewriting in Bengali

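【Quick Read】: This paper addresses the lack of a robust automatic International Phonetic Alphabet (IPA) transcription system for Bengali; existing methods perform poorly on regional dialects and numerical expressions and generalize poorly to unseen words. The key to the solution is BanglaIPA, which combines a character-based vocabulary with word-level alignment, speeds up inference with a precomputed word-to-IPA mapping dictionary for previously observed words, and substantially improves accuracy on regional variants and numerals. On standard Bengali and six regional variants of the DUAL-IPA dataset, it outperforms baseline IPA transcription models by 58.4-78.7% and reaches an overall mean word error rate of 11.4%.

Link: https://arxiv.org/abs/2601.01778

Authors: Jakir Hasan,Shrestha Datta,Md Saiful Islam,Shubhashis Roy Dipta,Ameya Debnath

Affiliations: Shahjalal University of Science and Technology; University of Maryland, Baltimore County

Categories: Computation and Language (cs.CL)

Notes: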

Abstract:Despite its widespread use, Bengali lacks a robust automated International Phonetic Alphabet (IPA) transcription system that effectively supports both standard language and regional dialectal texts. Existing approaches struggle to handle regional variations, numerical expressions, and generalize poorly to previously unseen words. To address these limitations, we propose BanglaIPA, a novel IPA generation system that integrates a character-based vocabulary with word-level alignment. The proposed system accurately handles Bengali numerals and demonstrates strong performance across regional dialects. BanglaIPA improves inference efficiency by leveraging a precomputed word-to-IPA mapping dictionary for previously observed words. The system is evaluated on the standard Bengali and six regional variations of the DUAL-IPA dataset. Experimental results show that BanglaIPA outperforms baseline IPA transcription models by 58.4-78.7% and achieves an overall mean word error rate of 11.4%, highlighting its robustness in phonetic transcription generation for the Bengali language.
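
The efficiency gain described in the abstract comes from consulting a precomputed word-to-IPA dictionary before running the model. A minimal sketch of that dispatch, assuming whitespace tokenization; the lexicon entries are illustrative, `toy_model` is a hypothetical placeholder for the neural transcriber, and numeral and dialect handling are not modelled.

```python
from typing import Callable, Dict, List

def transcribe(text: str, lexicon: Dict[str, str], fallback: Callable[[str], str]) -> str:
    """Word-level IPA transcription: dictionary lookup first, model only for unseen words."""
    ipa: List[str] = []
    for word in text.split():
        if word in lexicon:
            ipa.append(lexicon[word])   # precomputed entry: no model call needed
        else:
            ipa.append(fallback(word))  # unseen word: run the (character-level) model
    return " ".join(ipa)

# Toy lexicon (illustrative IPA) and a hypothetical fallback that records its calls.
LEXICON = {"আমি": "ami", "ভাত": "bʱat̪"}
model_calls: List[str] = []

def toy_model(word: str) -> str:
    model_calls.append(word)
    return f"<model:{word}>"

print(transcribe("আমি ভাত খাই", LEXICON, toy_model))
print(model_calls)  # ['খাই']
```

Only the out-of-vocabulary word reaches the model, so the cost of neural decoding scales with the number of unseen words rather than with input length.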


[NLP-37] Can LLMs Track Their Output Length? A Dynamic Feedback Mechanism for Precise Length Regulation

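【Quick Read】: This paper addresses the difficulty large language models (LLMs) have in precisely controlling output length, which matters in practical applications that must meet a specific word, sentence, or token count. The authors show that LLMs often fail to accurately measure the length of input text, which leads to poor adherence to length constraints. The key to the solution is a training-free dynamic length feedback mechanism that injects length feedback during generation, letting the model adaptively adjust its output toward the target length while preserving generation quality. Experiments on summarization and biography generation show significantly better length precision, and further supervised fine-tuning lets the method generalize to a broader range of text-generation tasks.

Link: https://arxiv.org/abs/2601.01768

Authors: Meiman Xiao,Ante Wang,Qingguo Hu,Zhongjian Miao,Huangjun Shen,Longyue Wang,Weihua Luo,Jinsong Su

Affiliations: Xiamen University; Alibaba International Digital Commerce Group; Li Auto Inc.

Categories: Computation and Language (cs.CL)

Notes: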

Abstract:Precisely controlling the length of generated text is a common requirement in real-world applications. However, despite significant advancements in following human instructions, Large Language Models (LLMs) still struggle with this task. In this work, we demonstrate that LLMs often fail to accurately measure input text length, leading to poor adherence to length constraints. To address this issue, we propose a novel length regulation approach that incorporates dynamic length feedback during generation, enabling adaptive adjustments to meet target lengths. Experiments on summarization and biography tasks show our training-free approach significantly improves precision in achieving target token, word, or sentence counts without compromising quality. Additionally, we demonstrate that further supervised fine-tuning allows our method to generalize effectively to broader text-generation tasks.
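
The abstract describes feeding length information back to the model during generation, but it does not give the feedback format, how often it is inserted, or whether it counts tokens, words, or sentences. The loop below is therefore a hypothetical word-count variant: before each chunk, the model sees how much it has written and how much remains. `toy_chunk` stands in for an LLM call and obeys the feedback exactly, which a real model will only do approximately.

```python
import re
from typing import Callable, List

def generate_with_length_feedback(
    prompt: str,
    target_words: int,
    generate_chunk: Callable[[str], str],
    max_steps: int = 50,
) -> str:
    """Generate in chunks, telling the model its current and remaining word count before each chunk."""
    words: List[str] = []
    for _ in range(max_steps):
        remaining = target_words - len(words)
        if remaining <= 0:
            break
        feedback = f"[written: {len(words)} words; remaining: {remaining}]"
        # The feedback goes into the model's context only, never into the returned text.
        context = "\n".join([prompt, " ".join(words), feedback])
        chunk = generate_chunk(context).split()
        if not chunk:
            break  # model chose to stop
        words.extend(chunk)
    return " ".join(words)

def toy_chunk(context: str) -> str:
    """Hypothetical model: reads the latest feedback and writes at most 3 words."""
    remaining = int(re.findall(r"remaining: (\d+)", context)[-1])
    return " ".join(["word"] * min(3, remaining))

text = generate_with_length_feedback("Summarize the article.", 10, toy_chunk)
print(len(text.split()))  # 10
```

The loop does not truncate the output: if a model ignores the feedback it will overshoot, which keeps the sketch honest about where the length control actually comes from.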


[NLP-38] Context-Free Recognition with Transformers

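【Quick Read】: This paper studies the ability of Transformer models to recognize context-free languages (CFLs). Prior work indicates that, under standard complexity-theoretic assumptions, fixed-depth Transformers without additional mechanisms cannot recognize all CFLs, or even all of the simpler regular languages, which limits their use on tasks with complex syntactic structure. The key to the solution is an extended Transformer with O(log n) looping layers which, given O(n^6) padding tokens, can recognize any CFL. Although feasible in theory, this construction is too costly to use in practice; the authors further show that for natural subclasses such as unambiguous CFLs, O(n^3) padding suffices, which is considerably more practical. Experiments confirm that looping helps on languages that theoretically require logarithmic depth, shedding light on both the inherent complexity of CFL recognition with Transformers and ways to make it more efficient.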
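
For readers less familiar with CFL recognition, the classical CYK algorithm decides membership for a grammar in Chomsky normal form in O(n^3 · |G|) time via dynamic programming over all substrings. The sketch below is that textbook algorithm, included only as a reference point for what recognizing a CFL involves; it is not the paper's looped-Transformer construction, and the parenthesis grammar is our own example.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

Unary = Dict[str, Set[str]]               # terminal -> nonterminals A with A -> terminal
Binary = Dict[Tuple[str, str], Set[str]]  # (B, C) -> nonterminals A with A -> B C

def cyk(word: str, unary: Unary, binary: Binary, start: str = "S") -> bool:
    """Decide membership of `word` for a grammar in Chomsky normal form (no empty string)."""
    n = len(word)
    if n == 0:
        return False
    # table[i][k] holds the nonterminals that derive word[i : i + k + 1]
    table: List[List[Set[str]]] = [[set() for _ in range(n)] for _ in range(n)]
    for i, ch in enumerate(word):
        table[i][0] = set(unary.get(ch, set()))
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            for split in range(1, length):
                left = table[i][split - 1]
                right = table[i + split][length - split - 1]
                for b, c in product(left, right):
                    table[i][length - 1] |= binary.get((b, c), set())
    return start in table[0][n - 1]

# Balanced parentheses (Dyck language) in CNF: S -> S S | L A | L R, A -> S R, L -> "(", R -> ")"
UNARY: Unary = {"(": {"L"}, ")": {"R"}}
BINARY: Binary = {("S", "S"): {"S"}, ("L", "A"): {"S"}, ("L", "R"): {"S"}, ("S", "R"): {"A"}}

for w in ["()", "(())", "()()", "(()", ")("]:
    print(w, cyk(w, UNARY, BINARY))  # True, True, True, False, False
```

The three nested loops over span length, start position, and split point are where the cubic cost comes from.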

Link: https://arxiv.org/abs/2601.01754

Authors: Selim Jerad,Anej Svete,Sophie Hao,Ryan Cotterell,William Merrill

Affiliations: ETH Zürich; Boston University; Allen Institute for AI

Categories: Machine Learning (cs.LG); Computational Complexity (cs.CC); Computation and Language (cs.CL); Formal Languages and Automata Theory (cs.FL)

Notes: