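This post lists the latest papers retrieved from arXiv.org on 2025-12-15. It is updated automatically and grouped into five broad areas: NLP, CV, ML, AI, and IR. To receive the list by email on a schedule, leave your email address in the comments.

Note: The daily paper data is retrieved from arXiv.org and updated automatically at around 12:00 each day.

Tip: If you would like to receive the daily paper data by email, leave your email address in the comments.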

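Contents

Overview (2025-12-15)

390 papers were updated today, including:

- Natural language processing: 40 papers (Computation and Language (cs.CL))

- Artificial intelligence: 91 papers (Artificial Intelligence (cs.AI))

- Computer vision: 105 papers (Computer Vision and Pattern Recognition (cs.CV))

- Machine learning: 102 papers (Machine Learning (cs.LG))

Natural Language Processing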

[NLP-0] SUMFORU: An LLM-Based Review Summarization Framework for Personalized Purchase Decision Support

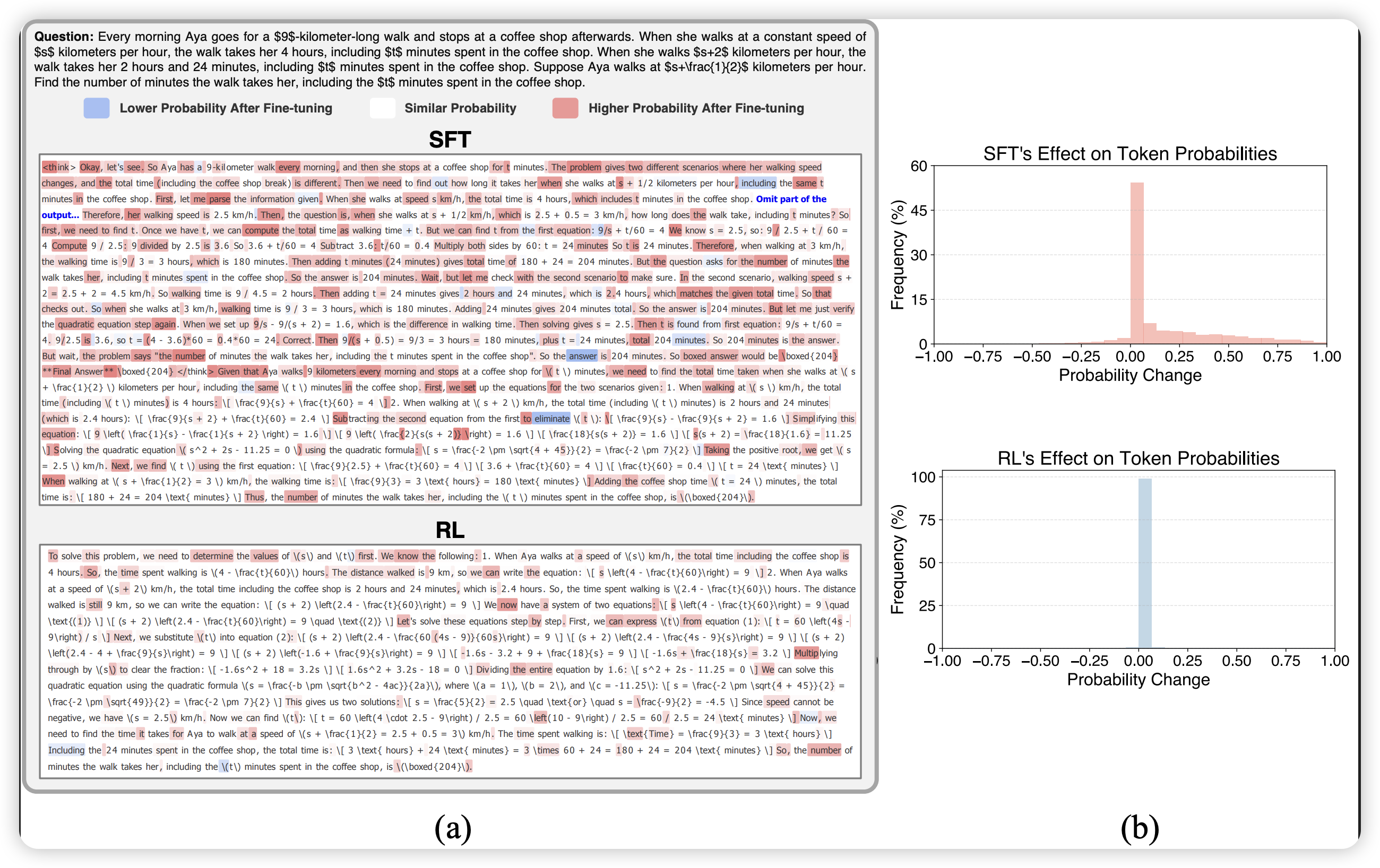

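Quick read: This paper addresses the difficulty users face in making decisions from online product reviews, whose signals are rich but noisy, and notes that current summarizers based on Large Language Models (LLMs) are generic and fail to match individual preferences. The key to its solution is SUMFORU, a steerable review summarization framework that produces personalized output by combining a high-quality data pipeline (built from the Amazon 2023 Review Dataset) with a two-stage alignment procedure: the first stage performs persona-aware Supervised Fine-Tuning (SFT) via asymmetric knowledge distillation, and the second stage uses Reinforcement Learning with AI Feedback (RLAIF) with a preference estimator to capture fine-grained, persona-relevant signals, improving the consistency, grounding, and preference alignment of the summaries.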

Link: https://arxiv.org/abs/2512.11755

Authors: Yuming Feng, Xinrui Jiang

Affiliations: Stanford University

Categories: Computation and Language (cs.CL)

Comments: Code available at this https URL

Abstract:Online product reviews contain rich but noisy signals that overwhelm users and hinder effective decision-making. Existing LLM-based summarizers remain generic and fail to account for individual preferences, limiting their practical utility. We propose SUMFORU, a steerable review summarization framework that aligns outputs with explicit user personas to support personalized purchase decisions. Our approach integrates a high-quality data pipeline built from the Amazon 2023 Review Dataset with a two-stage alignment procedure: (1) persona-aware Supervised Fine-Tuning (SFT) via asymmetric knowledge distillation, and (2) Reinforcement Learning with AI Feedback (RLAIF) using a preference estimator to capture fine-grained, persona-relevant signals. We evaluate the model across rule-based, LLM-based, and human-centered metrics, demonstrating consistent improvements in consistency, grounding, and preference alignment. Our framework achieves the highest performance across all evaluation settings and generalizes effectively to unseen product categories. Our results highlight the promise of steerable pluralistic alignment for building next-generation personalized decision-support systems.

[NLP-1] From Signal to Turn: Interactional Friction in Modular Speech-to-Speech Pipelines

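Quick read: This paper addresses the conversational breakdowns that occur in real interactions with current voice-based generative AI systems, which stem from structural weaknesses in the design of modular Speech-to-Speech Retrieval-Augmented Generation (S2S-RAG) pipelines. By analyzing a representative production system, it identifies three core friction patterns: Temporal Misalignment, Expressive Flattening, and Repair Rigidity. It argues that these are not isolated failures but structural consequences of a modular architecture that trades conversational fluidity for control. The key to the solution is to shift from optimizing individual components to system-level infrastructure design, carefully choreographing the interfaces and coordination between modules to achieve more natural, coherent spoken interaction.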

Link: https://arxiv.org/abs/2512.11724

Authors: Titaya Mairittha, Tanakon Sawanglok, Panuwit Raden, Jirapast Buntub, Thanapat Warunee, Napat Asawachaisuvikrom, Thanaphum Saiwongin

Affiliations: AXONS; Chulalongkorn University

Categories: Human-Computer Interaction (cs.HC); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Software Engineering (cs.SE)

Comments: 6 pages, 1 figure

Abstract:While voice-based AI systems have achieved remarkable generative capabilities, their interactions often feel conversationally broken. This paper examines the interactional friction that emerges in modular Speech-to-Speech Retrieval-Augmented Generation (S2S-RAG) pipelines. By analyzing a representative production system, we move beyond simple latency metrics to identify three recurring patterns of conversational breakdown: (1) Temporal Misalignment, where system delays violate user expectations of conversational rhythm; (2) Expressive Flattening, where the loss of paralinguistic cues leads to literal, inappropriate responses; and (3) Repair Rigidity, where architectural gating prevents users from correcting errors in real-time. Through system-level analysis, we demonstrate that these friction points should not be understood as defects or failures, but as structural consequences of a modular design that prioritizes control over fluidity. We conclude that building natural spoken AI is an infrastructure design challenge, requiring a shift from optimizing isolated components to carefully choreographing the seams between them.

[NLP-2] Speculative Decoding Speed-of-Light: Optimal Lower Bounds via Branching Random Walks

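Quick read: This paper addresses a bottleneck in accelerating Large Language Model (LLM) inference: the maximum speedup achievable by speculative generation, which verifies multiple draft tokens in parallel, is not well understood. The key to its solution is establishing the first “tight” lower bounds on the runtime of any deterministic speculative generation algorithm. By drawing a parallel between token generation and branching random walks, it casts the optimal draft tree selection problem as a probabilistic analysis and derives an upper bound on the expected number of tokens successfully predicted per speculative iteration: $\mathbb{E}[X] \leq (\mu + \mu_{(2)})\log(P)/\mu^2 + O(1)$, where $P$ is the verifier's capacity, $\mu$ is the expected entropy of the verifier's output distribution, and $\mu_{(2)}$ is the expected second log-moment. This framework exposes the inherent limits of parallel token generation and offers quantitative guidance for designing future speculative decoding systems.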

Link: https://arxiv.org/abs/2512.11718

Authors: Sergey Pankratov, Dan Alistarh

Affiliations: ISTA (Institute of Science and Technology Austria); Red Hat AI

Categories: Computation and Language (cs.CL)

Comments:

Abstract:Speculative generation has emerged as a promising technique to accelerate inference in large language models (LLMs) by leveraging parallelism to verify multiple draft tokens simultaneously. However, the fundamental limits on the achievable speedup remain poorly understood. In this work, we establish the first “tight” lower bounds on the runtime of any deterministic speculative generation algorithm. This is achieved by drawing a parallel between the token generation process and branching random walks, which allows us to analyze the optimal draft tree selection problem. We prove, under basic assumptions, that the expected number of tokens successfully predicted per speculative iteration is bounded as $\mathbb{E}[X] \leq (\mu + \mu_{(2)})\log(P)/\mu^2 + O(1)$, where $P$ is the verifier’s capacity, $\mu$ is the expected entropy of the verifier’s output distribution, and $\mu_{(2)}$ is the expected second log-moment. This result provides new insights into the limits of parallel token generation, and could guide the design of future speculative decoding systems. Empirical evaluations on Llama models validate our theoretical predictions, confirming the tightness of our bounds in practical settings.
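To get a feel for how the bound in this abstract scales, the sketch below evaluates only its leading term, (mu + mu_(2)) * log(P) / mu^2, for a few verifier capacities. The function name, the use of the natural logarithm, and the values chosen for `mu` and `mu2` are illustrative assumptions rather than figures from the paper, and the O(1) term is ignored.

```python
import math


def speculative_bound_leading_term(mu: float, mu2: float, capacity: int) -> float:
    """Leading term (mu + mu2) * log(P) / mu**2 of the bound; the O(1) term is dropped.

    Assumption: natural logarithm. mu is the expected entropy and mu2 the
    expected second log-moment of the verifier's output distribution.
    """
    if mu <= 0:
        raise ValueError("mu must be positive")
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    return (mu + mu2) * math.log(capacity) / mu ** 2


# Hypothetical values, chosen only to show the logarithmic growth in P.
for capacity in (8, 64, 512):
    term = speculative_bound_leading_term(mu=1.0, mu2=2.0, capacity=capacity)
    print(f"P={capacity:4d}  leading term={term:.2f}")
```

Under these assumptions the leading term grows with log(P), so squaring the verifier capacity only doubles it.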

[NLP-3] Automating Historical Insight Extraction from Large-Scale Newspaper Archives via Neural Topic Modeling

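Quick read: This paper addresses the challenge of extracting coherent, human-understandable themes from large, unstructured historical newspaper archives, which is made difficult by topic evolution, Optical Character Recognition (OCR) noise, and the sheer volume of text. Traditional topic-modeling methods such as Latent Dirichlet Allocation (LDA) struggle to capture the complexity and dynamics of discourse in historical texts. The key to its solution is BERTopic, a neural topic-modeling approach that uses transformer-based embeddings to extract and classify topics, enabling scalable analysis while remaining context-sensitive. It is applied here to trace long-term trends and theme co-occurrence in discourse on nuclear power and nuclear safety.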

Link: https://arxiv.org/abs/2512.11635

Authors: Keerthana Murugaraj, Salima Lamsiyah, Marten During, Martin Theobald

Affiliations: University of Luxembourg

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Retrieval (cs.IR)

Comments: This is a preprint of a manuscript submitted to Digital Scholarship in the Humanities (Oxford University Press). The paper is currently under peer review

Abstract:Extracting coherent and human-understandable themes from large collections of unstructured historical newspaper archives presents significant challenges due to topic evolution, Optical Character Recognition (OCR) noise, and the sheer volume of text. Traditional topic-modeling methods, such as Latent Dirichlet Allocation (LDA), often fall short in capturing the complexity and dynamic nature of discourse in historical texts. To address these limitations, we employ BERTopic. This neural topic-modeling approach leverages transformer-based embeddings to extract and classify topics, which, despite its growing popularity, still remains underused in historical research. Our study focuses on articles published between 1955 and 2018, specifically examining discourse on nuclear power and nuclear safety. We analyze various topic distributions across the corpus and trace their temporal evolution to uncover long-term trends and shifts in public discourse. This enables us to more accurately explore patterns in public discourse, including the co-occurrence of themes related to nuclear power and nuclear weapons and their shifts in topic importance over time. Our study demonstrates the scalability and contextual sensitivity of BERTopic as an alternative to traditional approaches, offering richer insights into historical discourses extracted from newspaper archives. These findings contribute to historical, nuclear, and social-science research while reflecting on current limitations and proposing potential directions for future work.

[NLP-4] Bounding Hallucinations: Information-Theoretic Guarantees for RAG Systems via Merlin-Arthur Protocols

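Quick read: This paper addresses the problem that current Retrieval-Augmented Generation (RAG) systems treat retrieval as a weak heuristic rather than verifiable evidence, so that Large Language Models (LLMs) answer without support, hallucinate under incomplete or misleading context, and rely on spurious evidence. The key to its solution is a training framework based on the Merlin-Arthur (M/A) protocol that treats the entire RAG pipeline, both retriever and generator, as an interactive proof system: the generator (Arthur) trains on questions of unknown provenance, Merlin provides helpful evidence, and Morgana injects adversarial, misleading context, with both using a linear-time Explainable AI (XAI) method to identify and modify the evidence most influential to Arthur. The generator thereby learns to answer when the evidence supports the answer, to reject when evidence is insufficient, and to rely only on the specific context spans that truly ground the answer. The approach improves groundedness, completeness, soundness, and reject behavior while reducing hallucinations, without manually annotated unanswerable questions.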

Link: https://arxiv.org/abs/2512.11614

Authors: Björn Deiseroth, Max Henning Höth, Kristian Kersting, Letitia Parcalabescu

Affiliations: Aleph Alpha Research Lab; TU Darmstadt; Hessian.AI Lab

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

Comments: 34 pages, 19 figures

Abstract:Retrieval-augmented generation (RAG) models rely on retrieved evidence to guide large language model (LLM) generators, yet current systems treat retrieval as a weak heuristic rather than verifiable evidence. As a result, LLMs answer without support, hallucinate under incomplete or misleading context, and rely on spurious evidence. We introduce a training framework that treats the entire RAG pipeline, both the retriever and the generator, as an interactive proof system via an adaptation of the Merlin-Arthur (M/A) protocol. Arthur (the generator LLM) trains on questions of unknown provenance: Merlin provides helpful evidence, while Morgana injects adversarial, misleading context. Both use a linear-time XAI method to identify and modify the evidence most influential to Arthur. Consequently, Arthur learns to (i) answer when the context supports the answer, (ii) reject when evidence is insufficient, and (iii) rely on the specific context spans that truly ground the answer. We further introduce a rigorous evaluation framework to disentangle explanation fidelity from baseline predictive errors. This allows us to introduce and measure the Explained Information Fraction (EIF), which normalizes M/A certified mutual-information guarantees relative to model capacity and imperfect benchmarks. Across three RAG datasets and two model families of varying sizes, M/A-trained LLMs show improved groundedness, completeness, soundness, and reject behavior, as well as reduced hallucinations, without needing manually annotated unanswerable questions. The retriever likewise improves recall and MRR through automatically generated M/A hard positives and negatives. Our results demonstrate that autonomous interactive-proof-style supervision provides a principled and practical path toward reliable RAG systems that treat retrieved documents not as suggestions, but as verifiable evidence.
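The abstract reports retriever gains in recall and MRR. For readers unfamiliar with these metrics, the sketch below shows one common way to compute recall@k and mean reciprocal rank from ranked document IDs. The function names and the toy data are assumptions for illustration; the paper's own evaluation code may differ in its details.

```python
def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant_ids:
        raise ValueError("relevant_ids must not be empty")
    hits = len(set(ranked_ids[:k]) & set(relevant_ids))
    return hits / len(relevant_ids)


def reciprocal_rank(ranked_ids, relevant_ids):
    """1 / rank of the first relevant document, or 0.0 if none is retrieved."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs):
    """Average reciprocal rank over (ranked_ids, relevant_ids) pairs."""
    return sum(reciprocal_rank(ranked, relevant) for ranked, relevant in runs) / len(runs)


# Toy queries (hypothetical document IDs).
runs = [
    (["a", "b", "c"], {"b"}),  # first relevant hit at rank 2
    (["x", "y"], {"x"}),       # first relevant hit at rank 1
    (["p", "q"], {"z"}),       # relevant document never retrieved
]
print("MRR:", mean_reciprocal_rank(runs))
print("recall@2:", recall_at_k(["a", "b", "c"], {"b", "c"}, k=2))
```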

[NLP-5] Visualizing token importance for black-box language models

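Quick read: This paper addresses how to assess the sensitivity of a black-box Large Language Model's (LLM's) output to its input tokens in real deployments, especially in high-stakes domains such as legal, medical, and regulatory compliance. Existing approaches usually focus on isolated aspects of model behavior (such as specific biases or fairness) and do not give a full picture of how outputs depend on inputs. The key to its solution is Distribution-Based Sensitivity Analysis (DBSA), a lightweight, model-agnostic procedure that quantifies each input token's influence on the output without making distributional assumptions about the LLM. Because LLMs are stochastic functions and computing prompt-level gradients is infeasible, DBSA instead assesses sensitivity from changes in the output distribution, giving practitioners a plug-and-play tool for visually exploring a model's reliance on specific input tokens.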

Link: https://arxiv.org/abs/2512.11573

Authors: Paulius Rauba, Qiyao Wei, Mihaela van der Schaar

Affiliations: Unknown

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Comments:

Abstract:We consider the problem of auditing black-box large language models (LLMs) to ensure they behave reliably when deployed in production settings, particularly in high-stakes domains such as legal, medical, and regulatory compliance. Existing approaches for LLM auditing often focus on isolated aspects of model behavior, such as detecting specific biases or evaluating fairness. We are interested in a more general question: can we understand how the outputs of black-box LLMs depend on each input token? There is a critical need to have such tools in real-world applications that rely on inaccessible API endpoints to language models. However, this is a highly non-trivial problem, as LLMs are stochastic functions (i.e. two outputs will be different by chance), while computing prompt-level gradients to approximate input sensitivity is infeasible. To address this, we propose Distribution-Based Sensitivity Analysis (DBSA), a lightweight model-agnostic procedure to evaluate the sensitivity of the output of a language model for each input token, without making any distributional assumptions about the LLM. DBSA is developed as a practical tool for practitioners, enabling quick, plug-and-play visual exploration of LLMs’ reliance on specific input tokens. Through illustrative examples, we demonstrate how DBSA can enable users to inspect LLM inputs and find sensitivities that may be overlooked by existing LLM interpretability methods.
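The general idea of measuring token sensitivity through output distributions, rather than gradients, can be illustrated with a toy example. The sketch below is not the DBSA procedure itself: the stochastic `toy_model`, the `[MASK]` replacement, and the total variation distance are all assumptions made for illustration. It samples outputs for the original prompt and for each single-token perturbation, then compares the empirical distributions.

```python
import random
from collections import Counter


def toy_model(tokens, rng):
    """Hypothetical stochastic black box: its answer depends mostly on the token 'not'."""
    p_yes = 0.2 if "not" in tokens else 0.8
    return "yes" if rng.random() < p_yes else "no"


def output_distribution(tokens, rng, n_samples):
    """Empirical distribution of outputs over repeated queries."""
    counts = Counter(toy_model(tokens, rng) for _ in range(n_samples))
    return {output: count / n_samples for output, count in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def token_sensitivities(tokens, n_samples=2000, seed=0):
    """Distance between the baseline output distribution and each perturbed one."""
    rng = random.Random(seed)
    baseline = output_distribution(tokens, rng, n_samples)
    scores = []
    for i in range(len(tokens)):
        perturbed = tokens[:i] + ["[MASK]"] + tokens[i + 1:]
        scores.append(total_variation(baseline, output_distribution(perturbed, rng, n_samples)))
    return scores


tokens = ["do", "not", "approve", "the", "claim"]
scores = token_sensitivities(tokens)
for token, score in zip(tokens, scores):
    print(f"{token:>8s}  {score:.3f}")
```

In this toy setting only the token `not` changes the output distribution noticeably; the small nonzero scores for the other tokens are sampling noise, which is why a real procedure needs some way to separate chance variation from genuine sensitivity.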

[NLP-6] Extending a Parliamentary Corpus with MPs Tweets: Automatic Annotation and Evaluation Using MultiParTweet LREC2026

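Quick read: This paper addresses the lack of cross-platform, multimodal resources for comparing political discourse on social media with formal parliamentary debates. The key to its solution is MultiParTweet, a multilingual tweet corpus that links politicians' tweets from X with the German parliamentary corpus GerParCor, enabling comparative analysis of online discourse and parliamentary debates. The tweets are annotated for sentiment, emotion, and topic using nine text-based models and one Vision-Language Model (VLM), and the automated annotations are evaluated against a manually annotated subset, yielding a resource with multimodal annotations validated by human annotators. The study also provides TTLABTweetCrawler, a tool for collecting data from X, which supports reproducibility and extension.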

Link: https://arxiv.org/abs/2512.11567

Authors: Mevlüt Bagci, Ali Abusaleh, Daniel Baumartz, Giueseppe Abrami, Maxim Konca, Alexander Mehler

Affiliations: Unknown

Categories: Computation and Language (cs.CL); Multimedia (cs.MM)

Comments: Submitted to LREC 2026

Abstract:Social media serves as a critical medium in modern politics because it both reflects politicians’ ideologies and facilitates communication with younger generations. We present MultiParTweet, a multilingual tweet corpus from X that connects politicians’ social media discourse with the German political corpus GerParCor, thereby enabling comparative analyses between online communication and parliamentary debates. MultiParTweet contains 39 546 tweets, including 19 056 media items. Furthermore, we enriched the annotation with nine text-based models and one vision-language model (VLM) to annotate MultiParTweet with emotion, sentiment, and topic annotations. Moreover, the automated annotations are evaluated against a manually annotated subset. MultiParTweet can be reconstructed using our tool, TTLABTweetCrawler, which provides a framework for collecting data from X. As a methodological demonstration, we examine whether the models can predict each other using the outputs of the remaining models. In summary, we provide MultiParTweet, a resource integrating automatic text and media-based annotations validated with human annotations, and TTLABTweetCrawler, a general-purpose X data collection tool. Our analysis shows that the models are mutually predictable. In addition, VLM-based annotations were preferred by human annotators, suggesting that multimodal representations align more with human interpretation.

[NLP-7] DentalGPT: Incentivizing Multimodal Complex Reasoning in Dentistry

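Quick read: This paper addresses the difficulty current Multimodal Large Language Models (MLLMs) have in capturing fine-grained dental visual details and their lack of the complex reasoning ability needed for precise diagnosis. The key to its solution has two parts. First, it builds what it describes as the largest annotated multimodal dental dataset to date, with over 120k dental images paired with detailed descriptions that highlight diagnostically relevant visual features. Second, it uses staged training: training on this dataset strengthens the model's visual understanding of dental conditions, and a subsequent reinforcement learning stage strengthens its multimodal complex reasoning. With only 7B parameters, DentalGPT outperforms many state-of-the-art MLLMs on disease classification and dental Visual Question Answering (VQA) tasks.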

Link: https://arxiv.org/abs/2512.11558

Authors: Zhenyang Cai, Jiaming Zhang, Junjie Zhao, Ziyi Zeng, Yanchao Li, Jingyi Liang, Junying Chen, Yunjin Yang, Jiajun You, Shuzhi Deng, Tongfei Wang, Wanting Chen, Chunxiu Hao, Ruiqi Xie, Zhenwei Wen, Xiangyi Feng, Zou Ting, Jin Zou Lin, Jianquan Li, Guangjun Yu, Liangyi Chen, Junwen Wang, Shan Jiang, Benyou Wang

Affiliations: Shenzhen Stomatology Hospital (Pingshan) of Southern Medical University; The Chinese University of Hong Kong, Shenzhen; State Key Laboratory of Membrane Biology, Beijing Key Laboratory of Cardiometabolic Molecular Medicine, Institute of Molecular Medicine, National Biomedical Imaging Center, School of Future Technology, Peking University; Freedom AI; Division of Applied Oral Sciences & Community Dental Care, Faculty of Dentistry, The University of Hong Kong; Beijing Institute of Collaborative Innovation; National Health Data Institute, Shenzhen; Shenzhen Loop Area Institute; Shenzhen Institute of Big Data

Categories: Computer Vision and Pattern Recognition (cs.CV); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments:

Abstract:Reliable interpretation of multimodal data in dentistry is essential for automated oral healthcare, yet current multimodal large language models (MLLMs) struggle to capture fine-grained dental visual details and lack sufficient reasoning ability for precise diagnosis. To address these limitations, we present DentalGPT, a specialized dental MLLM developed through high-quality domain knowledge injection and reinforcement learning. Specifically, the largest annotated multimodal dataset for dentistry to date was constructed by aggregating over 120k dental images paired with detailed descriptions that highlight diagnostically relevant visual features, making it the multimodal dataset with the most extensive collection of dental images to date. Training on this dataset significantly enhances the MLLM’s visual understanding of dental conditions, while the subsequent reinforcement learning stage further strengthens its capability for multimodal complex reasoning. Comprehensive evaluations on intraoral and panoramic benchmarks, along with dental subsets of medical VQA benchmarks, show that DentalGPT achieves superior performance in disease classification and dental VQA tasks, outperforming many state-of-the-art MLLMs despite having only 7B parameters. These results demonstrate that high-quality dental data combined with staged adaptation provides an effective pathway for building capable and domain-specialized dental MLLMs.

[NLP-8] HFS: Holistic Query-Aware Frame Selection for Efficient Video Reasoning

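Quick read: This paper addresses two core problems in key frame selection for video understanding: traditional Top-K methods score frames independently, so the selected frames are temporally clustered and visually redundant; and lightweight selectors trained on pseudo labels generated offline receive a supervisory signal that cannot adapt dynamically to task objectives. The key to its solution is an end-to-end trainable, task-adaptive framework. First, a Chain-of-Thought approach guides a Small Language Model (SLM) to generate task-specific implicit query vectors, which are combined with multimodal features for dynamic frame scoring. Second, a continuous set-level objective incorporating relevance, coverage, and redundancy is optimized differentiably via Gumbel-Softmax to select the best frame combination at the set level. Finally, student-teacher mutual learning aligns the frame importance distributions of the SLM and the Multimodal Large Language Model (MLLM) via KL divergence, which, combined with a cross-entropy loss, enables end-to-end optimization without static pseudo labels.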

Link: https://arxiv.org/abs/2512.11534

Authors: Yiqing Yang, Kin-Man Lam

Affiliations: The Hong Kong Polytechnic University

Categories: Computer Vision and Pattern Recognition (cs.CV); Computation and Language (cs.CL); Multimedia (cs.MM)

Comments: 18 pages, 8 figures

Abstract:Key frame selection in video understanding presents significant challenges. Traditional top-K selection methods, which score frames independently, often fail to optimize the selection as a whole. This independent scoring frequently results in selecting frames that are temporally clustered and visually redundant. Additionally, training lightweight selectors using pseudo labels generated offline by Multimodal Large Language Models (MLLMs) prevents the supervisory signal from dynamically adapting to task objectives. To address these limitations, we propose an end-to-end trainable, task-adaptive framework for frame selection. A Chain-of-Thought approach guides a Small Language Model (SLM) to generate task-specific implicit query vectors, which are combined with multimodal features to enable dynamic frame scoring. We further define a continuous set-level objective function that incorporates relevance, coverage, and redundancy, enabling differentiable optimization via Gumbel-Softmax to select optimal frame combinations at the set level. Finally, student-teacher mutual learning is employed, where the student selector (SLM) and teacher reasoner (MLLM) are trained to align their frame importance distributions via KL divergence. Combined with cross-entropy loss, this enables end-to-end optimization, eliminating reliance on static pseudo labels. Experiments across various benchmarks, including Video-MME, LongVideoBench, MLVU, and NExT-QA, demonstrate that our method significantly outperforms existing approaches.
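The contrast between independent top-K scoring and set-level selection can be shown on a toy example. The sketch below is not the paper's method: it swaps the differentiable Gumbel-Softmax optimization for a simple greedy search, omits the coverage term, and uses made-up relevance scores and two-dimensional frame features. It only illustrates why scoring the set as a whole avoids picking near-duplicate frames.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def set_score(selected, relevance, features, redundancy_weight=1.0):
    """Set-level score: total relevance minus pairwise redundancy."""
    rel = sum(relevance[i] for i in selected)
    red = sum(
        cosine(features[i], features[j])
        for pos, i in enumerate(selected)
        for j in selected[pos + 1:]
    )
    return rel - redundancy_weight * red


def top_k_select(relevance, k):
    """Baseline: pick the k frames with the highest independent scores."""
    ranked = sorted(range(len(relevance)), key=lambda i: relevance[i], reverse=True)
    return sorted(ranked[:k])


def greedy_set_select(relevance, features, k, redundancy_weight=1.0):
    """Greedy stand-in for set-level optimization: add the frame that most improves the set score."""
    selected, remaining = [], list(range(len(relevance)))
    for _ in range(k):
        best = max(
            remaining,
            key=lambda i: set_score(selected + [i], relevance, features, redundancy_weight),
        )
        selected.append(best)
        remaining.remove(best)
    return sorted(selected)


# Hypothetical data: frames 0-2 are near-duplicates with high relevance.
relevance = [0.90, 0.88, 0.87, 0.60, 0.50]
features = [[1.0, 0.0], [1.0, 0.05], [1.0, 0.1], [0.0, 1.0], [0.7, 0.7]]

top_k = top_k_select(relevance, k=2)
greedy = greedy_set_select(relevance, features, k=2)
print("top-K:", top_k, "score:", round(set_score(top_k, relevance, features), 3))
print("set-level:", greedy, "score:", round(set_score(greedy, relevance, features), 3))
```

With this data, top-K picks two near-identical frames, while the set-level search trades a little relevance for a visually distinct second frame.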

[NLP-9] Does Less Hallucination Mean Less Creativity? An Empirical Investigation in LLMs

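Quick read: This paper studies hallucination in Large Language Models (LLMs), the generation of factually incorrect content, and in particular the unclear effect of existing hallucination-mitigation methods on creativity in applications such as scientific discovery, which require both factual accuracy and creative hypothesis generation. The key to its approach is a systematic evaluation of how three mainstream hallucination-reduction techniques, Chain of Verification (CoVe), Decoding by Contrasting Layers (DoLa), and Retrieval-Augmented Generation (RAG), affect divergent creativity. The results show that CoVe enhances divergent thinking, DoLa suppresses it, and RAG has minimal impact, giving empirical guidance for choosing a method according to the required balance between accuracy and creativity in scientific applications.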

Link: https://arxiv.org/abs/2512.11509

Authors: Mohor Banerjee, Nadya Yuki Wangsajaya, Syed Ali Redha Alsagoff, Min Sen Tan, Zachary Choy Kit Chun, Alvin Chan Guo Wei

Affiliations: Nanyang Technological University; National University of Singapore

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments:

Abstract:Large Language Models (LLMs) exhibit remarkable capabilities in natural language understanding and reasoning, but suffer from hallucination: the generation of factually incorrect content. While numerous methods have been developed to reduce hallucinations, their impact on creative generations remains unexplored. This gap is particularly critical for AI-assisted scientific discovery, which requires both factual accuracy and creative hypothesis generation. We investigate how three hallucination-reduction techniques: Chain of Verification (CoVe), Decoding by Contrasting Layers (DoLa), and Retrieval-Augmented Generation (RAG), affect creativity in LLMs. Evaluating multiple model families (LLaMA, Qwen, Mistral) at varying scales (1B - 70B parameters) on two creativity benchmarks (NeoCoder and CS4), we find that these methods have opposing effects on divergent creativity. CoVe enhances divergent thinking, DoLa suppresses it, and RAG shows minimal impact. Our findings provide guidance for selecting appropriate hallucination-reduction methods in scientific applications, where the balance between factual accuracy and creative exploration is crucial.

[NLP-10] Building Patient Journeys in Hebrew: A Language Model for Clinical Timeline Extraction ALT IJCAI2025

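Quick read: This paper addresses the automatic extraction of structured clinical timelines from Electronic Health Records (EHR) in order to build patient journeys. The core challenge is accurately identifying and ordering the temporal relations between medical events while preserving privacy. The key to its solution is a Hebrew medical language model based on DictaBERT 2.0 and continually pre-trained on over five million de-identified hospital records, which improves modeling of temporal relations between clinical events. The study also finds that vocabulary adaptation improves token efficiency and that de-identification does not harm downstream performance, providing empirical support for privacy-conscious model development.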

Link: https://arxiv.org/abs/2512.11502

Authors: Kai Golan Hashiloni, Brenda Kasabe Nokai, Michal Shevach, Esthy Shemesh, Ronit Bartin, Anna Bergrin, Liran Harel, Nachum Dershowitz, Liat Nadai Arad, Kfir Bar

Affiliations: Efi Arazi School of Computer Science, Reichman University, Herzilya, Israel; Tel Aviv Sourasky Medical Center, Israel; School of Computer Science and AI, Tel Aviv University, Israel

Categories: Computation and Language (cs.CL)

Comments: In Proceedings of the Workshop on Large Language Models and Generative AI for Health Informatics 2025, IJCAI 2025, Montreal, Canada

Abstract:We present a new Hebrew medical language model designed to extract structured clinical timelines from electronic health records, enabling the construction of patient journeys. Our model is based on DictaBERT 2.0 and continually pre-trained on over five million de-identified hospital records. To evaluate its effectiveness, we introduce two new datasets – one from internal medicine and emergency departments, and another from oncology – annotated for event temporal relations. Our results show that our model achieves strong performance on both datasets. We also find that vocabulary adaptation improves token efficiency and that de-identification does not compromise downstream performance, supporting privacy-conscious model development. The model is made available for research use under ethical restrictions.

[NLP-11] Mistake Notebook Learning: Selective Batch-Wise Context Optimization for In-Context Learning

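Quick read: This paper addresses two problems in adapting Large Language Models (LLMs) to tasks: gradient fine-tuning is computationally heavy and prone to catastrophic forgetting, while In-Context Learning (ICL) has low robustness and learns poorly from mistakes. The authors propose Mistake Notebook Learning (MNL), a training-free framework whose core idea is a persistent knowledge base of abstracted error patterns. Unlike earlier memory methods that rely on single instances or trajectories, MNL abstracts errors batch-wise, extracting generalizable guidance from multiple failures, and keeps only guidance that outperforms the baseline on hold-out validation, which ensures monotonic improvement. In experiments, MNL comes close to supervised fine-tuning on GSM8K (93.9% vs 94.3%) and outperforms other training-free methods; on KaggleDBQA it reaches 28% accuracy (a 47% relative gain).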

Link: https://arxiv.org/abs/2512.11485

Authors: Xuanbo Su, Yingfang Zhang, Hao Luo, Xiaoteng Liu, Leo Huang

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments:

Abstract:Large language models (LLMs) adapt to tasks via gradient fine-tuning (heavy computation, catastrophic forgetting) or In-Context Learning (ICL: low robustness, poor mistake learning). To fix this, we introduce Mistake Notebook Learning (MNL), a training-free framework with a persistent knowledge base of abstracted error patterns. Unlike prior instance/single-trajectory memory methods, MNL uses batch-wise error abstraction: it extracts generalizable guidance from multiple failures, stores insights in a dynamic notebook, and retains only baseline-outperforming guidance via hold-out validation (ensuring monotonic improvement). We show MNL nearly matches Supervised Fine-Tuning (93.9% vs 94.3% on GSM8K) and outperforms training-free alternatives on GSM8K, Spider, AIME, and KaggleDBQA. On KaggleDBQA (Qwen3-8B), MNL hits 28% accuracy (47% relative gain), outperforming Memento (15.1%) and Training-Free GRPO (22.1%), proving it’s a strong training-free alternative for complex reasoning.
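The hold-out gating described in the abstract, keeping a piece of guidance only if it beats the current baseline, can be sketched as a simple accept-or-discard loop. Everything below is a hypothetical illustration: the note texts, their effects, and `toy_evaluate` stand in for running an LLM with the notebook in its context on a held-out set, and the paper's actual procedure may differ.

```python
def update_notebook(notebook, candidate_notes, evaluate):
    """Keep a candidate note only if it raises the hold-out score.

    Returns the final notebook and the best score after each candidate,
    which is non-decreasing by construction.
    """
    best_score = evaluate(notebook)
    history = [best_score]
    for note in candidate_notes:
        trial = notebook + [note]
        score = evaluate(trial)
        if score > best_score:
            notebook, best_score = trial, score
        history.append(best_score)
    return notebook, history


# Hypothetical effect of each note on hold-out accuracy (for illustration only).
NOTE_EFFECT = {
    "check units before answering": 0.03,
    "restate the question twice": -0.02,
    "verify SQL join keys": 0.05,
}


def toy_evaluate(notebook):
    """Stand-in for scoring the model on a hold-out set with these notes in context."""
    return round(0.60 + sum(NOTE_EFFECT[note] for note in notebook), 4)


final_notebook, history = update_notebook([], list(NOTE_EFFECT), toy_evaluate)
print(final_notebook)
print(history)
```

The note that lowers the hold-out score is discarded, so the tracked score never decreases. This is the sense in which such gating gives monotonic improvement on the validation set, though not necessarily on unseen data.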

[NLP-12] Rethinking Expert Trajectory Utilization in LLM Post-training

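Quick read: This paper addresses how best to use expert trajectories during post-training, in particular the choice of mechanism and configuration when combining Supervised Fine-Tuning (SFT) with Reinforcement Learning (RL). The key to its solution is the Plasticity-Ceiling Framework, which decomposes final performance into foundational SFT performance and subsequent RL plasticity. Systematic experiments show that a sequential SFT-then-RL pipeline outperforms synchronized approaches. The paper also gives scaling guidelines: switching to RL during the SFT Stable or Mild Overfitting sub-phase maximizes the performance ceiling; data scale determines post-training potential, while trajectory difficulty acts only as a performance multiplier; and the minimum SFT validation loss is a robust indicator for selecting the best expert trajectories.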

Link: https://arxiv.org/abs/2512.11470

Authors: Bowen Ding, Yuhan Chen, Jiayang Lv, Jiyao Yuan, Qi Zhu, Shuangshuang Tian, Dantong Zhu, Futing Wang, Heyuan Deng, Fei Mi, Lifeng Shang, Tao Lin

Affiliations: Zhejiang University; School of Engineering, Westlake University; Institute of Advanced Technology, Westlake Institute for Advanced Study; Huawei Noah’s Ark Lab

Categories: Machine Learning (cs.LG); Computation and Language (cs.CL)

Comments: 24 pages, 5 figures, under review

Abstract:While effective post-training integrates Supervised Fine-Tuning (SFT) and Reinforcement Learning (RL), the optimal mechanism for utilizing expert trajectories remains unresolved. We propose the Plasticity-Ceiling Framework to theoretically ground this landscape, decomposing performance into foundational SFT performance and the subsequent RL plasticity. Through extensive benchmarking, we establish the Sequential SFT-then-RL pipeline as the superior standard, overcoming the stability deficits of synchronized approaches. Furthermore, we derive precise scaling guidelines: (1) Transitioning to RL at the SFT Stable or Mild Overfitting Sub-phase maximizes the final ceiling by securing foundational SFT performance without compromising RL plasticity; (2) Refuting “Less is More” in the context of SFT-then-RL scaling, we demonstrate that Data Scale determines the primary post-training potential, while Trajectory Difficulty acts as a performance multiplier; and (3) The Minimum SFT Validation Loss serves as a robust indicator for selecting the expert trajectories that maximize the final performance ceiling. Our findings provide actionable guidelines for maximizing the value extracted from expert trajectories.

[NLP-13] CLINIC: Evaluating Multilingual Trustworthiness in Language Models for Healthcare

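Quick read: This paper addresses the lack of reliable trustworthiness evaluation for Language Models (LMs) in multilingual healthcare settings, where key challenges include poor performance in low-resource languages, bias, and privacy risks. The core of its solution is CLINIC, a comprehensive multilingual healthcare benchmark that evaluates LMs along five dimensions (truthfulness, fairness, safety, robustness, and privacy) through 18 diverse tasks spanning 15 languages and core healthcare topics including disease conditions, preventive actions, diagnostic tests, treatments, surgeries, and medications. The benchmark reveals shortcomings of existing models in factual correctness, demographic bias, privacy leakage, and susceptibility to adversarial attacks, and provides a quantifiable evaluation framework for improving the reliability and safety of healthcare LMs across languages.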

Link: https://arxiv.org/abs/2512.11437

Authors: Akash Ghosh, Srivarshinee Sridhar, Raghav Kaushik Ravi, Muhsin Muhsin, Sriparna Saha, Chirag Agarwal

Affiliations: Indian Institute of Technology Patna; IGIMS, Patna; University of Virginia

Categories: Computation and Language (cs.CL)

Comments: 49 pages, 31 figures

Abstract:Integrating language models (LMs) in healthcare systems holds great promise for improving medical workflows and decision-making. However, a critical barrier to their real-world adoption is the lack of reliable evaluation of their trustworthiness, especially in multilingual healthcare settings. Existing LMs are predominantly trained in high-resource languages, making them ill-equipped to handle the complexity and diversity of healthcare queries in mid- and low-resource languages, posing significant challenges for deploying them in global healthcare contexts where linguistic diversity is key. In this work, we present CLINIC, a Comprehensive Multilingual Benchmark to evaluate the trustworthiness of language models in healthcare. CLINIC systematically benchmarks LMs across five key dimensions of trustworthiness: truthfulness, fairness, safety, robustness, and privacy, operationalized through 18 diverse tasks, spanning 15 languages (covering all the major continents), and encompassing a wide array of critical healthcare topics like disease conditions, preventive actions, diagnostic tests, treatments, surgeries, and medications. Our extensive evaluation reveals that LMs struggle with factual correctness, demonstrate bias across demographic and linguistic groups, and are susceptible to privacy breaches and adversarial attacks. By highlighting these shortcomings, CLINIC lays the foundation for enhancing the global reach and safety of LMs in healthcare across diverse languages.

[NLP-14] Task-Specific Sparse Feature Masks for Molecular Toxicity Prediction with Chemical Language Models

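Quick read: This paper addresses the limited interpretability of black-box models for molecular toxicity prediction, which restricts their use in high-stakes drug safety decisions. The key to its solution is a Multi-Task Learning (MTL) framework that shares a chemical language model across tasks and adds task-specific attention modules, with an L1 sparsity penalty applied for each toxicity endpoint so that the model focuses on a minimal set of salient molecular fragments. The method improves predictive accuracy, and the sparse attention weights provide chemically intuitive visualizations that make the model's decisions easier to interpret.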

Link: https://arxiv.org/abs/2512.11412

Authors: Kwun Sy Lee, Jiawei Chen, Fuk Sheng Ford Chung, Tianyu Zhao, Zhenyuan Chen, Debby D. Wang

Affiliations: Unknown

Categories: Computational Engineering, Finance, and Science (cs.CE); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Machine Learning (cs.LG); Biomolecules (q-bio.BM)

Comments: 6 pages, 4 figures

Abstract:Reliable in silico molecular toxicity prediction is a cornerstone of modern drug discovery, offering a scalable alternative to experimental screening. However, the black-box nature of state-of-the-art models remains a significant barrier to adoption, as high-stakes safety decisions demand verifiable structural insights alongside predictive performance. To address this, we propose a novel multi-task learning (MTL) framework designed to jointly enhance accuracy and interpretability. Our architecture integrates a shared chemical language model with task-specific attention modules. By imposing an L1 sparsity penalty on these modules, the framework is constrained to focus on a minimal set of salient molecular fragments for each distinct toxicity endpoint. The resulting framework is trained end-to-end and is readily adaptable to various transformer-based backbones. Evaluated on the ClinTox, SIDER, and Tox21 benchmark datasets, our approach consistently outperforms both single-task and standard MTL baselines. Crucially, the sparse attention weights provide chemically intuitive visualizations that reveal the specific fragments influencing predictions, thereby enhancing insight into the model’s decision-making process.
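A small numerical sketch may help show why an L1 penalty pushes task-specific weights toward a sparse pattern. The code below is an illustration under stated assumptions, not the authors' architecture: `total_loss` adds an L1 term over hypothetical per-task mask weights, and `soft_threshold` applies the standard proximal step for an L1 penalty, which the abstract does not mention, to show small weights being set exactly to zero.

```python
import math


def l1_penalty(weights):
    """Sum of absolute values of a task's mask weights."""
    return sum(abs(w) for w in weights)


def total_loss(task_losses, task_masks, l1_weight):
    """Multi-task objective: summed task losses plus an L1 penalty on every task mask."""
    return sum(task_losses) + l1_weight * sum(l1_penalty(mask) for mask in task_masks)


def soft_threshold(weights, threshold):
    """Proximal step for the L1 penalty: shrink each weight toward zero, clipping small ones to 0."""
    shrunk = []
    for w in weights:
        magnitude = abs(w) - threshold
        shrunk.append(math.copysign(magnitude, w) if magnitude > 0 else 0.0)
    return shrunk


# Hypothetical mask weights over four molecular fragments for one toxicity endpoint.
mask = [0.9, 0.05, -0.02, -0.4]
sparse_mask = soft_threshold(mask, threshold=0.1)
print("before:", mask)
print("after: ", sparse_mask)
print("loss:", total_loss([0.3, 0.2], [[0.5, -0.25], [1.0]], l1_weight=0.1))
```

After shrinkage only the two fragments with large weights remain non-zero, which is the kind of sparse pattern that makes per-endpoint visualizations easier to read.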

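[NLP-15] Minimal Clips, Maximum Salience: Long Video Summarization via Key Moment Extraction

Quick read: This paper addresses the tendency of Vision-Language Models (VLMs) to lose important visual information when processing long videos, and proposes a cost-effective way to analyze lengthy video content. The key to the solution is to divide the video into short clips, generate a compact visual description of each with a lightweight video captioning model, and pass the descriptions to a large language model (LLM), which selects the K clips carrying the most relevant visual information for a multimodal summary. On MovieSum, reference clips covering less than 6% of each movie are shown to be sufficient for a complete multimodal summary; the proposed selection comes close to the summarization performance of those reference clips, captures substantially more relevant video information than random clip selection, and keeps computational cost low.

Link: https://arxiv.org/abs/2512.11399

Authors: Galann Pennec,Zhengyuan Liu,Nicholas Asher,Philippe Muller,Nancy F. Chen

Affiliations: IRIT, University of Toulouse, France; Agency for Science, Technology and Research (A*STAR), Singapore; CNRS@CREATE, Singapore; CNRS, IRIT, France

Categories: Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

Comments: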

Abstract:Vision-Language Models (VLMs) are able to process increasingly longer videos. Yet, important visual information is easily lost throughout the entire context and missed by VLMs. Also, it is important to design tools that enable cost-effective analysis of lengthy video content. In this paper, we propose a clip selection method that targets key video moments to be included in a multimodal summary. We divide the video into short clips and generate compact visual descriptions of each using a lightweight video captioning model. These are then passed to a large language model (LLM), which selects the K clips containing the most relevant visual information for a multimodal summary. We evaluate our approach on reference clips for the task, automatically derived from full human-annotated screenplays and summaries in the MovieSum dataset. We further show that these reference clips (less than 6% of the movie) are sufficient to build a complete multimodal summary of the movies in MovieSum. Using our clip selection method, we achieve a summarization performance close to that of these reference clips while capturing substantially more relevant video information than random clip selection. Importantly, we maintain low computational cost by relying on a lightweight captioning model.

[NLP-16] Improving Translation Quality by Selecting Better Data for LLM Fine-Tuning: A Comparative Analysis

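Quick read: This paper quantifies how the data selection strategy affects model performance when fine-tuning open LLMs for machine translation (MT). Its central finding is that semantic selectors (such as COMET Kiwi and QuRate) consistently outperform lexical and geometry-based heuristics (such as TF-IDF and FD-Score), and that model performance changes substantially even when the selected data differ by less than 3%, which shows how sensitive fine-tuning is to data quality. The key to the approach is a controlled experimental design that compares the selectors, alongside random selection, on Japanese-English parallel corpora.

Link: https://arxiv.org/abs/2512.11388

Authors: Felipe Ribeiro Fujita de Mello,Hideyuki Takada

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: To appear at IEEE Big Data 2025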

Abstract:We investigated the impact of data selection on machine translation fine-tuning for open LLMs. Using Japanese-English corpora, we compare five selectors: TF-IDF, COMET Kiwi, QuRate, FD-Score, and random selection, under controlled training conditions. We observed that semantic selectors consistently outperform lexical and geometry-based heuristics, and that even when the selected data differ by less than 3%, the impact on model performance is substantial, underscoring the sensitivity of fine-tuning to data quality.

[NLP-17] Mining Legal Arguments to Study Judicial Formalism

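Quick read: This paper addresses the difficulty of analyzing judicial reasoning at scale, focusing on the debate over whether judging in Central and Eastern Europe (CEE) is formalistic. It develops automated methods to detect and classify legal argument types in decisions of the Czech supreme courts and uses the results to challenge prevailing claims about formalism in the region empirically. The key to the solution is MADON, an expert-annotated dataset (272 decisions, 9,183 paragraphs, eight argument types, and holistic formalism labels), combined with continued pretraining of transformer LLMs on a corpus of 300k Czech court decisions and with techniques such as asymmetric loss and class weighting to mitigate dataset imbalance. The best models detect argumentative paragraphs (82.6% macro-F1), classify traditional types of legal argument (77.5% macro-F1), and classify decisions as formalistic or non-formalistic (83.2% macro-F1). A three-stage pipeline combining ModernBERT, Llama 3.1, and traditional feature-based machine learning handles decision classification while reducing computational cost and increasing explainability, offering a replicable methodology for computational legal studies.

Link: https://arxiv.org/abs/2512.11374

Authors: Tomáš Koref,Lena Held,Mahammad Namazov,Harun Kumru,Yassine Thlija,Christoph Burchard,Ivan Habernal

Affiliations: Unknown

Categories: Computation and Language (cs.CL); Computers and Society (cs.CY)

Comments: pre-print under review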

Abstract:Courts must justify their decisions, but systematically analyzing judicial reasoning at scale remains difficult. This study refutes claims about formalistic judging in Central and Eastern Europe (CEE) by developing automated methods to detect and classify judicial reasoning in Czech Supreme Courts’ decisions using state-of-the-art natural language processing methods. We create the MADON dataset of 272 decisions from two Czech Supreme Courts with expert annotations of 9,183 paragraphs with eight argument types and holistic formalism labels for supervised training and evaluation. Using a corpus of 300k Czech court decisions, we adapt transformer LLMs for Czech legal domain by continued pretraining and experiment with methods to address dataset imbalance including asymmetric loss and class weighting. The best models successfully detect argumentative paragraphs (82.6% macro-F1), classify traditional types of legal argument (77.5% macro-F1), and classify decisions as formalistic/non-formalistic (83.2% macro-F1). Our three-stage pipeline combining ModernBERT, Llama 3.1, and traditional feature-based machine learning achieves promising results for decision classification while reducing computational costs and increasing explainability. Empirically, we challenge prevailing narratives about CEE formalism. This work shows that legal argument mining enables reliable judicial philosophy classification and shows the potential of legal argument mining for other important tasks in computational legal studies. Our methodology is easily replicable across jurisdictions, and our entire pipeline, datasets, guidelines, models, and source codes are available at this https URL.
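
All three headline scores above are macro-F1, which averages per-class F1 with equal weight and therefore does not let a dominant class hide poor performance on rare argument types. A minimal sketch of the metric follows; the function name and the toy labels in the usage note are illustrative only.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all labels seen in either list."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); define it as 0 when the class never occurs.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

For example, `macro_f1(["a", "a", "b", "b"], ["a", "b", "b", "b"])` averages an F1 of 2/3 for class `a` and 4/5 for class `b`.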

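[NLP-18] qa-FLoRA: Data-free query-adaptive Fusion of LoRAs for LLMs AAAI2026

Quick read: This paper addresses the problem of fusing Low-Rank Adaptation (LoRA) adapters for multi-domain composite queries, that is, how to combine several LoRA modules dynamically and adaptively without composite training data or supervision. The key to the solution is qa-FLoRA, which measures the distributional divergence between the base model and each LoRA adapter and uses it to compute layer-level fusion weights at inference time. This gives query-adaptive fusion with no extra training or data; it outperforms static fusion and training-free baselines and significantly narrows the gap with supervised fusion.

Link: https://arxiv.org/abs/2512.11366

Authors: Shreya Shukla,Aditya Sriram,Milinda Kuppur Narayanaswamy,Hiteshi Jain

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: Accepted at AAAI 2026 (Main Technical Track)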

Abstract:The deployment of large language models for specialized tasks often requires domain-specific parameter-efficient finetuning through Low-Rank Adaptation (LoRA) modules. However, effectively fusing these adapters to handle complex, multi-domain composite queries remains a critical challenge. Existing LoRA fusion approaches either use static weights, which assign equal relevance to each participating LoRA, or require data-intensive supervised training for every possible LoRA combination to obtain respective optimal fusion weights. We propose qa-FLoRA, a novel query-adaptive data-and-training-free method for LoRA fusion that dynamically computes layer-level fusion weights by measuring distributional divergence between the base model and respective adapters. Our approach eliminates the need for composite training data or domain-representative samples, making it readily applicable to existing adapter collections. Extensive experiments across nine multilingual composite tasks spanning mathematics, coding, and medical domains, show that qa-FLoRA outperforms static fusion by ~5% with LLaMA-2 and ~6% with LLaMA-3, and the training-free baselines by ~7% with LLaMA-2 and ~10% with LLaMA-3, while significantly closing the gap with supervised baselines. Further, layer-level analysis of our fusion weights reveals interpretable fusion patterns, demonstrating the effectiveness of our approach for robust multi-domain adaptation.
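
One way to picture divergence-based fusion weights is the sketch below. It is an illustration under stated assumptions, not the paper's algorithm: the abstract does not specify which divergence is used or how it is normalized, so the choice of KL divergence, its direction, and the sum-to-one normalization are all hypothetical here.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def fusion_weights(base_dist, adapter_dists):
    """Give each adapter a weight proportional to its divergence from the base model.

    base_dist:     next-token distribution of the base model at one layer (assumed input).
    adapter_dists: one distribution per LoRA adapter for the same query and layer.
    """
    divergences = [kl_divergence(a, base_dist) for a in adapter_dists]
    total = sum(divergences)
    if total == 0:
        # No adapter changes the prediction: fall back to uniform weights.
        return [1.0 / len(divergences)] * len(divergences)
    return [d / total for d in divergences]
```

The intuition in this sketch is that an adapter whose output barely moves away from the base model has little to say about the query and receives a small weight.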

[NLP-19] Unifying Dynamic Tool Creation and Cross-Task Experience Sharing through Cognitive Memory Architecture

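Quick read: This paper addresses the difficulty Large Language Model agents have in adapting to novel tasks, which stems from limited tool availability and poor experience reuse. Existing approaches either rely on predefined tools with limited coverage or build tools from scratch without using past experience, which leads to inefficient exploration and suboptimal performance. The key to the solution is SMITH (Shared Memory Integrated Tool Hub), a unified cognitive architecture that integrates dynamic tool creation with cross-task experience sharing through hierarchical memory organization. Agent memory is split into procedural, semantic, and episodic components, which allows systematic capability expansion while preserving successful execution patterns. Tool creation is formalized as iterative code generation inside controlled sandbox environments, and experience sharing as episodic memory retrieval with semantic similarity matching. The paper also proposes a curriculum learning strategy based on agent-ensemble difficulty re-estimation. On the GAIA benchmark, SMITH reaches 81.8% Pass@1 accuracy, outperforming Alita (75.2%) and Memento (70.9%).

Link: https://arxiv.org/abs/2512.11303

Authors: Jiarun Liu,Shiyue Xu,Yang Li,Shangkun Liu,Yongli Yu,Peng Cao

Affiliations: JD.com

Categories: Computation and Language (cs.CL)

Comments: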

Abstract:Large Language Model agents face fundamental challenges in adapting to novel tasks due to limitations in tool availability and experience reuse. Existing approaches either rely on predefined tools with limited coverage or build tools from scratch without leveraging past experiences, leading to inefficient exploration and suboptimal performance. We introduce SMITH (Shared Memory Integrated Tool Hub), a unified cognitive architecture that seamlessly integrates dynamic tool creation with cross-task experience sharing through hierarchical memory organization. SMITH organizes agent memory into procedural, semantic, and episodic components, enabling systematic capability expansion while preserving successful execution patterns. Our approach formalizes tool creation as iterative code generation within controlled sandbox environments and experience sharing through episodic memory retrieval with semantic similarity matching. We further propose a curriculum learning strategy based on agent-ensemble difficulty re-estimation. Extensive experiments on the GAIA benchmark demonstrate SMITH’s effectiveness, achieving 81.8% Pass@1 accuracy and outperforming state-of-the-art baselines including Alita (75.2%) and Memento (70.9%). Our work establishes a foundation for building truly adaptive agents that continuously evolve their capabilities through principled integration of tool creation and experience accumulation.

[NLP-20] LegalRikai: Open Benchmark – A Benchmark for Complex Japanese Corporate Legal Tasks

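Quick read: This paper addresses the lack of realistic, complex evaluation benchmarks for Large Language Models (LLMs) in the legal domain, particularly for long-form structured output in Japanese corporate legal practice. The key to the solution is LegalRikai: Open Benchmark, created by legal professionals under the supervision of an attorney. It comprises four complex tasks that emulate real legal work, with 100 samples that require long-form, structured outputs, and it is evaluated with both human and automated assessment. The study finds that conventional short-text tasks miss model weaknesses in document-level editing. Automated evaluation aligns well with human judgment on criteria with clear linguistic grounding, while assessing structural consistency remains a challenge, so automated evaluation is useful as a screening tool when expert availability is limited.

Link: https://arxiv.org/abs/2512.11297

Authors: Shogo Fujita,Yuji Naraki,Yiqing Zhu,Shinsuke Mori

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: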

Abstract:This paper introduces LegalRikai: Open Benchmark, a new benchmark comprising four complex tasks that emulate Japanese corporate legal practices. The benchmark was created by legal professionals under the supervision of an attorney. This benchmark has 100 samples that require long-form, structured outputs, and we evaluated them against multiple practical criteria. We conducted both human and automated evaluations using leading LLMs, including GPT-5, Gemini 2.5 Pro, and Claude Opus 4.1. Our human evaluation revealed that abstract instructions prompted unnecessary modifications, highlighting model weaknesses in document-level editing that were missed by conventional short-text tasks. Furthermore, our analysis reveals that automated evaluation aligns well with human judgment on criteria with clear linguistic grounding, and assessing structural consistency remains a challenge. The result demonstrates the utility of automated evaluation as a screening tool when expert availability is limited. We propose a dataset evaluation framework to promote more practice-oriented research in the legal domain.

[NLP-21] CIP: A Plug-and-Play Causal Prompting Framework for Mitigating Hallucinations under Long-Context Noise

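Quick read: This paper addresses hallucination in large language models that process long and noisy retrieval contexts, which arises because the models rely on spurious correlations rather than genuine causal relationships. The key to the solution is CIP, a lightweight, plug-and-play causal prompting framework. CIP constructs a causal relation sequence among entities, actions, and events and injects it into the prompt to steer the model toward causally relevant evidence. Through causal intervention and counterfactual reasoning it suppresses non-causal reasoning paths, improving factual grounding, interpretability, and inference efficiency.

Link: https://arxiv.org/abs/2512.11282

Authors: Qingsen Ma,Dianyun Wang,Ran Jing,Yujun Sun,Zhenbo Xu

Affiliations: Beijing University of Posts and Telecommunications; Northwestern University

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: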

Abstract:Large language models often hallucinate when processing long and noisy retrieval contexts because they rely on spurious correlations rather than genuine causal relationships. We propose CIP, a lightweight and plug-and-play causal prompting framework that mitigates hallucinations at the input stage. CIP constructs a causal relation sequence among entities, actions, and events and injects it into the prompt to guide reasoning toward causally relevant evidence. Through causal intervention and counterfactual reasoning, CIP suppresses non-causal reasoning paths, improving factual grounding and interpretability. Experiments across seven mainstream language models, including GPT-4o, Gemini 2.0 Flash, and Llama 3.1, show that CIP consistently enhances reasoning quality and reliability, achieving a 2.6-point improvement in Attributable Rate, a 0.38 improvement in Causal Consistency Score, and a fourfold increase in effective information density. API-level profiling further shows that CIP accelerates contextual understanding and reduces end-to-end response latency by up to 55.1%. These results suggest that causal reasoning may serve as a promising paradigm for improving the explainability, stability, and efficiency of large language models.

[NLP-22] AdaSD: Adaptive Speculative Decoding for Efficient Language Model Inference

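Quick read: This paper addresses the significant slowdown in inference that comes with the growing parameter counts of Large Language Models (LLMs). Existing speculative decoding methods often require additional training, extensive hyperparameter tuning, or prior analysis of models and tasks, which limits deployment flexibility. The key to the solution is Adaptive Speculative Decoding (AdaSD), a hyperparameter-free scheme that dynamically adjusts generation length and acceptance criteria during inference. It introduces two adaptive thresholds, one that decides when to stop generating candidate tokens and one that decides whether a token is accepted, both updated in real time from token entropy and Jensen-Shannon distance. The method needs no pre-analysis or fine-tuning and works with off-the-shelf models. It achieves up to a 49% speedup over standard speculative decoding while keeping accuracy degradation under 2%.

Link: https://arxiv.org/abs/2512.11280

Authors: Kuan-Wei Lu,Ding-Yong Hong,Pangfeng Liu

Affiliations: Institute of Information Science, Academia Sinica; Department of Computer Science and Information Engineering, National Taiwan University

Categories: Computation and Language (cs.CL)

Comments: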

Abstract:Large language models (LLMs) have achieved remarkable performance across a wide range of tasks, but their increasing parameter sizes significantly slow down inference. Speculative decoding mitigates this issue by leveraging a smaller draft model to predict candidate tokens, which are then verified by a larger target model. However, existing approaches often require additional training, extensive hyperparameter tuning, or prior analysis of models and tasks before deployment. In this paper, we propose Adaptive Speculative Decoding (AdaSD), a hyperparameter-free decoding scheme that dynamically adjusts generation length and acceptance criteria during inference. AdaSD introduces two adaptive thresholds: one to determine when to stop candidate token generation and another to decide token acceptance, both updated in real time based on token entropy and Jensen-Shannon distance. This approach eliminates the need for pre-analysis or fine-tuning and is compatible with off-the-shelf models. Experiments on benchmark datasets demonstrate that AdaSD achieves up to 49% speedup over standard speculative decoding while limiting accuracy degradation to under 2%, making it a practical solution for efficient and adaptive LLM inference.
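
The two signals named above, token entropy and Jensen-Shannon distance, have standard definitions that can be computed directly from next-token distributions. The sketch below shows only those two quantities; how AdaSD turns them into its adaptive thresholds is not described in the abstract and is not reproduced here. Function names are illustrative.

```python
import math


def entropy(p):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(x * math.log2(x) for x in p if x > 0)


def js_distance(p, q):
    """Jensen-Shannon distance: square root of the JS divergence (base 2, range 0 to 1)."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        # b is the mixture m, so b > 0 wherever a > 0.
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    jsd = 0.5 * kl(p, m) + 0.5 * kl(q, m)
    return math.sqrt(max(jsd, 0.0))
```

In a speculative decoding setting, `p` would typically be the draft model's distribution and `q` the target model's: low entropy suggests the draft is confident, and a small distance suggests the two models agree.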

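[NLP-23] When Actions Teach You to Think: Reasoning-Action Synergy via Reinforcement Learning in Conversational Agents

Quick read: This paper addresses the weak generalization of Supervised Fine-Tuning (SFT) under data distribution shift, including cases where the new data does not fall completely outside the training domain. It also notes that high-quality reasoning traces are costly, subjective, and hard to scale, which limits SFT on complex tasks. The key to the solution is to use Reinforcement Learning (RL) so that the model learns reasoning strategies directly from task outcomes rather than from annotated reasoning. Specifically, the authors propose a pipeline based on Group Relative Policy Optimization (GRPO) with rewards designed around tool accuracy and answer correctness, which lets the LLM iteratively refine both its reasoning steps and its actions. The approach improves reasoning quality and tool-invocation precision, with a 1.5% relative improvement over the SFT model (trained without explicit thinking) and a 40% gain over the vanilla Qwen3-1.7B base model.

Link: https://arxiv.org/abs/2512.11277

Authors: Mrinal Rawat,Arkajyoti Chakraborty,Neha Gupta,Roberto Pieraccini

Affiliations: Uniphore

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Comments: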

Abstract:Supervised fine-tuning (SFT) has emerged as one of the most effective ways to improve the performance of large language models (LLMs) in downstream tasks. However, SFT can have difficulty generalizing when the underlying data distribution changes, even when the new data does not fall completely outside the training domain. Recent reasoning-focused models such as o1 and R1 have demonstrated consistent gains over their non-reasoning counterparts, highlighting the importance of reasoning for improved generalization and reliability. However, collecting high-quality reasoning traces for SFT remains challenging – annotations are costly, subjective, and difficult to scale. To address this limitation, we leverage Reinforcement Learning (RL) to enable models to learn reasoning strategies directly from task outcomes. We propose a pipeline in which LLMs generate reasoning steps that guide both the invocation of tools (e.g., function calls) and the final answer generation for conversational agents. Our method employs Group Relative Policy Optimization (GRPO) with rewards designed around tool accuracy and answer correctness, allowing the model to iteratively refine its reasoning and actions. Experimental results demonstrate that our approach improves both the quality of reasoning and the precision of tool invocations, achieving a 1.5% relative improvement over the SFT model (trained without explicit thinking) and a 40% gain compared to the base of the vanilla Qwen3-1.7B model. These findings demonstrate the promise of unifying reasoning and action learning through RL to build more capable and generalizable conversational agents.
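
The core of GRPO is that each sampled response is scored relative to the other responses in its group rather than against a learned value function. The sketch below shows that group normalization together with a toy reward. The reward weights `w_tool` and `w_answer` are hypothetical, since the abstract only says rewards are designed around tool accuracy and answer correctness.

```python
import statistics


def reward(tool_call_correct, answer_correct, w_tool=0.5, w_answer=0.5):
    """Toy scalar reward mixing tool accuracy and answer correctness (weights assumed)."""
    return w_tool * float(tool_call_correct) + w_answer * float(answer_correct)


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of sampled responses to the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    # eps keeps the division finite when every response gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]
```

Responses that beat their group's average get a positive advantage and are reinforced; when all responses score the same, every advantage is zero and the group contributes no gradient signal.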

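[NLP-24] Leveraging LLMs for Title and Abstract Screening for Systematic Review: A Cost-Effective Dynamic Few-Shot Learning Approach

Quick read: This paper addresses the high time and resource cost of title and abstract screening in systematic reviews, a burden that grows with the rapid increase in publications. The key to the solution is a two-stage dynamic few-shot learning (DFSL) approach: a low-cost large language model (LLM) performs the initial screening, and a high-performance LLM then re-evaluates the low-confidence instances. This preserves screening performance while controlling computational cost, improving the efficiency and scalability of systematic reviews.

Link: https://arxiv.org/abs/2512.11261

Authors: Yun-Chung Liu,Rui Yang,Jonathan Chong Kai Liew,Ziran Yin,Henry Foote,Christopher J. Lindsell,Chuan Hong

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: 22 pages, 3 figures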

Abstract:Systematic reviews are a key component of evidence-based medicine, playing a critical role in synthesizing existing research evidence and guiding clinical decisions. However, with the rapid growth of research publications, conducting systematic reviews has become increasingly burdensome, with title and abstract screening being one of the most time-consuming and resource-intensive steps. To mitigate this issue, we designed a two-stage dynamic few-shot learning (DFSL) approach aimed at improving the efficiency and performance of large language models (LLMs) in the title and abstract screening task. Specifically, this approach first uses a low-cost LLM for initial screening, then re-evaluates low-confidence instances using a high-performance LLM, thereby enhancing screening performance while controlling computational costs. We evaluated this approach across 10 systematic reviews, and the results demonstrate its strong generalizability and cost-effectiveness, with potential to reduce manual screening burden and accelerate the systematic review process in practical applications.
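
The two-stage routing described above can be sketched as a simple confidence cascade. Everything here is an assumption for illustration: the models are passed in as plain callables that return a `(label, confidence)` pair, the `threshold` value is arbitrary, and the dynamic selection of few-shot examples is not shown.

```python
def two_stage_screen(records, cheap_model, strong_model, threshold=0.8):
    """Screen records with a cheap model and escalate low-confidence cases.

    cheap_model / strong_model: callables mapping a record to (label, confidence).
    Returns the final labels and how many records were escalated.
    """
    decisions = []
    escalated = 0
    for record in records:
        label, confidence = cheap_model(record)
        if confidence < threshold:
            # Only uncertain records pay for the more expensive model.
            label, _ = strong_model(record)
            escalated += 1
        decisions.append(label)
    return decisions, escalated
```

The escalation count is a direct proxy for cost: the lower the share of records sent to the stronger model, the cheaper the screening run.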

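[NLP-25] Multi-Intent Spoken Language Understanding: Methods, Trends, and Challenges

Quick read: This paper addresses the lack of a systematic survey of multi-intent spoken language understanding (SLU), with the aim of organizing recent progress and guiding future work. The key to its contribution is an in-depth analysis of existing research along two dimensions, decoding paradigms and modeling approaches, together with a performance comparison of representative models that identifies their strengths and limitations. It closes with a discussion of current challenges and future research directions, giving the field a structured reference.

Link: https://arxiv.org/abs/2512.11258

Authors: Di Wu,Ruiyu Fang,Liting Jiang,Shuangyong Song,Xiaomeng Huang,Shiquan Wang,Zhongqiu Li,Lingling Shi,Mengjiao Bao,Yongxiang Li,Hao Huang

Affiliations: China Telecom; Xinjiang University

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: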

Abstract:Multi-intent spoken language understanding (SLU) involves two tasks: multiple intent detection and slot filling, which jointly handle utterances containing more than one intent. Owing to this characteristic, which closely reflects real-world applications, the task has attracted increasing research attention, and substantial progress has been achieved. However, there remains a lack of a comprehensive and systematic review of existing studies on multi-intent SLU. To this end, this paper presents a survey of recent advances in multi-intent SLU. We provide an in-depth overview of previous research from two perspectives: decoding paradigms and modeling approaches. On this basis, we further compare the performance of representative models and analyze their strengths and limitations. Finally, we discuss the current challenges and outline promising directions for future research. We hope this survey will offer valuable insights and serve as a useful reference for advancing research in multi-intent SLU.

[NLP-26] Adaptive Soft Rolling KV Freeze with Entropy-Guided Recovery: Sublinear Memory Growth for Efficient LLM Inference

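Quick read: This paper addresses the deployment bottleneck caused by the memory footprint of the key-value (KV) cache when Large Language Models (LLMs) generate long texts. Existing methods mostly use eviction-based cache management, which permanently discards part of the context and harms long-range dependency modeling. The proposed solution is Adaptive Soft Rolling KV Freeze with Entropy-Guided Recovery (ASR-KF-EGR). Its key idea is a reversible soft-freeze mechanism that identifies low-importance tokens within a sliding attention window and temporarily suspends their KV updates, while all tokens are preserved in off-GPU storage and restored on demand. The framework is extended with sublinear freeze scheduling, in which the freeze duration grows sublinearly with the number of times a token is repeatedly detected as low-importance, preventing over-aggressive compression. The method is training-free; preliminary experiments on LLaMA-3 8B show a 55-67% reduction in active KV cache size while maintaining generation quality and passing needle-in-haystack retrieval tests. It is architecture-agnostic and practical for memory-constrained deployment.

Link: https://arxiv.org/abs/2512.11221

Authors: Adilet Metinov,Gulida M. Kudakeeva,Bolotbek uulu Nursultan,Gulnara D. Kabaeva

Affiliations: Kyrgyz State Technical University named after I. Razzakov

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: 6 pages, 3 tables, 1 figure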

Abstract:We present Adaptive Soft Rolling KV Freeze with Entropy-Guided Recovery (ASR-KF-EGR), a training-free inference-time framework for efficient large language model generation. Our method introduces a reversible soft-freeze mechanism that temporarily suspends key-value (KV) updates for low-importance tokens identified within a sliding attention window. Unlike eviction-based approaches that permanently discard context, ASR-KF-EGR preserves all tokens in off-GPU storage and restores them on demand. We extend the framework with sublinear freeze scheduling, where freeze duration grows sublinearly with repeated low-importance detections, preventing over-aggressive compression. Preliminary experiments on LLaMA-3 8B demonstrate 55-67% reduction in active KV cache size while maintaining generation quality and passing needle-in-haystack retrieval tests. The method is architecture-agnostic, requires no fine-tuning, and provides a practical solution for memory-constrained deployment of long-context LLMs.
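
Sublinear freeze scheduling can be illustrated with a toy schedule. The abstract says only that the duration grows sublinearly with repeated low-importance detections; the square-root form, the `base_steps` constant, and the `SoftFreezeTracker` class below are hypothetical choices made for this sketch.

```python
import math


def freeze_duration(detections, base_steps=4):
    """Freeze length in decoding steps; grows like sqrt(detections) (assumed schedule)."""
    return int(base_steps * math.sqrt(detections))


class SoftFreezeTracker:
    """Tracks which tokens are temporarily frozen; nothing is ever evicted."""

    def __init__(self):
        self.counts = {}        # token id -> number of low-importance detections
        self.frozen_until = {}  # token id -> first step at which it is active again

    def mark_low_importance(self, token_id, step):
        self.counts[token_id] = self.counts.get(token_id, 0) + 1
        self.frozen_until[token_id] = step + freeze_duration(self.counts[token_id])

    def is_active(self, token_id, step):
        return step >= self.frozen_until.get(token_id, 0)
```

With this schedule, quadrupling the number of detections only doubles the freeze length, which is the kind of damping that keeps a repeatedly flagged token from being frozen for very long stretches.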

[NLP-27] FutureWeaver: Planning Test-Time Compute for Multi-Agent Systems with Modularized Collaboration

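Quick read: This paper addresses how to allocate compute efficiently in a multi-agent system under a fixed inference budget so as to improve collaborative performance, that is, how to extend test-time scaling to multi-agent collaboration. The key to the solution is FutureWeaver, a framework that automatically derives reusable multi-agent collaboration modules (modularized collaboration) through self-play reflection, and that uses a dual-level planning architecture to optimize compute allocation by reasoning over the current task state while speculating on future steps, maximizing collaborative effectiveness within the budget.

Link: https://arxiv.org/abs/2512.11213

Authors: Dongwon Jung,Peng Shi,Yi Zhang

Affiliations: University of California, Davis; University of Waterloo; Greenshoe, Inc.

Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: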

Abstract:Scaling test-time computation improves large language model performance without additional training. Recent work demonstrates that techniques such as repeated sampling, self-verification, and self-reflection can significantly enhance task success by allocating more inference-time compute. However, applying these techniques across multiple agents in a multi-agent system is difficult: there are no principled mechanisms to allocate compute to foster collaboration among agents, to extend test-time scaling to collaborative interactions, or to distribute compute across agents under explicit budget constraints. To address this gap, we propose FutureWeaver, a framework for planning and optimizing test-time compute allocation in multi-agent systems under fixed budgets. FutureWeaver introduces modularized collaboration, formalized as callable functions that encapsulate reusable multi-agent workflows. These modules are automatically derived through self-play reflection by abstracting recurring interaction patterns from past trajectories. Building on these modules, FutureWeaver employs a dual-level planning architecture that optimizes compute allocation by reasoning over the current task state while also speculating on future steps. Experiments on complex agent benchmarks demonstrate that FutureWeaver consistently outperforms baselines across diverse budget settings, validating its effectiveness for multi-agent collaboration in inference-time optimization.

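[NLP-28] SciLaD: A Large-Scale, Transparent, Reproducible Dataset for Natural Scientific Language Processing

Quick read: This paper addresses the scarcity of large-scale, high-quality datasets of scientific literature for Natural Language Processing (NLP) research on understanding and generating scholarly text. The key to the solution is SciLaD, an open and extensible dataset of scientific language built entirely with open-source frameworks and publicly available data sources. It comprises a curated English split of over 10 million scientific publications and a multilingual, unfiltered TEI XML split of more than 35 million publications, released together with the pipeline used to generate it. A RoBERTa model pre-trained on the dataset performs comparably to other scientific language models of similar size across a set of benchmarks, which validates the quality and utility of SciLaD and gives researchers a transparent, reproducible resource.

Link: https://arxiv.org/abs/2512.11192

Authors: Luca Foppiano,Sotaro Takeshita,Pedro Ortiz Suarez,Ekaterina Borisova,Raia Abu Ahmad,Malte Ostendorff,Fabio Barth,Julian Moreno-Schneider,Georg Rehm

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: 12 pages, 2 figures, 3 tables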

Abstract:SciLaD is a novel, large-scale dataset of scientific language constructed entirely using open-source frameworks and publicly available data sources. It comprises a curated English split containing over 10 million scientific publications and a multilingual, unfiltered TEI XML split including more than 35 million publications. We also publish the extensible pipeline for generating SciLaD. The dataset construction and processing workflow demonstrates how open-source tools can enable large-scale, scientific data curation while maintaining high data quality. Finally, we pre-train a RoBERTa model on our dataset and evaluate it across a comprehensive set of benchmarks, achieving performance comparable to other scientific language models of similar size, validating the quality and utility of SciLaD. We publish the dataset and evaluation pipeline to promote reproducibility, transparency, and further research in natural scientific language processing and understanding including scholarly document processing.

[NLP-29] FIBER: A Multilingual Evaluation Resource for Factual Inference Bias

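Quick read: This paper addresses the insufficient evaluation of factual accuracy and inference bias of Large Language Models (LLMs) in multilingual settings, with particular attention to the gap between single-entity and multi-entity questions and to the influence of the prompt language on model output. The key to the solution is FIBER, a multilingual benchmark covering sentence completion, question-answering, and object-count prediction tasks in English, Italian, and Turkish. It enables a systematic assessment of factual knowledge across languages and levels of entity complexity, and it reveals how the prompt language can bias entity selection toward the country associated with that language, with patterns that differ across languages.

Link: https://arxiv.org/abs/2512.11110

Authors: Evren Ayberk Munis,Deniz Yılmaz,Arianna Muti,Çağrı Toraman

Affiliations: Unknown

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: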

Abstract:Large language models are widely used across domains, yet there are concerns about their factual reliability and biases. Factual knowledge probing offers a systematic means to evaluate these aspects. Most existing benchmarks focus on single-entity facts and monolingual data. We therefore present FIBER, a multilingual benchmark for evaluating factual knowledge in single- and multi-entity settings. The dataset includes sentence completion, question-answering, and object-count prediction tasks in English, Italian, and Turkish. Using FIBER, we examine whether the prompt language induces inference bias in entity selection and how large language models perform on multi-entity versus single-entity questions. The results indicate that the language of the prompt can influence the model’s generated output, particularly for entities associated with the country corresponding to that language. However, this effect varies across different topics such that 31% of the topics exhibit factual inference bias score greater than 0.5. Moreover, the level of bias differs across languages such that Turkish prompts show higher bias compared to Italian in 83% of the topics, suggesting a language-dependent pattern. Our findings also show that models face greater difficulty when handling multi-entity questions than the single-entity questions. Model performance differs across both languages and model sizes. The highest mean average precision is achieved in English, while Turkish and Italian lead to noticeably lower scores. Larger models, including Llama-3.1-8B and Qwen-2.5-7B, show consistently better performance than smaller 3B-4B models.

[NLP-30] Explanation Bias is a Product: Revealing the Hidden Lexical and Position Preferences in Post-Hoc Feature Attribution

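Quick read: This paper addresses the inconsistency of feature attribution methods when they provide token-level explanations for language models: different methods can produce markedly different explanations for the same input, which leads some users to mistrust the explanations and others to trust them inappropriately. The key to the solution is a model- and method-agnostic evaluation framework with three metrics that systematically quantifies and structures the lexical bias and position bias of attribution methods. Experiments on artificial data and on natural data show that the two biases are structurally unbalanced across the compared transformer models, and that methods producing anomalous explanations are more likely to be biased themselves.

Link: https://arxiv.org/abs/2512.11108

Authors: Jonathan Kamp,Roos Bakker,Dominique Blok

Affiliations: Computational Linguistics and Text Mining Lab, Vrije Universiteit Amsterdam; TNO–The Netherlands Organization for Applied Scientific Research, The Hague; Leiden University Centre for Linguistics (LUCL), Leiden University, Leiden

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: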

Abstract:Good quality explanations strengthen the understanding of language models and data. Feature attribution methods, such as Integrated Gradient, are a type of post-hoc explainer that can provide token-level insights. However, explanations on the same input may vary greatly due to underlying biases of different methods. Users may be aware of this issue and mistrust their utility, while unaware users may trust them inadequately. In this work, we delve beyond the superficial inconsistencies between attribution methods, structuring their biases through a model- and method-agnostic framework of three evaluation metrics. We systematically assess both the lexical and position bias (what and where in the input) for two transformers; first, in a controlled, pseudo-random classification task on artificial data; then, in a semi-controlled causal relation detection task on natural data. We find that lexical and position biases are structurally unbalanced in our model comparison, with models that score high on one type score low on the other. We also find signs that methods producing anomalous explanations are more likely to be biased themselves.

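[NLP-31] Applying NLP to iMessages: Understanding Topic Avoidance, Responsiveness, and Sentiment

Quick read: This paper addresses users' limited awareness of the potential value of their own iMessage data, especially given the lack of tools for analyzing the locally stored file in depth. Its core question is how to use the single local file that Apple provides to iMessage users on Mac, which stores all messages and attached metadata, to examine communication behavior along several dimensions: topic modeling, response times, reluctance scoring, and sentiment analysis. The key to the solution is an iMessage text message analyzer that structures and quantifies the raw data in order to answer five main research questions, and that shows a feasible path for future studies of iMessage data.

Link: https://arxiv.org/abs/2512.11079

Authors: Alan Gerber,Sam Cooperman

Affiliations: Unknown

Categories: Computation and Language (cs.CL); Computers and Society (cs.CY); Applications (stat.AP); Other Statistics (stat.OT)

Comments: 11 pages, 18 figures, this https URL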

Abstract:What is your messaging data used for? While many users do not often think about the information companies can gather based off of their messaging platform of choice, it is nonetheless important to consider as society increasingly relies on short-form electronic communication. While most companies keep their data closely guarded, inaccessible to users or potential hackers, Apple has opened a door to their walled-garden ecosystem, providing iMessage users on Mac with one file storing all their messages and attached metadata. With knowledge of this locally stored file, the question now becomes: What can our data do for us? In the creation of our iMessage text message analyzer, we set out to answer five main research questions focusing on topic modeling, response times, reluctance scoring, and sentiment analysis. This paper uses our exploratory data to show how these questions can be answered using our analyzer and its potential in future studies on iMessage data.

[NLP-32] MultiScript30k: Leveraging Multilingual Embeddings to Extend Cross Script Parallel Data

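[Quick Read]: This paper addresses the lack of diversity in multimodal machine translation (MMT) research caused by the limited language coverage of existing datasets. The original Multi30k dataset covers only four European languages written in Latin script (Czech, English, French, and German), which limits research on non-European languages and other writing systems. To extend coverage, the authors propose MultiScript30k, a new extension created by translating the English version of Multi30k (Multi30k-En) into Arabic (Ar), Spanish (Es), Ukrainian (Uk), Simplified Chinese (Zh_Hans), and Traditional Chinese (Zh_Hant) with the NLLB200-3.3B model. Its key contribution is using a large neural translation model to generate parallel text across multiple languages and scripts (including Arabic, Cyrillic, and Chinese characters), and checking translation quality through semantic similarity (cosine similarity > 0.8) and distributional difference (symmetric KL divergence < 0.000251), thresholds that hold for all supported languages except Zh_Hant.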

Link: https://arxiv.org/abs/2512.11074

Authors: Christopher Driggers-Ellis, Detravious Brinkley, Ray Chen, Aashish Dhawan, Daisy Zhe Wang, Christan Grant

Affiliation: Unknown

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG); Multimedia (cs.MM)

Comments: 7 pages, 2 figures, 5 tables. Not published at any conference at this time

Abstract:Multi30k is frequently cited in the multimodal machine translation (MMT) literature, offering parallel text data for training and fine-tuning deep learning models. However, it is limited to four languages: Czech, English, French, and German. This restriction has led many researchers to focus their investigations only on these languages. As a result, MMT research on diverse languages has been stalled because the official Multi30k dataset only represents European languages in Latin scripts. Previous efforts to extend Multi30k exist, but the list of supported languages, represented language families, and scripts is still very short. To address these issues, we propose MultiScript30k, a new Multi30k dataset extension for global languages in various scripts, created by translating the English version of Multi30k (Multi30k-En) using NLLB200-3.3B. The dataset consists of over 30000 sentences and provides translations of all sentences in Multi30k-En into Ar, Es, Uk, Zh_Hans and Zh_Hant. Similarity analysis shows that Multi30k extension consistently achieves greater than 0.8 cosine similarity and symmetric KL divergence less than 0.000251 for all languages supported except Zh_Hant which is comparable to the previous Multi30k extensions ArEnMulti30k and Multi30k-Uk. COMETKiwi scores reveal mixed assessments of MultiScript30k as a translation of Multi30k-En in comparison to the related work. ArEnMulti30k scores nearly equal MultiScript30k-Ar, but Multi30k-Uk scores 6.4% greater than MultiScript30k-Uk per split.
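
The two similarity measures named in the abstract can be sketched in plain Python. The abstract does not say which embeddings or distributions the measures are computed over, so the inputs below are hypothetical. Symmetric KL divergence is taken here as the sum of the two directed divergences, which is an assumption; some works use the average instead.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; q must be positive wherever p is."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def symmetric_kl(p, q):
    """Sum of both KL directions (assumed definition for this sketch)."""
    return kl_divergence(p, q) + kl_divergence(q, p)


# Hypothetical sentence embeddings and distributions, for illustration only.
source_embedding = [0.2, 0.7, 0.1]
translation_embedding = [0.25, 0.65, 0.1]
print(cosine_similarity(source_embedding, translation_embedding))
print(symmetric_kl([0.5, 0.3, 0.2], [0.45, 0.35, 0.2]))
```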

[NLP-33] PIAST: Rapid Prompting with In-context Augmentation for Scarce Training data

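[Quick Read]: This paper addresses the high sensitivity of large language models (LLMs) to prompt design, in particular the difficulty of handcrafting effective prompts and the reliance on intricate few-shot examples. The key to its solution is a fast automatic prompt construction algorithm that augments human instructions with a small set of generated few-shot examples, estimates each example's utility with Monte Carlo Shapley estimation, and iteratively replaces, drops, or keeps examples. Aggressive subsampling and a replay buffer speed up evaluation. The experiments indicate that carefully constructed examples, rather than exhaustive instruction search, are the main lever for fast, data-efficient prompt engineering.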

Link: https://arxiv.org/abs/2512.11013

Authors: Pawel Batorski, Paul Swoboda

Affiliation: Heinrich Heine Universität Düsseldorf

Categories: Computation and Language (cs.CL)

Comments:

Abstract:LLMs are highly sensitive to prompt design, but handcrafting effective prompts is difficult and often requires intricate crafting of few-shot examples. We propose a fast automatic prompt construction algorithm that augments human instructions by generating a small set of few shot examples. Our method iteratively replaces/drops/keeps few-shot examples using Monte Carlo Shapley estimation of example utility. For faster execution, we use aggressive subsampling and a replay buffer for faster evaluations. Our method can be run using different compute time budgets. On a limited budget, we outperform existing automatic prompting methods on text simplification and GSM8K and obtain second best results on classification and summarization. With an extended, but still modest compute budget we set a new state of the art among automatic prompting methods on classification, simplification and GSM8K. Our results show that carefully constructed examples, rather than exhaustive instruction search, are the dominant lever for fast and data efficient prompt engineering. Our code is available at this https URL.
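
To make the Monte Carlo Shapley step concrete, here is a minimal sketch of permutation-sampling Shapley estimation over a set of few-shot examples. It is not the authors' implementation: the function name `monte_carlo_shapley`, the example identifiers, and the additive toy `utility` are all hypothetical, and in practice the utility would be some task score obtained by prompting a model with the chosen examples.

```python
import random


def monte_carlo_shapley(items, utility, num_permutations=200, seed=0):
    """Estimate Shapley values by averaging marginal gains over random orderings.

    `utility` maps a tuple of items to a float score (assumed interface).
    """
    rng = random.Random(seed)
    totals = {item: 0.0 for item in items}
    for _ in range(num_permutations):
        order = list(items)
        rng.shuffle(order)
        prefix = []
        prev_value = utility(tuple(prefix))
        for item in order:
            prefix.append(item)
            value = utility(tuple(prefix))
            # Marginal contribution of `item` given the items placed before it.
            totals[item] += value - prev_value
            prev_value = value
    return {item: total / num_permutations for item, total in totals.items()}


# Toy additive utility: each hypothetical example adds a fixed amount of score.
weights = {"ex_a": 0.30, "ex_b": 0.10, "ex_c": -0.20}


def additive_utility(subset):
    return sum(weights[name] for name in subset)


values = monte_carlo_shapley(list(weights), additive_utility, num_permutations=50)
print(values)
```

With an additive utility the estimates match the per-example weights, which makes the sketch easy to check. An example with a negative estimate, such as `ex_c` here, would be a natural candidate for the drop or replace step.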

[NLP-34] KBQA-R1: Reinforcing Large Language Models for Knowledge Base Question Answering

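[Quick Read]: This paper addresses two core failure modes of large language models (LLMs) in Knowledge Base Question Answering (KBQA): generating hallucinated queries without verifying that the referenced schema exists in the knowledge graph (KG), and rigid, template-based reasoning that lacks real comprehension of the environment. The key to the solution is the KBQA-R1 framework, which models KBQA as a multi-turn decision process and optimizes the interaction policy with reinforcement learning, using Group Relative Policy Optimization (GRPO) to refine the policy from concrete execution feedback rather than static supervision. It also introduces Referenced Rejection Sampling (RRS), a data synthesis method that addresses cold-start challenges by strictly aligning reasoning traces with ground-truth action sequences, grounding LLM reasoning in verifiable execution.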

Link: https://arxiv.org/abs/2512.10999

Authors: Xin Sun, Zhongqi Chen, Xing Zheng, Qiang Liu, Shu Wu, Bowen Song, Zilei Wang, Weiqiang Wang, Liang Wang

Affiliation: Institute of Automation, Chinese Academy of Sciences; Ant Group; University of Science and Technology of China

Categories: Computation and Language (cs.CL)

Comments:

Abstract:Knowledge Base Question Answering (KBQA) challenges models to bridge the gap between natural language and strict knowledge graph schemas by generating executable logical forms. While Large Language Models (LLMs) have advanced this field, current approaches often struggle with a dichotomy of failure: they either generate hallucinated queries without verifying schema existence or exhibit rigid, template-based reasoning that mimics synthesized traces without true comprehension of the environment. To address these limitations, we present KBQA-R1, a framework that shifts the paradigm from text imitation to interaction optimization via Reinforcement Learning. Treating KBQA as a multi-turn decision process, our model learns to navigate the knowledge base using a list of actions, leveraging Group Relative Policy Optimization (GRPO) to refine its strategies based on concrete execution feedback rather than static supervision. Furthermore, we introduce Referenced Rejection Sampling (RRS), a data synthesis method that resolves cold-start challenges by strictly aligning reasoning traces with ground-truth action sequences. Extensive experiments on WebQSP, GrailQA, and GraphQuestions demonstrate that KBQA-R1 achieves state-of-the-art performance, effectively grounding LLM reasoning in verifiable execution.
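
The group-relative part of GRPO can be sketched in a few lines: several rollouts are sampled for the same question, and each rollout's reward is normalized against the group's mean and standard deviation. The sketch below shows only that advantage computation under stated assumptions (population standard deviation, a small `eps` for stability, binary execution rewards); it is not the KBQA-R1 training loop, and the reward design is hypothetical.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own sampling group.

    Uses the population standard deviation; real implementations may use
    the sample standard deviation instead (assumption for this sketch).
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four rollouts on one question:
# 1.0 if the executed logical form returned the gold answer, else 0.0.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

When every rollout in a group gets the same reward, all advantages are zero and that group contributes no learning signal.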

[NLP-35] SCOUT: A Defense Against Data Poisoning Attacks in Fine-Tuned Language Models

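[Quick Read]: This paper addresses the security threat that backdoor attacks pose to language models deployed in healthcare and other sensitive domains, especially stealthy attacks that use contextually-appropriate triggers and therefore evade conventional defenses based on detecting out-of-context anomalies. The key to the solution is a new defense framework, SCOUT (Saliency-based Classification Of Untrusted Tokens), which identifies backdoor triggers through token-level saliency analysis rather than traditional context-based detection. SCOUT builds a saliency map that quantifies how removing each token affects the model's output logits for the target label, allowing it to detect both conspicuous and subtle manipulation while preserving accuracy on clean inputs.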

Link: https://arxiv.org/abs/2512.10998

Authors: Mohamed Afane, Abhishek Satyam, Ke Chen, Tao Li, Junaid Farooq, Juntao Chen

Affiliation: Fordham University; Zhejiang University; City University of Hong Kong; University of Michigan-Dearborn

Categories: Cryptography and Security (cs.CR); Computation and Language (cs.CL)

Comments: 9 pages, 3 figures

Abstract:Backdoor attacks create significant security threats to language models by embedding hidden triggers that manipulate model behavior during inference, presenting critical risks for AI systems deployed in healthcare and other sensitive domains. While existing defenses effectively counter obvious threats such as out-of-context trigger words and safety alignment violations, they fail against sophisticated attacks using contextually-appropriate triggers that blend seamlessly into natural language. This paper introduces three novel contextually-aware attack scenarios that exploit domain-specific knowledge and semantic plausibility: the ViralApp attack targeting social media addiction classification, the Fever attack manipulating medical diagnosis toward hypertension, and the Referral attack steering clinical recommendations. These attacks represent realistic threats where malicious actors exploit domain-specific vocabulary while maintaining semantic coherence, demonstrating how adversaries can weaponize contextual appropriateness to evade conventional detection methods. To counter both traditional and these sophisticated attacks, we present SCOUT (Saliency-based Classification Of Untrusted Tokens), a novel defense framework that identifies backdoor triggers through token-level saliency analysis rather than traditional context-based detection methods. SCOUT constructs a saliency map by measuring how the removal of individual tokens affects the model’s output logits for the target label, enabling detection of both conspicuous and subtle manipulation attempts. We evaluate SCOUT on established benchmark datasets (SST-2, IMDB, AG News) against conventional attacks (BadNet, AddSent, SynBkd, StyleBkd) and our novel attacks, demonstrating that SCOUT successfully detects these sophisticated threats while preserving accuracy on clean inputs.
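
The saliency map described in the abstract, measuring how removing each token changes the target-label logit, can be illustrated with a leave-one-out sketch. The toy scoring function, its weights, and the trigger token `cf` are hypothetical stand-ins for a real fine-tuned classifier. The abstract does not detail how SCOUT turns saliency scores into a trigger decision, so that step is omitted here.

```python
def leave_one_out_saliency(tokens, target_logit):
    """Saliency of token i = target logit on the full input minus the logit without token i."""
    base = target_logit(tokens)
    scores = []
    for i in range(len(tokens)):
        reduced = tokens[:i] + tokens[i + 1:]
        scores.append(base - target_logit(reduced))
    return scores


# Toy stand-in for a poisoned classifier: a bag-of-words logit in which the
# hypothetical trigger "cf" dominates the target label.
TOY_WEIGHTS = {"cf": 4.0, "great": 1.0, "boring": -1.0}


def toy_target_logit(tokens):
    return sum(TOY_WEIGHTS.get(token, 0.0) for token in tokens)


tokens = ["the", "movie", "was", "boring", "cf"]
saliency = leave_one_out_saliency(tokens, toy_target_logit)
most_salient = tokens[max(range(len(tokens)), key=lambda i: saliency[i])]
print(list(zip(tokens, saliency)), most_salient)
```

A token whose removal causes an unusually large drop in the target logit, as `cf` does in this toy input, is the kind of signal a saliency-based defense can inspect. Each input needs one forward pass per token, which is the main cost of this approach.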

[NLP-36] MedBioRAG: Semantic Search and Retrieval-Augmented Generation with Large Language Models for Medical and Biological QA ACL2025

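[Quick Read]: This paper addresses the limited performance of large language models (LLMs) on biomedical question answering (Biomedical QA), which stems from knowledge gaps and insufficient contextual understanding. The key to the solution is MedBioRAG, a retrieval-augmented generation (RAG) framework that combines semantic search and lexical search, document retrieval, and supervised fine-tuning. By retrieving and ranking relevant biomedical documents, it improves the accuracy and contextual relevance of answers, and it outperforms previous state-of-the-art (SoTA) models and the GPT-4o base model on benchmark datasets such as NFCorpus, TREC-COVID, MedQA, PubMedQA, and BioASQ.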

Link: https://arxiv.org/abs/2512.10996

Authors: Seonok Kim

Affiliation: Unknown

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: Submitted to ACL 2025. 9 pages, 4 figures, 5 tables (including 2 appendix tables)

Abstract:Recent advancements in retrieval-augmented generation (RAG) have significantly enhanced the ability of large language models (LLMs) to perform complex question-answering (QA) tasks. In this paper, we introduce MedBioRAG, a retrieval-augmented model designed to improve biomedical QA performance through a combination of semantic and lexical search, document retrieval, and supervised fine-tuning. MedBioRAG efficiently retrieves and ranks relevant biomedical documents, enabling precise and context-aware response generation. We evaluate MedBioRAG across text retrieval, close-ended QA, and long-form QA tasks using benchmark datasets such as NFCorpus, TREC-COVID, MedQA, PubMedQA, and BioASQ. Experimental results demonstrate that MedBioRAG outperforms previous state-of-the-art (SoTA) models and the GPT-4o base model in all evaluated tasks. Notably, our approach improves NDCG and MRR scores for document retrieval, while achieving higher accuracy in close-ended QA and ROUGE scores in long-form QA. Our findings highlight the effectiveness of semantic search-based retrieval and LLM fine-tuning in biomedical applications.
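
For readers unfamiliar with the two retrieval metrics named in the abstract, the following sketch computes reciprocal rank and NDCG@k for a single query. The document IDs and relevance grades are hypothetical, and DCG is computed with linear gains; some evaluation toolkits use exponential gains (`2**rel - 1`) instead, so absolute values can differ from those reported by a given benchmark.

```python
import math


def reciprocal_rank(ranked_ids, relevant_ids):
    """1 / rank of the first relevant document, or 0.0 if none is retrieved."""
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant_ids:
            return 1.0 / rank
    return 0.0


def dcg_at_k(gains, k):
    """Discounted cumulative gain with linear gains and a log2 rank discount."""
    return sum(gain / math.log2(i + 2) for i, gain in enumerate(gains[:k]))


def ndcg_at_k(ranked_ids, relevance, k):
    """DCG of the ranking divided by the DCG of the ideal ordering."""
    gains = [relevance.get(doc_id, 0) for doc_id in ranked_ids]
    ideal_gains = sorted(relevance.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal_gains, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical graded relevance judgments and a system ranking for one query.
relevance = {"d1": 2, "d2": 1}
ranking = ["d2", "d3", "d1"]
print(reciprocal_rank(ranking, {"d1"}), ndcg_at_k(ranking, relevance, k=3))
```

MRR is the mean of `reciprocal_rank` over all queries in a benchmark, and NDCG@k is averaged over queries in the same way.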

[NLP-37] Benchmarking Automatic Speech Recognition Models for African Languages

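[Quick Read]: This paper addresses two core problems facing automatic speech recognition (ASR) for African languages under low-resource conditions: scarce labeled data that limits model performance, and the lack of systematic guidance on model selection, data scaling, and decoding strategies. The key to its solution is a unified benchmark of four state-of-the-art ASR models (MMS, W2v-BERT, XLS-R, and Whisper) across 13 African languages. The models are fine-tuned on progressively larger subsets ranging from 1 to 400 hours, which quantifies how each model behaves at different data scales and reveals how pre-training coverage, model architecture, data domain, and resource availability interact, providing practical guidance for building ASR systems in low-resource settings.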

Link: https://arxiv.org/abs/2512.10968

Authors: Alvin Nahabwe, Sulaiman Kagumire, Denis Musinguzi, Bruno Beijuka, Jonah Mubuuke Kyagaba, Peter Nabende, Andrew Katumba, Joyce Nakatumba-Nabende

Affiliation: Makerere University Centre for Artificial Intelligence; Marconi Lab; Makerere University; Department of Information Systems; Department of Electrical Engineering; Department of Computer Science

Categories: Computation and Language (cs.CL); Sound (cs.SD); Audio and Speech Processing (eess.AS)

Comments: 19 pages, 8 figures, Deep Learning Indaba, Proceedings of Machine Learning Research