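This blog post lists the latest papers retrieved from arXiv.org on 2026-01-28. It is updated automatically and organized into five broad areas: NLP, CV, ML, AI, and IR. If you would like to receive it by email on a regular schedule, please leave your email address in the comments.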

Note: Daily paper data is fetched from arXiv.org and updated automatically at around 12:00 each day.

Friendly reminder: If you would like to receive the daily paper data by email, please leave your email address in the comments.


Overview (2026-01-28)

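A total of 562 papers were updated today, including:

- Natural Language Processing: 64 papers (Computation and Language (cs.CL))

- Artificial Intelligence: 159 papers (Artificial Intelligence (cs.AI))

- Computer Vision: 91 papers (Computer Vision and Pattern Recognition (cs.CV))

- Machine Learning: 175 papers (Machine Learning (cs.LG))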

Natural Language Processing

[NLP-0] Evaluation of Oncotimia: An LLM based system for supporting tumour boards

[Quick Read]: This paper addresses the heavy documentation burden that multidisciplinary tumour boards (MDTBs) face in lung cancer decision-making, where large volumes of heterogeneous clinical information must be processed by hand. The key to the solution is ONCOTIMIA, a modular and secure clinical tool that integrates generative AI (GenAI) and uses large language models (LLMs) to complete lung tumour board forms automatically. The system combines a multi-layer data lake, hybrid relational and vector storage, retrieval-augmented generation (RAG), and a rule-driven adaptive form model to turn unstructured clinical text into structured, standardized tumour board records. In an evaluation of six LLMs on ten lung cancer cases, the best configuration filled 80% of fields correctly, supporting the tool's potential to substantially reduce documentation workload while preserving data quality.

Link: https://arxiv.org/abs/2601.19899

Authors: Luis Lorenzo, Marcos Montana-Mendez, Sergio Figueiras, Miguel Boubeta, Cristobal Bernardo-Castineira

Affiliations: Bahía Software SLU

Categories: Computation and Language (cs.CL)

Comments: 9 pages, 2 figures

Abstract: Multidisciplinary tumour boards (MDTBs) play a central role in oncology decision-making but require manual processing and structuring of large volumes of heterogeneous clinical information, resulting in a substantial documentation burden. In this work, we present ONCOTIMIA, a modular and secure clinical tool designed to integrate generative artificial intelligence (GenAI) into oncology workflows and evaluate its application to the automatic completion of lung cancer tumour board forms using large language models (LLMs). The system combines a multi-layer data lake, hybrid relational and vector storage, retrieval-augmented generation (RAG) and a rule-driven adaptive form model to transform unstructured clinical documentation into structured and standardised tumour board records. We assess the performance of six LLMs deployed through AWS Bedrock on ten lung cancer cases, measuring both form completion accuracy and end-to-end latency. The results demonstrate high performance across models, with the best-performing configuration achieving 80% correct field completion and clinically acceptable response times for most LLMs. Larger and more recent models achieve the best accuracy without incurring prohibitive latency. These findings provide empirical evidence that LLM-assisted form autocompletion is technically feasible and operationally viable in multidisciplinary lung cancer workflows, and support its potential to significantly reduce documentation burden while preserving data quality.
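The headline result is a share of form fields completed correctly. Below is a minimal sketch of how a field-level accuracy of that kind could be computed. It assumes case-insensitive exact matching and treats a missing field as wrong. The field names and values are hypothetical, and the paper's own matching criteria may differ.

```python
from typing import Dict, List


def field_accuracy(predicted: List[Dict[str, str]], gold: List[Dict[str, str]]) -> float:
    """Share of gold form fields whose predicted value matches (case-insensitive exact match).

    Each list element is one case's form, mapping field name -> value.
    A field missing from the prediction counts as incorrect.
    """
    if len(predicted) != len(gold):
        raise ValueError("need one predicted form per gold form")
    correct = total = 0
    for pred_form, gold_form in zip(predicted, gold):
        for field, gold_value in gold_form.items():
            total += 1
            pred_value = pred_form.get(field, "")
            if pred_value.strip().lower() == gold_value.strip().lower():
                correct += 1
    return correct / total if total else 0.0


# Hypothetical example: two lung cancer cases, three fields each.
gold = [
    {"histology": "adenocarcinoma", "stage": "IIIA", "ecog": "1"},
    {"histology": "squamous cell carcinoma", "stage": "IB", "ecog": "0"},
]
predicted = [
    {"histology": "Adenocarcinoma", "stage": "IIB", "ecog": "1"},
    {"histology": "squamous cell carcinoma", "stage": "IB"},  # ecog left empty
]
print(field_accuracy(predicted, gold))
```

Four of the six fields match in this toy example, so the score is 4/6. In a clinical setting, a stricter or more lenient matcher (for example, one that maps staging synonyms together) would change the number.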


[NLP-1] Post-LayerNorm Is Back: Stable ExpressivE and Deep

[Quick Read]: This paper tackles training instability when scaling large language models (LLMs) in depth. Depth scaling offers theoretically superior expressivity, but current Transformer architectures struggle to train reliably at extreme depths. Modern LLMs replaced Post-LayerNorm (Post-LN) with Pre-LayerNorm (Pre-LN) because Post-LN is unstable at scale. The authors trace that instability to the ResNet-style residual pathway, which causes gradient vanishing in deep networks. The key contribution is Keel, a Post-LN Transformer that replaces this residual path with a Highway-style connection. The change preserves gradient flow through the residual branch and stops the signal from vanishing between the top and bottom layers, without specialized initialization or complex optimization tricks. Keel trains stably at depths beyond 1000 layers and consistently improves perplexity and depth-scaling behaviour over Pre-LN. This suggests a simple foundation for deeply scalable LLMs and possibly for future infinite-depth architectures.

Link: https://arxiv.org/abs/2601.19895

Authors: Chen Chen, Lai Wei

Affiliations: ByteDance Seed

Categories: Machine Learning (cs.LG); Computation and Language (cs.CL)

Comments:

Abstract: Large language model (LLM) scaling is hitting a wall. Widening models yields diminishing returns, and extending context length does not improve fundamental expressivity. In contrast, depth scaling offers theoretically superior expressivity, yet current Transformer architectures struggle to train reliably at extreme depths. We revisit the Post-LayerNorm (Post-LN) formulation, whose instability at scale caused its replacement by Pre-LN in modern LLMs. We show that the central failure mode of Post-LN arises from the ResNet-style residual pathway, which introduces gradient vanishing in deep networks. We present Keel, a Post-LN Transformer that replaces this residual path with a Highway-style connection. This modification preserves the gradient flow through the residual branch, preventing signal vanishing from the top layers to the bottom. Unlike prior methods, Keel enables stable training at extreme depths without requiring specialized initialization or complex optimization tricks. Keel trains robustly at depths exceeding 1000 layers and consistently improves perplexity and depth-scaling characteristics over Pre-LN. These findings indicate that Post-LN, when paired with a Highway-style connection, provides a simple and effective foundation for building deeply scalable LLMs, opening the possibility for future infinite-depth architectures.
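The abstract does not give Keel's exact equations, so what follows is only a rough illustration. It contrasts a classic Post-LN residual block with a Post-LN block whose residual path is replaced by the textbook highway-network gate. The gate parametrization, the NumPy stand-in sublayer, and every name below are assumptions, not the paper's implementation.

```python
import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalise each row to zero mean and unit variance (no learnable scale/shift)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def post_ln_residual(x, sublayer):
    """Classic Post-LN block: x_{l+1} = LN(x_l + F(x_l))."""
    return layer_norm(x + sublayer(x))


def post_ln_highway(x, sublayer, w_gate, b_gate):
    """Post-LN block with a highway-style connection:
    x_{l+1} = LN(T(x) * F(x) + (1 - T(x)) * x), where T(x) = sigmoid(x W_T + b_T)."""
    gate = sigmoid(x @ w_gate + b_gate)
    return layer_norm(gate * sublayer(x) + (1.0 - gate) * x)


rng = np.random.default_rng(0)
d_model = 8
x = rng.normal(size=(4, d_model))                      # 4 tokens
w_ff = rng.normal(scale=0.1, size=(d_model, d_model))
feed_forward = lambda h: np.tanh(h @ w_ff)              # stand-in for an attention/MLP sublayer
w_gate = rng.normal(scale=0.1, size=(d_model, d_model))
b_gate = np.zeros(d_model)

y_res = post_ln_residual(x, feed_forward)
y_hwy = post_ln_highway(x, feed_forward, w_gate, b_gate)
```

The only structural difference between the two blocks is the learned gate. It lets each layer decide, per feature, how much of its input to carry through unchanged and how much to transform, instead of always adding the two with equal weight before normalizing.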


[NLP-2] Reflective Translation: Improving Low-Resource Machine Translation via Structured Self-Reflection NEURIPS2025 AAAI2025

[Quick Read]: This paper addresses poor machine translation quality for low-resource languages such as isiZulu and isiXhosa, which stems from scarce parallel data and linguistic resources. The key to the solution is Reflective Translation, a prompt-based framework in three steps: the model produces an initial translation, writes a structured self-critique, and then uses that reflection to generate a refined translation. The approach requires no fine-tuning and is model-agnostic. The authors test it on English-isiZulu and English-isiXhosa using OPUS-100 and NTREX-African. Second-pass translations improve consistently over first-pass ones in both BLEU and COMET, with average gains of up to +0.22 BLEU and +0.18 COMET, and paired nonparametric tests confirm these gains are statistically significant.

Link: https://arxiv.org/abs/2601.19871

Authors: Nicholas Cheng

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: 12 pages, 3 figures, 6 tables. Accepted to the NeurIPS 2025 Workshop on Multilingual Representation Learning (Mexico City) and the AAAI 2025 Workshop on Language Models for Under-Resourced Communities (LM4UC). Code and data available at: this https URL

Abstract: Low-resource languages such as isiZulu and isiXhosa face persistent challenges in machine translation due to limited parallel data and linguistic resources. Recent advances in large language models suggest that self-reflection, prompting a model to critique and revise its own outputs, can improve reasoning quality and factual consistency. Building on this idea, this paper introduces Reflective Translation, a prompt-based framework in which a model generates an initial translation, produces a structured self-critique, and then uses this reflection to generate a refined translation. The approach is evaluated on English-isiZulu and English-isiXhosa translation using OPUS-100 and NTREX-African, across multiple prompting strategies and confidence thresholds. Results show consistent improvements in both BLEU and COMET scores between first- and second-pass translations, with average gains of up to +0.22 BLEU and +0.18 COMET. Statistical significance testing using paired nonparametric tests confirms that these improvements are robust. The proposed method is model-agnostic, requires no fine-tuning, and introduces a reflection-augmented dataset that can support future supervised or analysis-driven work. These findings demonstrate that structured self-reflection is a practical and effective mechanism for improving translation quality in low-resource settings.
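Here is a minimal sketch of the draft, critique, and refine loop, written against any text-in/text-out model callable. The prompt wording, the `llm` callable, and the stub model are hypothetical. The paper's actual prompts, prompting strategies, and confidence thresholds are not reproduced here.

```python
from typing import Callable, Dict


def reflective_translate(source: str, target_lang: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Draft -> structured self-critique -> refined translation, all via prompting (no fine-tuning)."""
    draft = llm(f"Translate the following English text into {target_lang}:\n{source}")
    critique = llm(
        "Critique the translation below. List (1) mistranslated words, "
        "(2) omissions or additions, (3) grammar and fluency problems.\n"
        f"Source: {source}\nTranslation: {draft}"
    )
    refined = llm(
        f"Rewrite the {target_lang} translation, fixing every issue raised in the critique.\n"
        f"Source: {source}\nDraft: {draft}\nCritique: {critique}"
    )
    return {"draft": draft, "critique": critique, "refined": refined}


# Stand-in model that records prompts; replace with a real LLM client.
prompts = []


def fake_llm(prompt: str) -> str:
    prompts.append(prompt)
    return f"output-{len(prompts)}"


result = reflective_translate("Good morning, how are you?", "isiZulu", fake_llm)
```

Because the critique step receives the draft and the rewrite step receives both the draft and the critique, the loop is model-agnostic. Any chat model can be plugged in as `llm`.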


[NLP-3] Identifying and Transferring Reasoning-Critical Neurons: Improving LLM Inference Reliability via Activation Steering

[Quick Read]: This paper addresses the limited reliability of large language models (LLMs) on complex reasoning tasks. The goal is to improve reasoning accuracy without post-training or computationally expensive sampling strategies. The authors first show that a small subset of neurons strongly predicts whether reasoning is correct. Building on this, the key contribution is AdaRAS (Adaptive Reasoning Activation Steering), a lightweight test-time framework. AdaRAS identifies these Reasoning-Critical Neurons (RCNs) with a polarity-aware mean-difference criterion and adaptively steers their activations during inference. The intervention corrects incorrect reasoning traces while avoiding degradation on cases that are already correct. Across 10 mathematics and coding benchmarks it yields consistent gains, including more than 13% on AIME-24 and AIME-25, and it transfers across datasets and to stronger models.

Link: https://arxiv.org/abs/2601.19847

Authors: Fangan Dong, Zuming Yan, Xuri Ge, Zhiwei Xu, Mengqi Zhang, Xuanang Chen, Ben He, Xin Xin, Zhumin Chen, Ying Zhou

Affiliations: Shandong University; Institute of Software, Chinese Academy of Sciences; University of Chinese Academy of Sciences

Categories: Computation and Language (cs.CL)

Comments:

Abstract: Despite the strong reasoning capabilities of recent large language models (LLMs), achieving reliable performance on challenging tasks often requires post-training or computationally expensive sampling strategies, limiting their practical efficiency. In this work, we first show that a small subset of neurons in LLMs exhibits strong predictive correlations with reasoning correctness. Based on this observation, we propose AdaRAS (Adaptive Reasoning Activation Steering), a lightweight test-time framework that improves reasoning reliability by selectively intervening on neuron activations. AdaRAS identifies Reasoning-Critical Neurons (RCNs) via a polarity-aware mean-difference criterion and adaptively steers their activations during inference, enhancing incorrect reasoning traces while avoiding degradation on already-correct cases. Experiments on 10 mathematics and coding benchmarks demonstrate consistent improvements, including over 13% gains on AIME-24 and AIME-25. Moreover, AdaRAS exhibits strong transferability across datasets and scalability to stronger models, outperforming post-training methods without additional training or sampling cost.
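The sketch below illustrates neuron selection by a signed mean difference followed by fixed-strength steering. It is a simplified stand-in, not the paper's method. The plain signed difference substitutes for the "polarity-aware mean-difference criterion", whose exact form is not given in the abstract. The constant `alpha` replaces AdaRAS's adaptive steering, and the activations are synthetic.

```python
import numpy as np


def select_rcns(acts_correct: np.ndarray, acts_incorrect: np.ndarray, k: int):
    """Pick k neurons whose mean activation differs most between correct and incorrect traces.

    acts_*: (num_traces, num_neurons). Returns neuron indices and their polarity
    (+1 if the neuron is higher on correct traces, -1 if lower).
    """
    diff = acts_correct.mean(axis=0) - acts_incorrect.mean(axis=0)
    idx = np.argsort(-np.abs(diff))[:k]
    return idx, np.sign(diff[idx])


def steer(acts: np.ndarray, idx: np.ndarray, polarity: np.ndarray, alpha: float) -> np.ndarray:
    """Shift the selected neurons by alpha in the direction associated with correct reasoning."""
    steered = acts.copy()
    steered[..., idx] += alpha * polarity
    return steered


rng = np.random.default_rng(0)
correct = rng.normal(size=(200, 16))
incorrect = rng.normal(size=(200, 16))
correct[:, 3] += 3.0     # neuron 3 fires more on correct traces
incorrect[:, 7] += 3.0   # neuron 7 fires more on incorrect traces

idx, polarity = select_rcns(correct, incorrect, k=2)
steered = steer(np.zeros((1, 16)), idx, polarity, alpha=0.5)
```

Keeping the sign of each difference is what makes the selection polarity-aware in this sketch. A neuron that is *lower* on correct traces is pushed down rather than up.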


[NLP-4] Neural Neural Scaling Laws

[Quick Read]: This paper addresses the limitations of existing neural scaling laws for predicting downstream task performance. Approaches that take validation perplexity as input have two weaknesses. First, averaging token-level losses obscures task-specific signal. Second, no simple parametric family can capture the diverse scaling behaviours of individual tasks, which may improve monotonically, plateau, or even degrade with scale. The key contribution is NeuNeu, a neural network that frames scaling-law prediction as time-series extrapolation. It combines observed accuracy trajectories with token-level validation losses and learns to predict future performance without assuming any bottleneck or functional form. NeuNeu is trained entirely on open-source checkpoints from HuggingFace. It reaches a 2.04% mean absolute error (MAE) on 66 downstream tasks, a 38% relative error reduction compared with logistic scaling laws (3.29% MAE), and it generalizes zero-shot to unseen model families, parameter counts, and tasks.

Link: https://arxiv.org/abs/2601.19831

Authors: Michael Y. Hu, Jane Pan, Ayush Rajesh Jhaveri, Nicholas Lourie, Kyunghyun Cho

Affiliations: Unknown

Categories: Machine Learning (cs.LG); Computation and Language (cs.CL)

Comments:

Abstract: Neural scaling laws predict how language model performance improves with increased compute. While aggregate metrics like validation loss can follow smooth power-law curves, individual downstream tasks exhibit diverse scaling behaviors: some improve monotonically, others plateau, and some even degrade with scale. We argue that predicting downstream performance from validation perplexity suffers from two limitations: averaging token-level losses obscures signal, and no simple parametric family can capture the full spectrum of scaling behaviors. To address this, we propose Neural Neural Scaling Laws (NeuNeu), a neural network that frames scaling law prediction as time-series extrapolation. NeuNeu combines temporal context from observed accuracy trajectories with token-level validation losses, learning to predict future performance without assuming any bottleneck or functional form. Trained entirely on open-source model checkpoints from HuggingFace, NeuNeu achieves 2.04% mean absolute error in predicting model accuracy on 66 downstream tasks, a 38% reduction compared to logistic scaling laws (3.29% MAE). Furthermore, NeuNeu generalizes zero-shot to unseen model families, parameter counts, and downstream tasks. Our work suggests that predicting downstream scaling laws directly from data outperforms parametric alternatives.
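The "38%" figure is a relative reduction in error, not a 38-point accuracy gain. It can be worked out from the two MAE values reported above:

```latex
\frac{\mathrm{MAE}_{\text{logistic}} - \mathrm{MAE}_{\text{NeuNeu}}}{\mathrm{MAE}_{\text{logistic}}}
  = \frac{3.29 - 2.04}{3.29} = \frac{1.25}{3.29} \approx 0.380
```

That is about 38%, consistent with the abstract. In absolute terms, the error drops by 1.25 percentage points of accuracy.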


[NLP-5] When Iterative RAG Beats Ideal Evidence: A Diagnostic Study in Scientific Multi-hop Question Answering

[Quick Read]: This paper asks when iterative retrieval-reasoning loops in retrieval-augmented generation (RAG) can beat an idealized static RAG upper bound. That bound, called Gold Context, supplies all oracle evidence at once. The question is studied for multi-hop reasoning in scientific domains, where domain knowledge is sparse, evidence is heterogeneous, and reasoning paths are complex. The authors benchmark eleven LLMs under three regimes:

- No Context.
- Gold Context.
- Iterative RAG, a training-free controller that alternates retrieval, hypothesis refinement, and evidence-aware stopping.

On the chemistry-focused ChemKGMultiHopQA dataset, Iterative RAG consistently outperforms Gold Context, with gains of up to 25.6 percentage points, especially for models without reasoning fine-tuning. Staged retrieval reduces late-hop failures, mitigates context overload, and allows early hypothesis drift to be corrected. This suggests that staging retrieval often matters more than merely having ideal evidence. Remaining failure modes include incomplete hop coverage, distractor-latched trajectories, miscalibrated early stopping, and high composition failure rates even with perfect retrieval.

Link: https://arxiv.org/abs/2601.19827

Authors: Mahdi Astaraki, Mohammad Arshi Saloot, Ali Shiraee Kasmaee, Hamidreza Mahyar, Soheila Samiee

Affiliations: McMaster University; BASF Canada Inc.

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Retrieval (cs.IR)

Comments: 27 pages, 15 figures

Abstract: Retrieval-Augmented Generation (RAG) extends large language models (LLMs) beyond parametric knowledge, yet it is unclear when iterative retrieval-reasoning loops meaningfully outperform static RAG, particularly in scientific domains with multi-hop reasoning, sparse domain knowledge, and heterogeneous evidence. We provide the first controlled, mechanism-level diagnostic study of whether synchronized iterative retrieval and reasoning can surpass an idealized static upper bound (Gold Context) RAG. We benchmark eleven state-of-the-art LLMs under three regimes: (i) No Context, measuring reliance on parametric memory; (ii) Gold Context, where all oracle evidence is supplied at once; and (iii) Iterative RAG, a training-free controller that alternates retrieval, hypothesis refinement, and evidence-aware stopping. Using the chemistry-focused ChemKGMultiHopQA dataset, we isolate questions requiring genuine retrieval and analyze behavior with diagnostics spanning retrieval coverage gaps, anchor-carry drop, query quality, composition fidelity, and control calibration. Across models, Iterative RAG consistently outperforms Gold Context, with gains up to 25.6 percentage points, especially for non-reasoning fine-tuned models. Staged retrieval reduces late-hop failures, mitigates context overload, and enables dynamic correction of early hypothesis drift, but remaining failure modes include incomplete hop coverage, distractor latch trajectories, early stopping miscalibration, and high composition failure rates even with perfect retrieval. Overall, staged retrieval is often more influential than the mere presence of ideal evidence; we provide practical guidance for deploying and diagnosing RAG systems in specialized scientific settings and a foundation for more reliable, controllable iterative retrieval-reasoning frameworks.
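The following is a schematic sketch of a retrieve, refine, and stop controller loop. The retriever and the LLM-based refinement step are passed in as callables. The toy two-hop knowledge base, the function names, and the hop budget are hypothetical, and the paper's actual prompts and stopping rule are not reproduced.

```python
from typing import Callable, List, Tuple


def iterative_rag(
    question: str,
    retrieve: Callable[[str], List[str]],
    refine: Callable[[str, str, List[str]], Tuple[str, str, bool]],
    max_hops: int = 4,
) -> Tuple[str, List[str]]:
    """Alternate retrieval and hypothesis refinement until the controller decides
    the evidence is sufficient or the hop budget is exhausted."""
    evidence: List[str] = []
    hypothesis = ""
    query = question
    for _ in range(max_hops):
        evidence.extend(retrieve(query))
        # refine returns (updated hypothesis, next query, stop flag)
        hypothesis, query, stop = refine(question, hypothesis, evidence)
        if stop:
            break
    return hypothesis, evidence


# Toy two-hop knowledge base: answering needs A -> B, then B -> C.
kb = {"What does A ultimately contain?": ["A is made from B"], "B": ["B contains C"]}


def retrieve(query: str) -> List[str]:
    return kb.get(query, [])


def refine(question: str, hypothesis: str, evidence: List[str]) -> Tuple[str, str, bool]:
    # Stand-in for an LLM call that updates the hypothesis and decides whether to stop.
    if "B contains C" in evidence:
        return "C", "", True
    if "A is made from B" in evidence:
        return "B", "B", False
    return hypothesis, question, False


answer, gathered = iterative_rag("What does A ultimately contain?", retrieve, refine)
```

The second hop's query ("B") only exists after the first hop's hypothesis is formed. A single static retrieval over the original question cannot express that dependency.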


[NLP-6] Zero-Shot Stance Detection in the Wild: Dynamic Target Generation and Multi-Target Adaptation

[Quick Read]: This paper addresses a mismatch between stance detection research and real-world social media. Conventional stance detection assumes a given target, but on social media, stance targets are neither predefined nor static. The key contribution is a new task, zero-shot stance detection in the wild with Dynamic Target Generation and Multi-Target Adaptation (DGTA). The task requires automatically identifying multiple target-stance pairs from text without prior knowledge of the targets. The authors build a Chinese social media stance detection dataset with multi-dimensional evaluation metrics. They then compare integrated and two-stage fine-tuning strategies for large language models (LLMs). The two-stage fine-tuned Qwen2.5-7B achieves the best comprehensive target recognition score (66.99%), while the integrated fine-tuned DeepSeek-R1-Distill-Qwen-7B reaches a stance detection F1 score of 79.26%.

Link: https://arxiv.org/abs/2601.19802

Authors: Aohua Li, Yuanshuo Zhang, Ge Gao, Bo Chen, Xiaobing Zhao

Affiliations: Minzu University of China; National Language Resource Monitoring and Research Center of Minority Languages; Institute of National Security; School of Minority Languages and Literatures

Categories: Computation and Language (cs.CL)

Comments:

Abstract: Current stance detection research typically relies on predicting stance based on given targets and text. However, in real-world social media scenarios, targets are neither predefined nor static but rather complex and dynamic. To address this challenge, we propose a novel task: zero-shot stance detection in the wild with Dynamic Target Generation and Multi-Target Adaptation (DGTA), which aims to automatically identify multiple target-stance pairs from text without prior target knowledge. We construct a Chinese social media stance detection dataset and design multi-dimensional evaluation metrics. We explore both integrated and two-stage fine-tuning strategies for large language models (LLMs) and evaluate various baseline models. Experimental results demonstrate that fine-tuned LLMs achieve superior performance on this task: the two-stage fine-tuned Qwen2.5-7B attains the highest comprehensive target recognition score of 66.99%, while the integrated fine-tuned DeepSeek-R1-Distill-Qwen-7B achieves a stance detection F1 score of 79.26%.


[NLP-7] LVLMs and Humans Ground Differently in Referential Communication

[Quick Read]: This paper addresses a key limitation of generative AI agents as partners for human users. Because they cannot model common ground, they struggle to predict human intent accurately. The authors run a referential communication experiment with a factorial design. Director-matcher pairs (human-human, human-AI, AI-human, and AI-AI) interact over multiple turns in repeated rounds to match pictures of objects that lack obvious lexicalized labels. The authors release three resources: the online data collection pipeline; tools and analyses for accuracy, efficiency, and lexical overlap; and a corpus of 356 dialogues (89 pairs over 4 rounds each). The corpus exposes the limitations of large vision-language models (LVLMs) in interactively resolving referring expressions.

Link: https://arxiv.org/abs/2601.19792

Authors: Peter Zeng, Weiling Li, Amie Paige, Zhengxiang Wang, Panagiotis Kaliosis, Dimitris Samaras, Gregory Zelinsky, Susan Brennan, Owen Rambow

Affiliations: Stony Brook University; Institute for Advanced Computational Science

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Human-Computer Interaction (cs.HC)

Comments: 24 pages, 16 figures, preprint

Abstract: For generative AI agents to partner effectively with human users, the ability to accurately predict human intent is critical. But this ability to collaborate remains limited by a critical deficit: an inability to model common ground. Here, we present a referential communication experiment with a factorial design involving director-matcher pairs (human-human, human-AI, AI-human, and AI-AI) that interact with multiple turns in repeated rounds to match pictures of objects not associated with any obvious lexicalized labels. We release the online pipeline for data collection, the tools and analyses for accuracy, efficiency, and lexical overlap, and a corpus of 356 dialogues (89 pairs over 4 rounds each) that unmasks LVLMs' limitations in interactively resolving referring expressions, a crucial skill that underlies human language use.


[NLP-8] Strong Reasoning Isn't Enough: Evaluating Evidence Elicitation in Interactive Diagnosis

[Quick Read]: This paper addresses how to evaluate an agent's ability to proactively elicit missing clinical evidence under uncertainty during interactive medical consultation. Existing evaluations are mostly static or outcome-centric and ignore the evidence-gathering process. The key contribution is an interactive evaluation framework that models the consultation with a simulated patient and a simulated reporter grounded in atomic evidence. On top of it, the authors define the Information Coverage Rate (ICR), which quantifies how completely an agent uncovers the necessary evidence during the interaction. They also build EviMed, an evidence-based benchmark spanning common complaints to rare diseases. Across 10 models, they find that strong diagnostic reasoning does not guarantee effective information collection. They further propose REFINE, a strategy that uses diagnostic verification to guide the agent in proactively resolving uncertainty. REFINE consistently outperforms baselines and lets smaller agents perform well under strong reasoning supervision.

Link: https://arxiv.org/abs/2601.19773

Authors: Zhuohan Long, Zhijie Bao, Zhongyu Wei

Affiliations: Fudan University; Shanghai Innovation Institute

Categories: Computation and Language (cs.CL)

Comments:

Abstract: Interactive medical consultation requires an agent to proactively elicit missing clinical evidence under uncertainty. Yet existing evaluations largely remain static or outcome-centric, neglecting the evidence-gathering process. In this work, we propose an interactive evaluation framework that explicitly models the consultation process using a simulated patient and a simulated reporter grounded in atomic evidence. Based on this representation, we introduce Information Coverage Rate (ICR) to quantify how completely an agent uncovers necessary evidence during interaction. To support systematic study, we build EviMed, an evidence-based benchmark spanning diverse conditions from common complaints to rare diseases, and evaluate 10 models with varying reasoning abilities. We find that strong diagnostic reasoning does not guarantee effective information collection, and this insufficiency acts as a primary bottleneck limiting performance in interactive settings. To address this, we propose REFINE, a strategy that leverages diagnostic verification to guide the agent in proactively resolving uncertainties. Extensive experiments demonstrate that REFINE consistently outperforms baselines across diverse datasets and facilitates effective model collaboration, enabling smaller agents to achieve superior performance under strong reasoning supervision. Our code can be found at this https URL .
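Here is a minimal sketch of a coverage metric in the spirit of ICR. It assumes ICR is the unweighted share of a case's required atomic evidence items that the agent elicited during the dialogue. The paper may weight items or match them differently, and the evidence labels below are hypothetical.

```python
from typing import Iterable, Set


def information_coverage_rate(required: Set[str], elicited: Iterable[str]) -> float:
    """Fraction of a case's required atomic evidence items that the agent uncovered."""
    if not required:
        raise ValueError("a case must have at least one required evidence item")
    return len(required & set(elicited)) / len(required)


required = {"fever", "productive_cough", "night_sweats", "weight_loss"}
elicited = ["fever", "productive_cough", "headache"]   # headache is not required evidence
icr = information_coverage_rate(required, elicited)
```

Items the agent asks about that are not required evidence do not raise the score. The metric rewards completeness of evidence gathering, not the volume of questions.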


[NLP-9] TokenSeek: Memory Efficient Fine Tuning via Instance-Aware Token Ditching ICLR2026

[Quick Read]: This paper addresses inefficient fine-tuning of large language models (LLMs) on downstream tasks, where activations consume most of the training memory. Existing memory-efficient methods already target activations, but their data-agnostic nature leads to ineffective and unstable fine-tuning. The key contribution is TokenSeek, a universal plug-in for transformer-based models based on instance-aware token seeking and ditching. For each instance, it identifies and keeps the tokens that matter. This substantially cuts fine-tuning memory (e.g., only 14.8% of the memory on Llama3.2 1B) with on-par or better performance. Its interpretable token-seeking process also sheds light on why the method works, offering insights for future research on token efficiency.

Link: https://arxiv.org/abs/2601.19739

Authors: Runjia Zeng, Qifan Wang, Qiang Guan, Ruixiang Tang, Lifu Huang, Zhenting Wang, Xueling Zhang, Cheng Han, Dongfang Liu

Affiliations: Rochester Institute of Technology; Meta AI; Kent State University; Rutgers University; UC Davis; Accenture; University of Missouri-Kansas City

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: ICLR 2026

Abstract: Fine tuning has been regarded as a de facto approach for adapting large language models (LLMs) to downstream tasks, but the high training memory consumption inherited from LLMs makes this process inefficient. Among existing memory efficient approaches, activation-related optimization has proven particularly effective, as activations consistently dominate overall memory consumption. Although prior arts offer various activation optimization strategies, their data-agnostic nature ultimately results in ineffective and unstable fine tuning. In this paper, we propose TokenSeek, a universal plugin solution for various transformer-based models through instance-aware token seeking and ditching, achieving significant fine-tuning memory savings (e.g., requiring only 14.8% of the memory on Llama3.2 1B) with on-par or even better performance. Furthermore, our interpretable token seeking process reveals the underlying reasons for its effectiveness, offering valuable insights for future research on token efficiency. Homepage: this https URL
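The abstract does not say how TokenSeek scores tokens or how ditched tokens are treated in the backward pass, so this is only a sketch of the general idea. It assumes a per-instance importance score is already available for each token. Only the kept tokens' activations would then be retained for gradient computation, and activation memory would shrink roughly in proportion to `keep_ratio`. The function name and scores are hypothetical.

```python
import numpy as np


def seek_and_ditch(scores: np.ndarray, keep_ratio: float):
    """Split one instance's token positions into kept and ditched sets.

    scores: (seq_len,) per-token importance for THIS instance (how it is computed is an assumption).
    Returns sorted kept positions and sorted ditched positions.
    """
    seq_len = scores.shape[0]
    n_keep = max(1, int(round(keep_ratio * seq_len)))
    order = np.argsort(-scores)          # highest-scoring tokens first
    kept = np.sort(order[:n_keep])
    ditched = np.sort(order[n_keep:])
    return kept, ditched


scores = np.array([0.10, 0.90, 0.30, 0.80, 0.20])
kept, ditched = seek_and_ditch(scores, keep_ratio=0.4)
```

Because the scores are computed per instance, two sequences of the same length can keep entirely different positions. That per-instance choice is the contrast with data-agnostic activation-saving schemes that apply one fixed rule to every input.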


[NLP-10] RvB: Automating AI System Hardening via Iterative Red-Blue Games

[Quick Read]: This paper addresses a critical gap in AI security, exposed by the dual offensive and defensive utility of large language models (LLMs): there are no unified frameworks for dynamic, iterative adversarial hardening. The key contribution is the Red Team vs. Blue Team (RvB) framework, formulated as a training-free, sequential, imperfect-information game. The Red Team exposes vulnerabilities, and the Blue Team learns effective defenses without parameter updates. This pushes the system toward fundamental defensive principles rather than fixes overfitted to specific exploits. RvB achieves a Defense Success Rate of 90% on dynamic code hardening against CVEs and 45% on guardrail optimization against jailbreaks, keeps False Positive Rates near 0%, and significantly outperforms baselines.

Link: https://arxiv.org/abs/2601.19726

Authors: Lige Huang, Zicheng Liu, Jie Zhang, Lewen Yan, Dongrui Liu, Jing Shao

Affiliations: Shanghai Artificial Intelligence Laboratory; Institute of Information Engineering, Chinese Academy of Sciences; Shanghai Jiao Tong University

Categories: Cryptography and Security (cs.CR); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments:

Abstract: The dual offensive and defensive utility of Large Language Models (LLMs) highlights a critical gap in AI security: the lack of unified frameworks for dynamic, iterative adversarial adaptation hardening. To bridge this gap, we propose the Red Team vs. Blue Team (RvB) framework, formulated as a training-free, sequential, imperfect-information game. In this process, the Red Team exposes vulnerabilities, driving the Blue Team to learn effective solutions without parameter updates. We validate our framework across two challenging domains: dynamic code hardening against CVEs and guardrail optimization against jailbreaks. Our empirical results show that this interaction compels the Blue Team to learn fundamental defensive principles, leading to robust remediations that are not merely overfitted to specific exploits. RvB achieves Defense Success Rates of 90% and 45% across the respective tasks while maintaining near 0% False Positive Rates, significantly surpassing baselines. This work establishes the iterative adversarial interaction framework as a practical paradigm that automates the continuous hardening of AI systems.


[NLP-11] Component-Level Lesioning of Language Models Reveals Clinically Aligned Aphasia Phenotypes

[Quick Read]: This paper asks whether large language models (LLMs) can be systematically manipulated to reproduce the language-production impairments characteristic of aphasia after focal brain lesions. Such models could serve as scalable proxies for testing rehabilitation hypotheses and as a controlled framework for probing how language function is organized. The key contribution is a clinically grounded, component-level framework that selectively perturbs functional components in LLMs. It applies to both modular Mixture-of-Experts (MoE) models and dense Transformers through a unified intervention interface. The pipeline has three steps:

- Identify components linked to Broca's and Wernicke's aphasia.
- Interpret those components with linguistic probing tasks.
- Induce graded impairments by progressively perturbing the top-k subtype-linked components, scoring outcomes with Western Aphasia Battery (WAB) subtests summarized by the Aphasia Quotient (AQ).

Subtype-targeted perturbations produce more systematic, aphasia-like regressions than size-matched random perturbations. MoE modularity also yields more localized and interpretable phenotype-to-component mappings.

Link: https://arxiv.org/abs/2601.19723

Authors: Yifan Wang, Jichen Zheng, Jingyuan Sun, Yunhao Zhang, Chunyu Ye, Jixing Li, Chengqing Zong, Shaonan Wang

Affiliations: The University of Manchester; CAS; University of Chinese Academy of Sciences; City University of Hong Kong; Hong Kong Polytechnic University

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments:

Abstract: Large language models (LLMs) increasingly exhibit human-like linguistic behaviors and internal representations, such that they could serve as computational simulators of language cognition. We ask whether LLMs can be systematically manipulated to reproduce language-production impairments characteristic of aphasia following focal brain lesions. Such models could provide scalable proxies for testing rehabilitation hypotheses, and offer a controlled framework for probing the functional organization of language. We introduce a clinically grounded, component-level framework that simulates aphasia by selectively perturbing functional components in LLMs, and apply it to both modular Mixture-of-Experts models and dense Transformers using a unified intervention interface. Our pipeline (i) identifies subtype-linked components for Broca's and Wernicke's aphasia, (ii) interprets these components with linguistic probing tasks, and (iii) induces graded impairments by progressively perturbing the top-k subtype-linked components, evaluating outcomes with Western Aphasia Battery (WAB) subtests summarized by Aphasia Quotient (AQ). Across architectures and lesioning strategies, subtype-targeted perturbations yield more systematic, aphasia-like regressions than size-matched random perturbations, and MoE modularity supports more localized and interpretable phenotype-to-component mappings. These findings suggest that modular LLMs, combined with clinically informed component perturbations, provide a promising platform for simulating aphasic language production and studying how distinct language functions degrade under targeted disruptions.
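A toy sketch of step (iii) follows, covering progressive top-k lesioning and its size-matched random control. Two assumptions: zeroing a component's weights stands in for "perturbing" it (the paper may use other lesioning strategies), and the subtype-association scores are given. The WAB-style evaluation of each lesioned model is not shown, and all names and values are hypothetical.

```python
import numpy as np


def lesion_top_k(weights: np.ndarray, assoc_scores: np.ndarray, k: int):
    """Zero out the k components most associated with an aphasia subtype.

    weights: (num_components, ...) with one slice per component (e.g. an MoE expert or a head).
    assoc_scores: (num_components,) subtype-association score per component.
    """
    top = np.argsort(-assoc_scores)[:k]
    lesioned = weights.copy()
    lesioned[top] = 0.0
    return lesioned, top


def random_lesion(weights: np.ndarray, k: int, rng: np.random.Generator):
    """Size-matched control: zero out k components chosen uniformly at random."""
    idx = rng.choice(weights.shape[0], size=k, replace=False)
    lesioned = weights.copy()
    lesioned[idx] = 0.0
    return lesioned, idx


weights = np.ones((6, 3))
assoc = np.array([0.10, 0.70, 0.20, 0.90, 0.05, 0.30])
# Graded impairment: lesion progressively more subtype-linked components.
for k in range(1, 4):
    lesioned, removed = lesion_top_k(weights, assoc, k)
    # ...evaluate the lesioned model on WAB-style subtests here (not shown)
```

Comparing each targeted lesion against `random_lesion` with the same `k` separates the effect of *which* components are removed from the effect of *how many* are removed.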


[NLP-12] SynCABEL: Synthetic Contextualized Augmentation for Biomedical Entity Linking

[Quick Read]: This paper addresses the central bottleneck of supervised biomedical entity linking (BEL): expert-annotated training data is scarce. The key contribution is SynCABEL (Synthetic Contextualized Augmentation for Biomedical Entity Linking). The framework uses large language models (LLMs) to generate context-rich synthetic training examples for every candidate concept in the target knowledge base, providing broad supervision without manual annotation. Combined with decoder-only models and guided inference, it sets new state-of-the-art results on three widely used multilingual benchmarks: MedMentions for English, QUAERO for French, and SPACCC for Spanish. It also matches full human supervision while using up to 60% less annotated data. Exact code matching underestimates clinically valid predictions because of ontology redundancy, so the authors also introduce an LLM-as-a-judge protocol. Under that protocol, SynCABEL significantly improves the rate of clinically valid predictions.

Link: https://arxiv.org/abs/2601.19667

Authors: Adam Remaki, Christel Gérardin, Eulàlia Farré-Maduell, Martin Krallinger, Xavier Tannier

Affiliations: Sorbonne Université; Inserm; Université Sorbonne Paris Nord; Limics; Barcelona Supercomputing Center

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Retrieval (cs.IR); Machine Learning (cs.LG)

Comments:

Abstract: We present SynCABEL (Synthetic Contextualized Augmentation for Biomedical Entity Linking), a framework that addresses a central bottleneck in supervised biomedical entity linking (BEL): the scarcity of expert-annotated training data. SynCABEL leverages large language models to generate context-rich synthetic training examples for all candidate concepts in a target knowledge base, providing broad supervision without manual annotation. We demonstrate that SynCABEL, when combined with decoder-only models and guided inference, establishes new state-of-the-art results across three widely used multilingual benchmarks: MedMentions for English, QUAERO for French, and SPACCC for Spanish. Evaluating data efficiency, we show that SynCABEL reaches the performance of full human supervision using up to 60% less annotated data, substantially reducing reliance on labor-intensive and costly expert labeling. Finally, acknowledging that standard evaluation based on exact code matching often underestimates clinically valid predictions due to ontology redundancy, we introduce an LLM-as-a-judge protocol. This analysis reveals that SynCABEL significantly improves the rate of clinically valid predictions. Our synthetic datasets, models, and code are released to support reproducibility and future research.


[NLP-13] One Token Is Enough: Improving Diffusion Language Models with a Sink Token

[Quick Read]: This paper addresses a critical instability in Diffusion Language Models (DLMs) called the moving sink phenomenon. The analysis shows that sink tokens have low-norm representations in the Transformer's value space and that moving sinks act as a protective mechanism against excessive information mixing. However, their unpredictable positions across diffusion steps undermine inference robustness. The key contribution is a single extra sink token implemented through a modified attention mask: the special token may attend only to itself while remaining globally visible to all other tokens. Experiments show that this stabilizes attention sinks and substantially improves performance. Further analysis shows that the token's effectiveness does not depend on its position and that it carries negligible semantic content, confirming its role as a robust, dedicated structural sink.

Link: https://arxiv.org/abs/2601.19657

Authors: Zihou Zhang, Zheyong Xie, Li Zhong, Haifeng Liu, Shaosheng Cao

Affiliations: Xiaohongshu Inc.

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments:

Abstract: Diffusion Language Models (DLMs) have emerged as a compelling alternative to autoregressive approaches, enabling parallel text generation with competitive performance. Despite these advantages, there is a critical instability in DLMs: the moving sink phenomenon. Our analysis indicates that sink tokens exhibit low-norm representations in the Transformer's value space, and that the moving sink phenomenon serves as a protective mechanism in DLMs to prevent excessive information mixing. However, their unpredictable positions across diffusion steps undermine inference robustness. To resolve this, we propose a simple but effective extra sink token implemented via a modified attention mask. Specifically, we introduce a special token constrained to attend solely to itself, while remaining globally visible to all other tokens. Experimental results demonstrate that introducing a single extra token stabilizes attention sinks, substantially improving model performance. Crucially, further analysis confirms that the effectiveness of this token is independent of its position and characterized by negligible semantic content, validating its role as a robust and dedicated structural sink.
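Below is a minimal sketch of the attention mask described above, for a bidirectional diffusion LM: the sink row allows only itself, while the sink column stays visible to every query. The paper reports that the sink's position does not matter, so `sink_index` is a parameter here. How the mask is wired into a real model's attention layers is an assumption left out of the sketch.

```python
import numpy as np


def sink_attention_mask(seq_len: int, sink_index: int = 0) -> np.ndarray:
    """Boolean attention mask for a bidirectional diffusion LM with one extra sink token.

    mask[i, j] is True when query position i may attend to key position j.
    """
    mask = np.ones((seq_len, seq_len), dtype=bool)  # ordinary tokens: full bidirectional attention
    mask[sink_index, :] = False                     # the sink token attends to nothing else...
    mask[sink_index, sink_index] = True             # ...except itself
    return mask                                     # column sink_index is all True: globally visible


def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Row-wise softmax over allowed keys only."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)


mask = sink_attention_mask(5)
attn = masked_softmax(np.zeros((5, 5)), mask)
```

With uniform scores, the sink's own row puts all weight on itself, while every other row spreads weight over all five keys, including the sink. Ordinary tokens can therefore "park" attention on the sink without the sink absorbing any content from them.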


[NLP-14] RATE: Reviewer Profiling and Annotation-free Training for Expertise Ranking in Peer Review Systems

[Quick Read]: This paper addresses the evaluation bottleneck in reviewer assignment in the LLM era. Rapid topic shifts have made many pre-2023 benchmarks outdated, and proxy signals poorly reflect how familiar a reviewer really is with a paper. The paper makes two contributions:

- LR-bench, a high-fidelity, up-to-date benchmark built from 2024-2025 AI/NLP manuscripts. It carries five-level self-assessed familiarity ratings collected through a large-scale email survey, giving 1055 expert-annotated paper-reviewer-score annotations.
- RATE, a reviewer-centric ranking framework. It distills each reviewer's recent publications into compact keyword-based profiles and fine-tunes an embedding model with weak preference supervision built from heuristic retrieval signals, so that each manuscript can be matched directly against reviewer profiles.

On LR-bench and the CMU gold-standard dataset, RATE clearly outperforms strong embedding baselines.

Link: https://arxiv.org/abs/2601.19637

Authors: Weicong Liu, Zixuan Yang, Yibo Zhao, Xiang Li

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: 18 pages

Abstract: Reviewer assignment is increasingly critical yet challenging in the LLM era, where rapid topic shifts render many pre-2023 benchmarks outdated and where proxy signals poorly reflect true reviewer familiarity. We address this evaluation bottleneck by introducing LR-bench, a high-fidelity, up-to-date benchmark curated from 2024-2025 AI/NLP manuscripts with five-level self-assessed familiarity ratings collected via a large-scale email survey, yielding 1055 expert-annotated paper-reviewer-score annotations. We further propose RATE, a reviewer-centric ranking framework that distills each reviewer's recent publications into compact keyword-based profiles and fine-tunes an embedding model with weak preference supervision constructed from heuristic retrieval signals, enabling matching each manuscript against a reviewer profile directly. Across LR-bench and the CMU gold-standard dataset, our approach consistently achieves state-of-the-art performance, outperforming strong embedding baselines by a clear margin. We release LR-bench at this https URL, and a GitHub repository at this https URL.
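The sketch below shows only the profile-matching step: reviewers are ranked by similarity between a manuscript's keywords and each reviewer's keyword profile. As a loudly labeled simplification, a bag-of-keywords cosine similarity stands in for RATE's fine-tuned embedding model. Keyword extraction from publications and the weak preference training are not shown, and the reviewer names and keywords are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def profile(keywords: List[str]) -> Counter:
    """Keyword profile as a bag of lower-cased keywords."""
    return Counter(k.lower() for k in keywords)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_reviewers(paper_keywords: List[str], reviewers: Dict[str, List[str]]) -> List[str]:
    """Return reviewer names ordered from best to worst match for the manuscript."""
    p = profile(paper_keywords)
    return sorted(reviewers, key=lambda r: cosine(p, profile(reviewers[r])), reverse=True)


reviewers = {
    "alice": ["retrieval", "rag", "dense retrieval"],
    "bob": ["speech", "asr"],
    "carol": ["rag", "reranking"],
}
ranking = rank_reviewers(["RAG", "retrieval", "reranking"], reviewers)
```

Here carol (about 0.82) ranks above alice (about 0.67) because her profile is shorter and entirely on-topic, while bob shares no keywords. A learned embedding, as in RATE, would additionally match related terms that share no exact keyword.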


[NLP-15] Up to 36x Speedup: Mask-based Parallel Inference Paradigm for Key Information Extraction in MLLMs ICASSP2026

[Quick Read]: This paper addresses the efficiency bottleneck that autoregressive inference creates in Key Information Extraction (KIE) from visually-rich documents (VrDs). LLMs and multimodal LLMs (MLLMs) generate outputs sequentially, even though KIE usually involves extracting multiple, semantically independent fields. The key contribution is PIP, a Parallel Inference Paradigm that uses "[mask]" tokens as placeholders for all target values, so that they can be generated simultaneously in a single forward pass. To support this paradigm, the authors design a tailored mask pre-training strategy and build large-scale supervised datasets. PIP models achieve a 5-36x inference speedup over traditional autoregressive base models with negligible performance degradation.

Link: https://arxiv.org/abs/2601.19613

Authors: Xinzhong Wang, Ya Guo, Jing Li, Huan Chen, Yi Tu, Yijie Hong, Gongshen Liu, Huijia Zhu

Affiliations: Unknown

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: Accepted by ICASSP 2026

Abstract: Key Information Extraction (KIE) from visually-rich documents (VrDs) is a critical task, for which recent Large Language Models (LLMs) and Multi-Modal Large Language Models (MLLMs) have demonstrated strong potential. However, their reliance on autoregressive inference, which generates outputs sequentially, creates a significant efficiency bottleneck, especially as KIE tasks often involve extracting multiple, semantically independent fields. To overcome this limitation, we introduce PIP: a Parallel Inference Paradigm for KIE. Our approach reformulates the problem by using "[mask]" tokens as placeholders for all target values, enabling their simultaneous generation in a single forward pass. To facilitate this paradigm, we develop a tailored mask pre-training strategy and construct large-scale supervised datasets. Experimental results show that our PIP-models achieve a 5-36x inference speedup with negligible performance degradation compared to traditional autoregressive base models. By substantially improving efficiency while maintaining high accuracy, PIP paves the way for scalable and practical real-world KIE solutions.
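Here is a minimal sketch of the placeholder idea: every target field gets a "[mask]" slot, and one model call fills all slots at once instead of decoding the fields one after another. The query template, the field names, and `predict_masks` (a stand-in for one forward pass of a mask-pretrained MLLM over the document image plus query) are hypothetical. How PIP handles values longer than one token is not described in the abstract, so the sketch simply assumes one prediction string per placeholder.

```python
from typing import Callable, Dict, List

MASK = "[mask]"


def build_parallel_query(fields: List[str]) -> str:
    """One line per target field, with every value replaced by a [mask] placeholder."""
    return "\n".join(f"{field}: {MASK}" for field in fields)


def parallel_extract(fields: List[str], predict_masks: Callable[[str], List[str]]) -> Dict[str, str]:
    """Fill all placeholders with ONE model call instead of decoding fields one after another."""
    query = build_parallel_query(fields)
    values = predict_masks(query)
    if len(values) != query.count(MASK):
        raise ValueError("expected one prediction per [mask] placeholder")
    return dict(zip(fields, values))


calls = []


def fake_predict_masks(query: str) -> List[str]:
    # Stand-in for a single forward pass of a mask-pretrained MLLM over the document + query.
    calls.append(query)
    return ["INV-0042", "2025-03-14", "128.50"]


result = parallel_extract(["invoice_number", "date", "total"], fake_predict_masks)
```

The speedup comes from the call count. An autoregressive extractor needs a decoding step per output token across all fields, whereas here the number of model calls stays at one regardless of how many independent fields are requested.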

[NLP-16] Explicit Multi-head Attention for Inter-head Interaction in Large Language Models

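【Quick Read】: This paper addresses the limited performance of attention in large language models caused by insufficient interaction between attention heads, i.e. how to model cross-head interaction in multi-head attention more effectively. The key is a new mechanism, Multi-head Explicit Attention (MEA), with two components: (1) a Head-level Linear Composition (HLC) module that applies learnable linear combinations across heads, separately to the key and to the value vectors, to strengthen cross-head information flow; and (2) a head-level Group Normalization layer that aligns the statistics of the recombined heads to improve training stability. The design makes pretraining markedly more robust, allowing larger learning rates and faster convergence. When the number of attention heads is reduced, HLC can rebuild them as low-rank “virtual heads”, which enables KV-cache compression that cuts cache memory by 50% with negligible loss on most tasks (and a 3.59% accuracy drop on Olympiad-level math).

Link: https://arxiv.org/abs/2601.19611

Authors: Runyu Peng,Yunhua Zhou,Demin Song,Kai Lv,Bo Wang,Qipeng Guo,Xipeng Qiu

Affiliations: Shanghai AI Laboratory; Fudan University

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: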

Abstract:In large language models built upon the Transformer architecture, recent studies have shown that inter-head interaction can enhance attention performance. Motivated by this, we propose Multi-head Explicit Attention (MEA), a simple yet effective attention variant that explicitly models cross-head interaction. MEA consists of two key components: a Head-level Linear Composition (HLC) module that separately applies learnable linear combinations to the key and value vectors across heads, thereby enabling rich inter-head communication; and a head-level Group Normalization layer that aligns the statistical properties of the recombined heads. MEA shows strong robustness in pretraining, which allows the use of larger learning rates that lead to faster convergence, ultimately resulting in lower validation loss and improved performance across a range of tasks. Furthermore, we explore the parameter efficiency of MEA by reducing the number of attention heads and leveraging HLC to reconstruct them using low-rank “virtual heads”. This enables a practical key-value cache compression strategy that reduces KV-cache memory usage by 50% with negligible performance loss on knowledge-intensive and scientific reasoning tasks, and only a 3.59% accuracy drop for Olympiad-level mathematical benchmarks.
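The two MEA components can be sketched for a single token in plain Python: `head_linear_composition` mixes the per-head key (or value) vectors with a head-by-head matrix, and `head_group_norm` standardizes each recombined head. The example also mimics the KV-cache idea by caching only 2 heads and expanding them into 4 low-rank "virtual heads". Assumptions: the mixing matrix is fixed here rather than learned, the normalization has no learnable scale or shift, and all names are hypothetical; this is not the authors' implementation.

```python
import math

def head_linear_composition(heads, mix):
    """Recombine per-head vectors: out[i] = sum_j mix[i][j] * heads[j].

    heads: H vectors of length d (one key or value vector per head, for one token).
    mix:   H_out x H mixing matrix; learnable in MEA, fixed numbers here.
    """
    d = len(heads[0])
    return [[sum(mix[i][j] * heads[j][t] for j in range(len(heads))) for t in range(d)]
            for i in range(len(mix))]

def head_group_norm(heads, eps=1e-5):
    """Standardize each head separately (one normalization group per head, no affine params)."""
    normed = []
    for h in heads:
        mean = sum(h) / len(h)
        var = sum((x - mean) ** 2 for x in h) / len(h)
        normed.append([(x - mean) / math.sqrt(var + eps) for x in h])
    return normed

# Cache keys for only 2 heads (d = 4), then expand them into 4 "virtual" heads.
cached_keys = [[1.0, 2.0, 3.0, 4.0], [0.5, -0.5, 0.5, -0.5]]
mix = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, -1.0]]  # 4 x 2, so rank <= 2
virtual_keys = head_group_norm(head_linear_composition(cached_keys, mix))
print(len(virtual_keys), len(virtual_keys[0]))  # 4 4
```

Only the 2 cached heads need to be stored, which is where a 50% cache saving would come from in this toy; the paper's quality claims depend on the learned mixing, which the sketch does not model.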

[NLP-17] Decompose-and-Formalise: Recursively Verifiable Natural Language Inference

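【Quick Read】: This paper tackles two core problems that arise when generative AI autoformalises natural-language premises and hypotheses into logical forms for natural language inference (NLI): long, syntactically complex inputs easily cause autoformalisation errors that make proofs fail, and existing methods usually respond to a failure by regenerating the whole explanation, which is inefficient and hides the source of the error. The key is a decompose-and-formalise framework that breaks each premise-hypothesis pair into an entailment tree of atomic steps, verifies the tree bottom-up to pinpoint the failing nodes, and then applies local, diagnostic-guided refinement instead of global regeneration. It also introduces θ-substitution in an event-based logical form to enforce consistent argument-role bindings, improving the faithfulness of autoformalisation. The approach substantially raises explanation verification rates (by up to 48.9% over the state of the art) while reducing refinement iterations and runtime and preserving NLI accuracy.

Link: https://arxiv.org/abs/2601.19605

Authors: Xin Quan,Marco Valentino,Louise A. Dennis,André Freitas

Affiliations: University of Manchester; University of Sheffield; Idiap Research Institute; National Biomarker Centre, CRUK-MI (Cancer Research UK Manchester Institute)

Categories: Computation and Language (cs.CL)

Comments: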

Abstract:Recent work has shown that integrating large language models (LLMs) with theorem provers (TPs) in neuro-symbolic pipelines helps with entailment verification and proof-guided refinement of explanations for natural language inference (NLI). However, scaling such refinement to naturalistic NLI remains difficult: long, syntactically rich inputs and deep multi-step arguments amplify autoformalisation errors, where a single local mismatch can invalidate the proof. Moreover, current methods often handle failures via costly global regeneration due to the difficulty of localising the responsible span or step from prover diagnostics. Aiming to address these problems, we propose a decompose-and-formalise framework that (i) decomposes premise-hypothesis pairs into an entailment tree of atomic steps, (ii) verifies the tree bottom-up to isolate failures to specific nodes, and (iii) performs local diagnostic-guided refinement instead of regenerating the whole explanation. Moreover, to improve faithfulness of autoformalisation, we introduce θ-substitution in an event-based logical form to enforce consistent argument-role bindings. Across a range of reasoning tasks using five LLM backbones, our method achieves the highest explanation verification rates, improving over the state-of-the-art by 26.2%, 21.7%, 21.6% and 48.9%, while reducing refinement iterations and runtime and preserving strong NLI accuracy.
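Bottom-up verification with failure localization, step (ii) above, can be sketched as a recursive tree walk: children are checked before their parent, and a node is reported only when all of its children passed but the node itself failed, so refinement can target just that node. This is a toy under stated assumptions: `prover` is a hypothetical boolean stand-in for a real theorem-prover call on one autoformalised step, and "refinement" is simulated by marking the step as fixed.

```python
from dataclasses import dataclass, field

@dataclass
class Step:
    """One atomic step of an entailment tree; children are the steps it depends on."""
    name: str
    children: list = field(default_factory=list)

def verify_bottom_up(step, prover, failures):
    """Return True if the subtree verifies; record the lowest failing steps in `failures`.

    prover(name) -> bool stands in for a theorem-prover check of one autoformalised step.
    """
    # Check every child (no short-circuit) so that all independent failures are found.
    children_ok = all([verify_bottom_up(c, prover, failures) for c in step.children])
    if not children_ok:
        return False  # the failure was already localized further down
    if not prover(step.name):
        failures.append(step.name)  # this step itself is the culprit
        return False
    return True

tree = Step("hypothesis", [Step("s1", [Step("p1"), Step("p2")]), Step("s2", [Step("p3")])])
broken = {"p2"}  # pretend p2 has an autoformalisation mismatch

def prover(name):
    return name not in broken

failures = []
print(verify_bottom_up(tree, prover, failures), failures)  # False ['p2']

broken.discard("p2")  # stand-in for local, diagnostic-guided refinement of p2 only
failures = []
print(verify_bottom_up(tree, prover, failures), failures)  # True []
```

Only `p2` is revisited between the two runs; the rest of the explanation is left untouched, which is the point of local rather than global regeneration.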

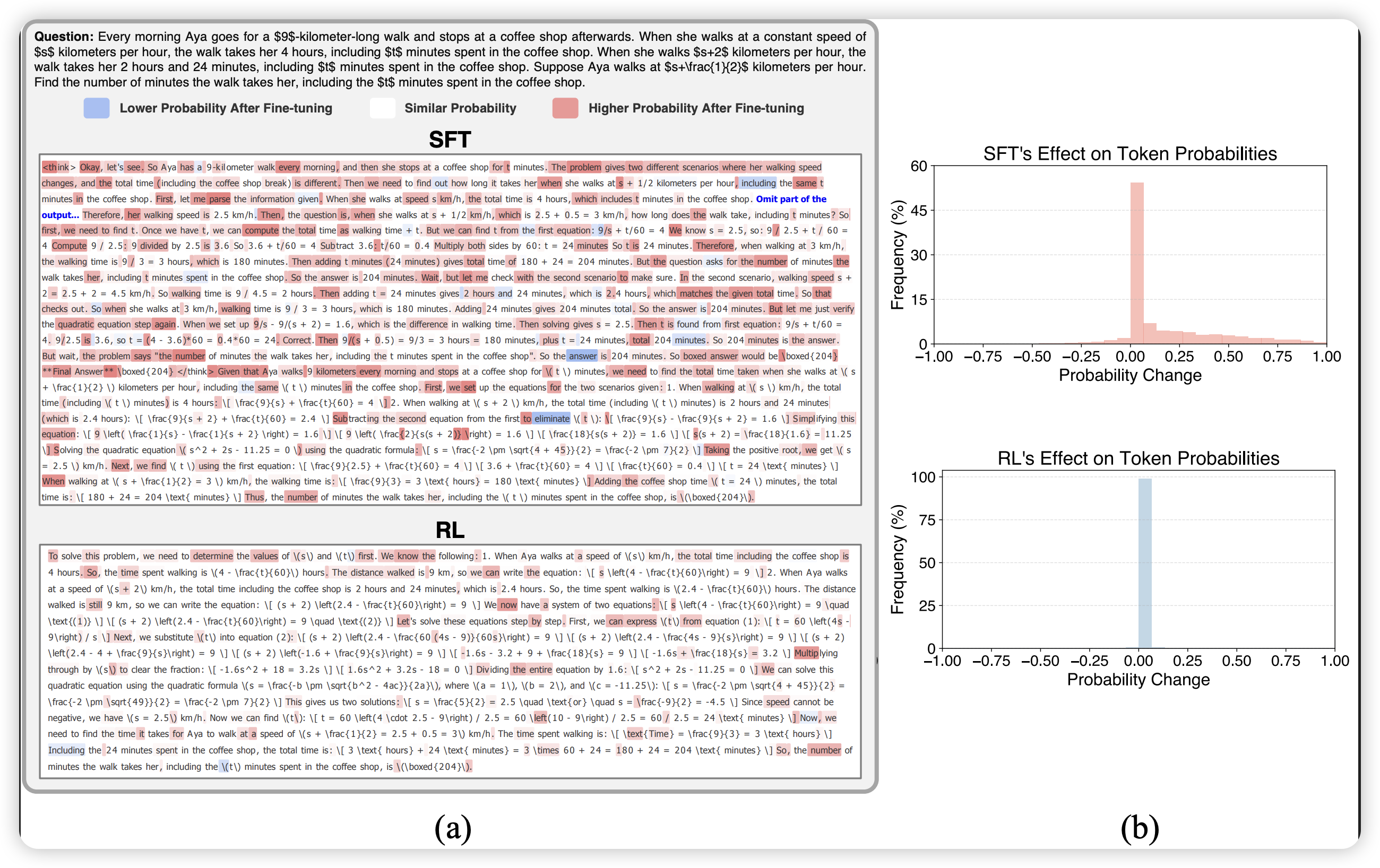

[NLP-18] Yunque DeepResearch Technical Report

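【Quick Read】: This paper addresses three core challenges of deep research in autonomous agents: contextual noise that escalates over long-horizon tasks, fragility that leads to cascading errors, and a lack of modular extensibility. The authors propose Yunque DeepResearch, a hierarchical, modular and robust framework built on three components: (1) a centralized multi-agent orchestration system that routes subtasks to the tools and specialized sub-agents of an atomic capability pool; (2) a dynamic context management mechanism that condenses completed sub-goals into semantic summaries to mitigate information overload; and (3) a proactive supervisor module that keeps the system resilient through anomaly detection and context pruning. The framework achieves state-of-the-art performance on benchmarks including GAIA, BrowseComp, BrowseComp-ZH and Humanity’s Last Exam.

Link: https://arxiv.org/abs/2601.19578

Authors: Yuxuan Cai,Xinyi Lai,Peng Yuan,Weiting Liu,Huajian Li,Mingda Li,Xinghua Wang,Shengxie Zheng,Yanchao Hao,Yuyang Yin,Zheng Wei

Affiliations: Tencent BAC; Tsinghua University; Fudan University

Categories: Computation and Language (cs.CL)

Comments: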

Abstract:Deep research has emerged as a transformative capability for autonomous agents, empowering Large Language Models to navigate complex, open-ended tasks. However, realizing its full potential is hindered by critical limitations, including escalating contextual noise in long-horizon tasks, fragility leading to cascading errors, and a lack of modular extensibility. To address these challenges, we introduce Yunque DeepResearch, a hierarchical, modular, and robust framework. The architecture is characterized by three key components: (1) a centralized Multi-Agent Orchestration System that routes subtasks to an Atomic Capability Pool of tools and specialized sub-agents; (2) a Dynamic Context Management mechanism that structures completed sub-goals into semantic summaries to mitigate information overload; and (3) a proactive Supervisor Module that ensures resilience through active anomaly detection and context pruning. Yunque DeepResearch achieves state-of-the-art performance across a range of agentic deep research benchmarks, including GAIA, BrowseComp, BrowseComp-ZH, and Humanity’s Last Exam. We open-source the framework, reproducible implementations, and application cases to empower the community.

[NLP-19] Benchmarks Saturate When The Model Gets Smarter Than The Judge

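【Quick Read】: This paper addresses the weakened validity of benchmarks for large language models (LLMs) caused by inaccurate datasets and flawed evaluation methods. Its core contribution is Omni-MATH-2, a manually revised version of Omni-MATH in which every problem was audited for LaTeX compilability, solvability and verifiability: clutter was removed and problems requiring a proof, an estimate or an image were tagged, which substantially reduces dataset noise. Checking two judges (GPT-5 mini and the original Omni-Judge) against expert annotations, the authors find that Omni-Judge is wrong in 96.4% of the cases where the judges disagree, so it cannot reliably separate models' abilities and breaks down well before the benchmark saturates. The study stresses that high-quality data and reliable judging are both prerequisites for accurately evaluating LLM performance.

Link: https://arxiv.org/abs/2601.19532

Authors: Marthe Ballon,Andres Algaba,Brecht Verbeken,Vincent Ginis

Affiliations: Data Analytics Lab, Vrije Universiteit Brussel, Pleinlaan 5, 1050 Brussel, Belgium; imec-SMIT, Vrije Universiteit Brussel, Pleinlaan 9, 1050 Brussels, Belgium; School of Engineering and Applied Sciences, Harvard University, Cambridge, Massachusetts 02138, USA

Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Machine Learning (cs.LG)

Comments: 17 pages, 10 figures, 3 tables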

Abstract:Benchmarks are important tools to track progress in the development of Large Language Models (LLMs), yet inaccuracies in datasets and evaluation methods consistently undermine their effectiveness. Here, we present Omni-MATH-2, a manually revised version of the Omni-MATH dataset comprising a clean, exact-answer subset (n = 4181) and a tagged, non-standard subset (n = 247). Each problem was audited to ensure LaTeX compilability, solvability and verifiability, which involved adding missing figures or information, labeling problems requiring a proof, estimation or image, and removing clutter. This process significantly reduces dataset-induced noise, thereby providing a more precise assessment of model performance. The annotated dataset also allows us to evaluate judge-induced noise by comparing GPT-5 mini with the original Omni-Judge, revealing substantial discrepancies between judges on both the clean and tagged problem subsets. Expert annotations reveal that Omni-Judge is wrong in 96.4% of the judge disagreements, indicating its inability to differentiate between models’ abilities, even well before saturation of the benchmark occurs. As problems become more challenging, we find that increasingly competent judges become essential in order to prevent judge errors from masking genuine differences between models. Finally, neither judge identifies the present failure modes for the subset of tagged problems, demonstrating that dataset quality and judge reliability are both critical to develop accurate benchmarks of model performance.
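The "wrong in 96.4% of the judge disagreements" statistic is a simple computation once expert labels exist: restrict to items where the two judges disagree and count how often one judge contradicts the expert. The sketch below shows that computation on made-up toy labels (not data from the paper); `disagreement_report` is a hypothetical helper name. With binary correct/incorrect verdicts, exactly one judge is wrong on every disagreement, so judge B's share is the complement.

```python
def disagreement_report(expert, judge_a, judge_b):
    """Where two judges disagree, measure how often judge A contradicts the expert label.

    Each argument is a list of booleans: "the model's answer to item i is correct".
    With binary verdicts, exactly one judge is wrong on every disagreement.
    """
    disagree = [i for i in range(len(expert)) if judge_a[i] != judge_b[i]]
    a_wrong = sum(judge_a[i] != expert[i] for i in disagree)
    share = a_wrong / len(disagree) if disagree else 0.0
    return {"disagreements": len(disagree), "a_wrong_share": share}

# Illustrative toy labels only, not data from the paper.
expert  = [True, True, False, False, True, False]
judge_a = [True, False, True, False, False, False]
judge_b = [True, True, False, False, True, True]
print(disagreement_report(expert, judge_a, judge_b))
# {'disagreements': 4, 'a_wrong_share': 0.75}
```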

[NLP-20] Enhancing Academic Paper Recommendations Using Fine-Grained Knowledge Entities and Multifaceted Document Embeddings

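【Quick Read】: This paper addresses the failure of current academic paper recommendation systems to meet scholars' fine-grained needs during research, such as finding papers that use a particular research method or tackle a particular subtask. The key is a recommendation method that fuses multidimensional information: new types of fine-grained knowledge entities, document titles and abstracts, and citation data are combined into a single paper vector, and papers are recommended by the similarity between these vectors. The method reaches an average precision of 27.3% over the top-50 recommendations on the STM-KG dataset, 6.7% higher than existing approaches.

Link: https://arxiv.org/abs/2601.19513

Authors: Haixu Xi,Heng Zhang,Chengzhi Zhang

Affiliations: Jiangsu University of Science and Technology; Central China Normal University; Nanjing University of Science and Technology

Categories: Information Retrieval (cs.IR); Computation and Language (cs.CL); Digital Libraries (cs.DL)

Comments: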

Abstract:In the era of explosive growth in academic literature, the burden of literature review on scholars is increasing. Proactively recommending academic papers that align with scholars’ literature needs in the research process has become one of the crucial pathways to enhance research efficiency and stimulate innovative thinking. Current academic paper recommendation systems primarily focus on broad and coarse-grained suggestions based on general topic or field similarities. While these systems effectively identify related literature, they fall short in addressing scholars’ more specific and fine-grained needs, such as locating papers that utilize particular research methods, or tackle distinct research tasks within the same topic. To meet the diverse and specific literature needs of scholars in the research process, this paper proposes a novel academic paper recommendation method. This approach embeds multidimensional information by integrating new types of fine-grained knowledge entities, document titles and abstracts, and citation data. Recommendations are then generated by calculating the similarity between combined paper vectors. The proposed recommendation method was evaluated using the STM-KG dataset, a knowledge graph that incorporates scientific concepts derived from papers across ten distinct domains. The experimental results indicate that our method outperforms baseline models, achieving an average precision of 27.3% among the top 50 recommendations. This represents an improvement of 6.7% over existing approaches.
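"Calculating the similarity between combined paper vectors" can be sketched as follows: normalize each facet (knowledge-entity vector, title/abstract vector, citation vector), concatenate them, rank candidates by cosine similarity and score the top-k with precision@k. This is a minimal sketch assuming equal-weight concatenation of L2-normalized facets; the paper's actual encoders and fusion scheme are not specified here, and the tiny 2-d vectors and helper names are hypothetical.

```python
import math

def unit(v):
    """L2-normalize a vector (returned unchanged if it is all zeros)."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)

def paper_vector(entity_vec, text_vec, citation_vec):
    """Concatenate the normalized facets so each contributes equally, then renormalize."""
    return unit(unit(entity_vec) + unit(text_vec) + unit(citation_vec))

def recommend(query_vec, corpus, k):
    """Rank candidate papers by cosine similarity (dot product of unit vectors)."""
    scores = {pid: sum(a * b for a, b in zip(query_vec, vec)) for pid, vec in corpus.items()}
    return sorted(scores, key=scores.get, reverse=True)[:k]

def precision_at_k(recommended, relevant):
    """Fraction of the recommended papers that are relevant."""
    return sum(p in relevant for p in recommended) / len(recommended)

query = paper_vector([1, 0], [1, 0], [0, 1])
corpus = {
    "p1": paper_vector([1, 0], [0.9, 0.1], [0, 1]),  # same method entity, similar text
    "p2": paper_vector([0, 1], [1, 0], [1, 0]),      # similar text only
    "p3": paper_vector([1, 0.1], [0, 1], [0, 1]),    # similar entity and citations
}
top2 = recommend(query, corpus, k=2)
print(top2, precision_at_k(top2, relevant={"p1", "p2"}))  # ['p1', 'p3'] 0.5
```

Because each facet is normalized before concatenation, the final cosine is the average of the per-facet cosines, so no single facet can dominate just by having a larger scale.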

[NLP-21] ALRM: Agentic LLM for Robotic Manipulation

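【Quick Read】: This paper addresses two problems in current robotic manipulation frameworks built on large language models (LLMs): existing methods lack modular, closed-loop agentic execution, which makes it hard to cycle through planning, reflection and action revision; and existing manipulation benchmarks focus on low-level control and do not systematically evaluate multi-step reasoning or linguistic variation. The key is Agentic LLM for Robot Manipulation (ALRM), a framework that couples policy generation with agentic execution through a ReAct-style reasoning loop and supports two complementary modes: Code-as-Policy (CaP), which directly generates executable control code, and Tool-as-Policy (TaP), which executes actions through iterative planning and tool calls. This design links natural-language reasoning to reliable robot execution, and the authors also build a new simulation benchmark of 56 tasks to evaluate it systematically.

Link: https://arxiv.org/abs/2601.19510

Authors: Vitor Gaboardi dos Santos,Ibrahim Khadraoui,Ibrahim Farhat,Hamza Yous,Samy Teffahi,Hakim Hacid

Affiliations: Unknown

Categories: Robotics (cs.RO); Computation and Language (cs.CL)

Comments: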

Abstract:Large Language Models (LLMs) have recently empowered agentic frameworks to exhibit advanced reasoning and planning capabilities. However, their integration in robotic control pipelines remains limited in two aspects: (1) prior LLM-based approaches often lack modular, agentic execution mechanisms, limiting their ability to plan, reflect on outcomes, and revise actions in a closed-loop manner; and (2) existing benchmarks for manipulation tasks focus on low-level control and do not systematically evaluate multistep reasoning and linguistic variation. In this paper, we propose Agentic LLM for Robot Manipulation (ALRM), an LLM-driven agentic framework for robotic manipulation. ALRM integrates policy generation with agentic execution through a ReAct-style reasoning loop, supporting two complementary modes: Code-as-Policy (CaP) for direct executable control code generation, and Tool-as-Policy (TaP) for iterative planning and tool-based action execution. To enable systematic evaluation, we also introduce a novel simulation benchmark comprising 56 tasks across multiple environments, capturing linguistically diverse instructions. Experiments with ten LLMs demonstrate that ALRM provides a scalable, interpretable, and modular approach for bridging natural language reasoning with reliable robotic execution. Results reveal Claude-4.1-Opus as the top closed-source model and Falcon-H1-7B as the top open-source model under CaP.

[NLP-22] Automated Safety Benchmarking: A Multi-agent Pipeline for LVLMs

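【Quick Read】: This paper addresses the safety challenges that large vision-language models (LVLMs) face in cross-modal tasks, and in particular the fact that existing safety benchmarks are costly to build by hand, have static complexity and limited discriminative power, and therefore struggle to keep pace with rapidly evolving models and emerging risks. The key is VLSafetyBencher, the first automated LVLM safety benchmarking system, in which four collaborating agents (Data Preprocessing, Generation, Augmentation and Selection) construct and select high-quality safety test samples automatically, so that differences in model safety can be measured at low cost. Experiments show that the system builds a benchmark within one week, and the resulting benchmark shows a 70% safety-rate gap between the safest and least safe models.

Link: https://arxiv.org/abs/2601.19507

Authors: Xiangyang Zhu,Yuan Tian,Zicheng Zhang,Qi Jia,Chunyi Li,Renrui Zhang,Heng Li,Zongrui Wang,Wei Sun

Affiliations: Shanghai AI Lab; PolyU HK (The Hong Kong Polytechnic University); East China Normal University

Categories: Computation and Language (cs.CL)

Comments: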

Abstract:Large vision-language models (LVLMs) exhibit remarkable capabilities in cross-modal tasks but face significant safety challenges, which undermine their reliability in real-world applications. Efforts have been made to build LVLM safety evaluation benchmarks to uncover their vulnerability. However, existing benchmarks are hindered by their labor-intensive construction process, static complexity, and limited discriminative power. Thus, they may fail to keep pace with rapidly evolving models and emerging risks. To address these limitations, we propose VLSafetyBencher, the first automated system for LVLM safety benchmarking. VLSafetyBencher introduces four collaborative agents: Data Preprocessing, Generation, Augmentation, and Selection agents to construct and select high-quality samples. Experiments validate that VLSafetyBencher can construct high-quality safety benchmarks within one week at minimal cost. The generated benchmark effectively distinguishes models by safety, with a safety-rate disparity of 70% between the most and least safe models.

[NLP-23] GradPruner: Gradient-Guided Layer Pruning Enabling Efficient Fine-Tuning and Inference for LLMs ICLR2026

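【Quick Read】: This paper addresses the low training efficiency and high computational cost of fine-tuning large language models (LLMs) on downstream tasks, while also keeping inference efficient. Conventional structured pruning improves inference efficiency but usually needs extra training, knowledge distillation or structure search, which consumes still more resources. The key is GradPruner: it accumulates each parameter's gradients early in fine-tuning into an Initial Gradient Information Accumulation Matrix (IGIA-Matrix), uses it to assess layer importance and prune layers, then sparsifies the pruned layers and merges them into the retained layers, merging only elements with the same sign to limit interference from sign conflicts. Experiments show a 40% parameter reduction with only a 0.99% drop in accuracy.

Link: https://arxiv.org/abs/2601.19503

Authors: Wei Huang,Anda Cheng,Yinggui Wang

Affiliations: Ant Group

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: Accepted by ICLR2026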

Abstract:Fine-tuning Large Language Models (LLMs) with downstream data is often considered time-consuming and expensive. Structured pruning methods are primarily employed to improve the inference efficiency of pre-trained models. Meanwhile, they often require additional time and memory for training, knowledge distillation, structure search, and other strategies, making efficient model fine-tuning challenging to achieve. To simultaneously enhance the training and inference efficiency of downstream task fine-tuning, we introduce GradPruner, which can prune layers of LLMs guided by gradients in the early stages of fine-tuning. GradPruner uses the cumulative gradients of each parameter during the initial phase of fine-tuning to compute the Initial Gradient Information Accumulation Matrix (IGIA-Matrix) to assess the importance of layers and perform pruning. We sparsify the pruned layers based on the IGIA-Matrix and merge them with the remaining layers. Only elements with the same sign are merged to reduce interference from sign variations. We conducted extensive experiments on two LLMs across eight downstream datasets, including medical, financial, and general benchmark tasks. The results demonstrate that GradPruner has achieved a parameter reduction of 40% with only a 0.99% decrease in accuracy. Our code is publicly available.
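The GradPruner pipeline (accumulate early gradients, score layers, prune the least important one, sparsify it, and merge it into a kept layer only where signs agree) can be sketched on tiny flattened layers. Several details are assumptions not stated in the abstract: layer importance is taken as the sum of absolute accumulated gradients, sparsification keeps the top `keep_ratio` entries by accumulated-gradient magnitude, and a pruned layer is merged into its nearest retained layer. All names are hypothetical; this is an illustration, not the released code.

```python
def accumulate_gradients(grad_steps):
    """Sum each parameter's gradient over the first fine-tuning steps (an IGIA-like matrix)."""
    acc = {}
    for step in grad_steps:
        for layer, grads in step.items():
            prev = acc.get(layer, [0.0] * len(grads))
            acc[layer] = [a + g for a, g in zip(prev, grads)]
    return acc

def sparsify_mask(acc_grads, keep_ratio):
    """Keep only the entries with the largest accumulated-gradient magnitude."""
    k = max(1, int(len(acc_grads) * keep_ratio))
    top = sorted(range(len(acc_grads)), key=lambda i: abs(acc_grads[i]), reverse=True)[:k]
    return [i in top for i in range(len(acc_grads))]

def merge_same_sign(kept, pruned, mask):
    """Add pruned weights into the kept layer only where masked in and signs agree."""
    return [w + p if m and w * p > 0 else w for w, p, m in zip(kept, pruned, mask)]

def grad_prune(weights, grad_steps, n_prune=1, keep_ratio=0.5):
    acc = accumulate_gradients(grad_steps)
    importance = {name: sum(abs(g) for g in acc[name]) for name in weights}
    order = list(weights)
    pruned = sorted(order, key=importance.get)[:n_prune]
    retained = [name for name in order if name not in pruned]
    model = {name: list(weights[name]) for name in retained}
    for name in pruned:
        idx = order.index(name)
        # Merge into the nearest retained layer (the earlier one on ties).
        target = min(retained, key=lambda r: abs(order.index(r) - idx))
        mask = sparsify_mask(acc[name], keep_ratio)
        model[target] = merge_same_sign(model[target], weights[name], mask)
    return model, pruned

weights = {"L0": [0.5, -0.2, 0.1, 0.3], "L1": [0.2, 0.4, -0.3, 0.1], "L2": [0.3, 0.1, 0.2, -0.4]}
grad_steps = [
    {"L0": [0.3, -0.1, 0.2, 0.0], "L1": [0.04, -0.02, 0.0, 0.01], "L2": [0.2, 0.1, -0.3, 0.1]},
    {"L0": [0.1, -0.2, 0.1, 0.1], "L1": [0.02, 0.01, -0.03, 0.0], "L2": [0.1, 0.2, -0.1, 0.0]},
]
model, pruned = grad_prune(weights, grad_steps)
print(pruned, model["L0"])  # expected: ['L1'], with L0 about [0.7, -0.2, 0.1, 0.3]
```

In the example, index 2 of the pruned layer survives sparsification but is not merged because its sign (-0.3) conflicts with the kept weight (0.1), which is the sign rule the abstract describes.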

[NLP-24] ClaimPT: A Portuguese Dataset of Annotated Claims in News Articles

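【Quick Read】: This paper addresses the slow progress of fact-checking research for lower-resource languages, European Portuguese in particular, caused by the lack of high-quality annotated data. Fact-checking still relies heavily on manual verification, which cannot keep up with the rapid spread of misinformation online; automated methods have advanced for high-resource languages such as English but are held back by data scarcity in languages such as Portuguese. The key contribution is ClaimPT, a dataset of 1,308 news articles with 6,875 factual-claim annotations. Its articles come from LUSA, the Portuguese News Agency, rather than from social media or parliamentary transcripts, so it reflects real journalistic content. Each article was labeled by two trained annotators and every annotation was validated by a curator, and baseline models establish initial benchmarks, laying a foundation for NLP and information retrieval (IR) applications in low-resource settings.

Link: https://arxiv.org/abs/2601.19490

Authors: Ricardo Campos,Raquel Sequeira,Sara Nerea,Inês Cantante,Diogo Folques,Luís Filipe Cunha,João Canavilhas,António Branco,Alípio Jorge,Sérgio Nunes,Nuno Guimarães,Purificação Silvano

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: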

Abstract:Fact-checking remains a demanding and time-consuming task, still largely dependent on manual verification and unable to match the rapid spread of misinformation online. This is particularly important because debunking false information typically takes longer to reach consumers than the misinformation itself; accelerating corrections through automation can therefore help counter it more effectively. Although many organizations perform manual fact-checking, this approach is difficult to scale given the growing volume of digital content. These limitations have motivated interest in automating fact-checking, where identifying claims is a crucial first step. However, progress has been uneven across languages, with English dominating due to abundant annotated data. Portuguese, like other languages, still lacks accessible, licensed datasets, limiting research, NLP developments and applications. In this paper, we introduce ClaimPT, a dataset of European Portuguese news articles annotated for factual claims, comprising 1,308 articles and 6,875 individual annotations. Unlike most existing resources based on social media or parliamentary transcripts, ClaimPT focuses on journalistic content, collected through a partnership with LUSA, the Portuguese News Agency. To ensure annotation quality, two trained annotators labeled each article, with a curator validating all annotations according to a newly proposed scheme. We also provide baseline models for claim detection, establishing initial benchmarks and enabling future NLP and IR applications. By releasing ClaimPT, we aim to advance research on low-resource fact-checking and enhance understanding of misinformation in news media.

[NLP-25] Dynamic Multi-Expert Projectors with Stabilized Routing for Multilingual Speech Recognition

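【Quick Read】: This paper addresses the difficulty a single projector has in capturing the diverse acoustic-to-semantic mappings of different languages in LLM-based multilingual automatic speech recognition (ASR). The key is SMEAR-MoE, a stabilized Mixture-of-Experts (MoE) projector that ensures dense gradient flow to all experts, which prevents expert collapse while enabling cross-lingual knowledge sharing. On four Indic languages it reduces WER by up to 7.6% relative to the single-projector baseline with comparable runtime efficiency.

Link: https://arxiv.org/abs/2601.19451

Authors: Isha Pandey,Ashish Mittal,Vartul Bahuguna,Ganesh Ramakrishnan

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: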

Abstract:Recent advances in LLM-based ASR connect frozen speech encoders with Large Language Models (LLMs) via lightweight projectors. While effective in monolingual settings, a single projector struggles to capture the diverse acoustic-to-semantic mappings required for multilingual ASR. To address this, we propose SMEAR-MoE, a stabilized Mixture-of-Experts projector that ensures dense gradient flow to all experts, preventing expert collapse while enabling cross-lingual sharing. We systematically compare monolithic, static multi-projector, and dynamic MoE designs across four Indic languages (Hindi, Marathi, Tamil, Telugu). Our SMEAR-MoE achieves strong performance, delivering up to a 7.6% relative WER reduction over the single-projector baseline, while maintaining comparable runtime efficiency. Analysis of expert routing further shows linguistically meaningful specialization, with related languages sharing experts. These results demonstrate that stable multi-expert projectors are key to scalable and robust multilingual ASR.
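One way to get "dense gradient flow to all experts" is soft parameter merging, as in the SMEAR idea (Soft Merging of Experts with Adaptive Routing): instead of sending each input to a hard top-k subset, the router's probabilities weight-average all experts' parameters and a single merged projector is applied. The sketch below assumes SMEAR-MoE works this way; the abstract does not spell out the mechanism. Experts here are tiny linear maps with a linear router, and all names are hypothetical.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def matvec(W, x):
    """Multiply a row-major matrix by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def smear_projector(x, experts, router):
    """Merge expert weight matrices by router probabilities, then apply one merged projector.

    Softmax gives every expert a nonzero weight in `merged`, so each one receives
    gradient; there is no hard top-k selection that could starve an expert.
    """
    probs = softmax(matvec(router, x))
    rows, cols = len(experts[0]), len(experts[0][0])
    merged = [[sum(p * W[r][c] for p, W in zip(probs, experts)) for c in range(cols)]
              for r in range(rows)]
    return matvec(merged, x), probs

experts = [[[1.0, 0.0], [0.0, 1.0]],   # expert 0: identity
           [[2.0, 0.0], [0.0, 2.0]]]   # expert 1: doubles its input
out, probs = smear_projector([1.0, 2.0], experts, router=[[0.0, 0.0], [0.0, 0.0]])
print(probs, out)  # [0.5, 0.5] [1.5, 3.0]
```

For purely linear experts, merging weights equals mixing outputs; real projectors are usually MLPs, where the two differ, and merging keeps the cost of a single projector pass.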

[NLP-26] KG-CRAFT: Knowledge Graph-based Contrastive Reasoning with LLMs for Enhancing Automated Fact-checking EACL2026

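【Quick Read】: This paper addresses the accuracy of **claim verification** in automated fact-checking systems, i.e. how to use reliable evidence sources (such as documents or knowledge bases) more effectively to judge whether a statement is true. The key is KG-CRAFT: it builds a knowledge graph from a claim and its associated reports, formulates structured, contextually relevant contrastive questions from the graph, uses those questions to distill the key information from the evidence reports, and synthesizes it into a concise summary on which large language models (LLMs) base their veracity judgment. This knowledge-graph-based contrastive reasoning improves LLM fact-checking and sets a new state of the art on two real-world datasets (LIAR-RAW and RAWFC).

Link: https://arxiv.org/abs/2601.19447

Authors: Vítor N. Lourenço,Aline Paes,Tillman Weyde,Audrey Depeige,Mohnish Dubey

Affiliations: Universidade Federal Fluminense; Amazon; City St George’s, University of London

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: Accepted for publication at the 19th Conference of the European Chapter of the Association for Computational Linguistics, EACL 2026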

Abstract:Claim verification is a core component of automated fact-checking systems, aimed at determining the truthfulness of a statement by assessing it against reliable evidence sources such as documents or knowledge bases. This work presents KG-CRAFT, a method that improves automatic claim verification by leveraging large language models (LLMs) augmented with contrastive questions grounded in a knowledge graph. KG-CRAFT first constructs a knowledge graph from claims and associated reports, then formulates contextually relevant contrastive questions based on the knowledge graph structure. These questions guide the distillation of evidence-based reports, which are synthesised into a concise summary that is used for veracity assessment by LLMs. Extensive evaluations on two real-world datasets (LIAR-RAW and RAWFC) demonstrate that our method achieves a new state-of-the-art in predictive performance. Comprehensive analyses validate in detail the effectiveness of our knowledge graph-based contrastive reasoning approach in improving LLMs’ fact-checking capabilities.
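The step "formulates contextually relevant contrastive questions based on the knowledge graph structure" can be illustrated with (subject, relation, object) triples: whenever the reports link the claim's subject and relation to a different object, ask which of the two the evidence supports. This is a template-based toy under the assumption that contrast comes from rival objects; KG-CRAFT itself uses LLMs for graph construction and question generation, and the helper name and example triples are hypothetical.

```python
def contrastive_questions(claim_triples, report_triples):
    """Pair each claim triple with rival facts from the reports about the same subject/relation."""
    questions = []
    for s, r, o in claim_triples:
        rivals = sorted({o2 for s2, r2, o2 in report_triples if (s2, r2) == (s, r) and o2 != o})
        for o2 in rivals:
            questions.append(f"Do the reports say that {s} {r} {o}, or that {s} {r} {o2}?")
    return questions

claim = [("the bill", "cut taxes by", "10%")]
reports = [("the bill", "cut taxes by", "2%"), ("the bill", "passed in", "2019")]
print(contrastive_questions(claim, reports))
# ['Do the reports say that the bill cut taxes by 10%, or that the bill cut taxes by 2%?']
```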

[NLP-27] Ad Insertion in LLM-Generated Responses

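【Quick Read】: This paper addresses a key challenge for the sustainable monetization of large language models (LLMs): inserting ads that are semantically coherent and incentive-compatible while preserving the user experience, ethical and regulatory compliance, and computational efficiency. Traditional search advertising based on static keywords cannot capture the fleeting, context-dependent user intents in a conversation, and recent token-level and query-level bidding schemes fail to satisfy coherence, efficiency, privacy and disclosure requirements together. The solution rests on two decoupling strategies: first, separating ad insertion from response generation to guarantee safety and explicit ad disclosure; second, decoupling bidding from specific user queries by bidding on “genres” (high-level semantic clusters), which reduces the privacy and computational burden of bidding on sensitive real-time responses. The authors further show that applying the VCG auction mechanism within this framework yields approximate dominant-strategy incentive compatibility (DSIC) and individual rationality (IR), together with approximately optimal social welfare and high computational efficiency.

Link: https://arxiv.org/abs/2601.19435

Authors: Shengwei Xu,Zhaohua Chen,Xiaotie Deng,Zhiyi Huang,Grant Schoenebeck

Affiliations: University of Michigan; Peking University; The University of Hong Kong

Categories: Computer Science and Game Theory (cs.GT); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: 31 pages, 8 figures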

Abstract:Sustainable monetization of Large Language Models (LLMs) remains a critical open challenge. Traditional search advertising, which relies on static keywords, fails to capture the fleeting, context-dependent user intents (the specific information, goods, or services a user seeks) embedded in conversational flows. Beyond the standard goal of social welfare maximization, effective LLM advertising imposes additional requirements on contextual coherence (ensuring ads align semantically with transient user intents) and computational efficiency (avoiding user interaction latency), as well as adherence to ethical and regulatory standards, including preserving privacy and ensuring explicit ad disclosure. Although various recent solutions have explored bidding at the token level and at the query level, both categories of approaches generally fail to satisfy this multifaceted set of constraints holistically. We propose a practical framework that resolves these tensions through two decoupling strategies. First, we decouple ad insertion from response generation to ensure safety and explicit disclosure. Second, we decouple bidding from specific user queries by using “genres” (high-level semantic clusters) as a proxy. This allows advertisers to bid on stable categories rather than on sensitive real-time responses, reducing computational burden and privacy risks. We demonstrate that applying the VCG auction mechanism to this genre-based framework yields approximately dominant strategy incentive compatibility (DSIC) and individual rationality (IR), as well as approximately optimal social welfare, while maintaining high computational efficiency. Finally, we introduce an “LLM-as-a-Judge” metric to estimate contextual coherence. Our experiments show that this metric correlates strongly with human ratings (Spearman’s ρ ≈ 0.66), outperforming 80% of individual human evaluators.
DOI: https://doi.org/10.48550/arXiv.2601.19435 (arXiv-issued DOI via DataCite, pending registration)
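To make "applying the VCG auction mechanism to this genre-based framework" concrete, the sketch runs VCG on a standard position auction: advertisers submit per-click bids on a genre (not on the raw user query), slots have decreasing click-through rates, and each winner pays the externality it imposes on the bidders ranked below it. This is a minimal sketch assuming separable click-through rates and truthful per-genre values; the paper's exact model and its approximate DSIC/IR guarantees are not reproduced, and the genre, advertisers and numbers are hypothetical.

```python
def vcg_slots(bids, ctrs):
    """VCG for ad slots with decreasing click-through rates (a standard position auction).

    bids: {advertiser: value-per-click bid on the current genre}
    ctrs: slot click-through rates, highest first.
    Returns [(advertiser, slot, expected_payment)].
    """
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    k = min(len(ctrs), len(ranked))
    alpha = list(ctrs[:k]) + [0.0]              # alpha[k] = 0 means "no slot"
    value = [b for _, b in ranked] + [0.0]      # value[j] = (j+1)-th highest bid, 0 if none
    out = []
    for i in range(k):
        # Externality: without bidder i, everyone ranked below moves up one slot.
        pay = sum((alpha[j] - alpha[j + 1]) * value[j + 1] for j in range(i, k))
        out.append((ranked[i][0], i, pay))
    return out

# Hypothetical genre-level bids: advertisers bid on the "travel" genre, not on raw queries.
genre_bids = {"travel": {"airline": 5.0, "hotel": 3.0, "luggage": 1.0}}
for adv, slot, pay in vcg_slots(genre_bids["travel"], ctrs=[0.5, 0.2]):
    print(adv, slot, round(pay, 4))
# airline 0 1.1
# hotel 1 0.2
```

With a single slot the formula reduces to a second-price auction: the winner pays the runner-up's bid times the slot's click-through rate.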

[NLP-28] Do LLMs Truly Benefit from Longer Context in Automatic Post-Editing?

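【Quick Read】: This paper examines how well and how efficiently generative AI, specifically large language models (LLMs), performs document-level automatic post-editing (APE), and in particular whether LLMs actually exploit document context to correct errors. The approach is a systematic comparison of proprietary and open-weight models under a simple one-shot, document-level prompting setup. Proprietary models reach near human-level APE quality and are more robust (for example, to data poisoning attacks), but they largely fail to exploit document-level context for error correction, and their substantial cost and latency make them impractical for real-world deployment. The study highlights the practical limits of current LLM-based APE and points to the need for more efficient long-context modeling for translation refinement.

Link: https://arxiv.org/abs/2601.19410

Authors: Ahrii Kim,Seong-heum Kim

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: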

Abstract:Automatic post-editing (APE) aims to refine machine translations by correcting residual errors. Although recent large language models (LLMs) demonstrate strong translation capabilities, their effectiveness for APE–especially under document-level context–remains insufficiently understood. We present a systematic comparison of proprietary and open-weight LLMs under a naive document-level prompting setup, analyzing APE quality, contextual behavior, robustness, and efficiency. Our results show that proprietary LLMs achieve near human-level APE quality even with simple one-shot prompting, regardless of whether document context is provided. While these models exhibit higher robustness to data poisoning attacks than open-weight counterparts, this robustness also reveals a limitation: they largely fail to exploit document-level context for contextual error correction. Furthermore, standard automatic metrics do not reliably reflect these qualitative improvements, highlighting the continued necessity of human evaluation. Despite their strong performance, the substantial cost and latency overheads of proprietary LLMs render them impractical for real-world APE deployment. Overall, our findings elucidate both the promise and current limitations of LLM-based document-aware APE, and point toward the need for more efficient long-context modeling approaches for translation refinement.

Related DOI: https://doi.org/10.36227/techrxiv.176107895.57699371/v1

[NLP-29] Binary Token-Level Classification with DeBERTa for All-Type MWE Identification: A Lightweight Approach with Linguistic Enhancement EACL2026

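【Quick Read】: This paper addresses performance bottlenecks in multiword expression (MWE) identification, in particular how to improve accuracy under data sparsity and class imbalance. The solution has three parts: (1) reformulating MWE detection as token-level binary classification (START/END/INSIDE) instead of span prediction, which captures MWE boundaries more efficiently; (2) adding noun-phrase (NP) chunking and dependency features, which markedly improve the identification of discontinuous and noun-type MWEs; and (3) oversampling to mitigate severe class imbalance in the training data. With this design, a DeBERTa-v3-large model with 165x fewer parameters than Qwen-72B reaches 69.8% F1 on the CoAM dataset, well above the previous best result (57.8% F1), and the method generalizes to the STREUSLE dataset (78.9% F1).

Link: https://arxiv.org/abs/2601.19360

Authors: Diego Rossini,Lonneke van der Plas

Affiliations: Università della Svizzera Italiana

Categories: Computation and Language (cs.CL)

Comments: Accepted at Findings of EACL 2026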

Abstract:We present a comprehensive approach for multiword expression (MWE) identification that combines binary token-level classification, linguistic feature integration, and data augmentation. Our DeBERTa-v3-large model achieves 69.8% F1 on the CoAM dataset, surpassing the best results (Qwen-72B, 57.8% F1) on this dataset by 12 points while using 165x fewer parameters. We achieve this performance by (1) reformulating detection as binary token-level START/END/INSIDE classification rather than span-based prediction, (2) incorporating NP chunking and dependency features that help discontinuous and NOUN-type MWEs identification, and (3) applying oversampling that addresses severe class imbalance in the training data. We confirm the generalization of our method on the STREUSLE dataset, achieving 78.9% F1. These results demonstrate that carefully designed smaller models can substantially outperform LLMs on structured NLP tasks, with important implications for resource-constrained deployments.
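The reformulation in point (1) can be illustrated by converting annotated MWEs (lists of token indices, possibly discontinuous) into three binary label rows that a token classifier would predict, plus a naive version of the oversampling in point (3). Assumption: INSIDE here marks every token that belongs to an MWE, including its first and last tokens; the paper's exact label definition and oversampling ratio may differ, and the helper names are hypothetical.

```python
def mwe_token_labels(n_tokens, mwes):
    """Convert MWEs (lists of token indices, possibly discontinuous) into binary label rows."""
    labels = {"START": [0] * n_tokens, "END": [0] * n_tokens, "INSIDE": [0] * n_tokens}
    for idxs in mwes:
        idxs = sorted(idxs)
        labels["START"][idxs[0]] = 1   # first token of the expression
        labels["END"][idxs[-1]] = 1    # last token of the expression
        for i in idxs:
            labels["INSIDE"][i] = 1    # every member token (assumed definition)
    return labels

def oversample(sentences, factor):
    """Repeat sentences containing at least one MWE `factor` times to ease class imbalance."""
    out = []
    for tokens, mwes in sentences:
        out.extend([(tokens, mwes)] * (factor if mwes else 1))
    return out

tokens = ["She", "picked", "the", "book", "up"]
print(mwe_token_labels(len(tokens), [[1, 4]]))  # "picked ... up" is discontinuous
# {'START': [0, 1, 0, 0, 0], 'END': [0, 0, 0, 0, 1], 'INSIDE': [0, 1, 0, 0, 1]}
print(len(oversample([(tokens, [[1, 4]]), (["It", "rained"], [])], factor=3)))  # 4
```

Note that "the book" is skipped by INSIDE even though it lies between START and END, which is how the label rows can represent a discontinuous expression that a single contiguous span could not.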

[NLP-30] Cross-Examination Framework: A Task-Agnostic Diagnostic for Information Fidelity in Text-to-Text Generation

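【Quick Read】: This paper addresses the failure of traditional text-generation metrics (such as BLEU and BERTScore) to measure the semantic fidelity of generated text, in particular their inability to catch content omissions and factual contradictions in tasks such as translation, summarization and clinical note generation. The key is the Cross-Examination Framework (CEF), a reference-free, multi-dimensional evaluation framework that treats the source and the candidate as independent knowledge bases, generates verifiable questions from each, and cross-examines them to produce three interpretable scores: Coverage, Conformity and Consistency. Key contributions include a systematic robustness analysis for selecting a stable judge model, and validation of the reference-free mode through its strong correlation with the with-reference mode. Human expert validation further shows that mismatches flagged by CEF correspond more often to meaning-altering semantic errors (especially entity and relation distortions) than to non-semantic errors.

Link: https://arxiv.org/abs/2601.19350

Authors: Tathagata Raha,Clement Christophe,Nada Saadi,Hamza A Javed,Marco AF Pimentel,Ronnie Rajan,Praveenkumar Kanithi

Affiliations: M42 Health

Categories: Computation and Language (cs.CL)

Comments: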

Abstract:Traditional metrics like BLEU and BERTScore fail to capture semantic fidelity in generative text-to-text tasks. We adapt the Cross-Examination Framework (CEF) for a reference-free, multi-dimensional evaluation by treating the source and candidate as independent knowledge bases. CEF generates verifiable questions from each text and performs a cross-examination to derive three interpretable scores: Coverage, Conformity, and Consistency. Validated across translation, summarization and clinical note-generation, our framework identifies critical errors, such as content omissions and factual contradictions, missed by standard metrics. A key contribution is a systematic robustness analysis to select a stable judge model. Crucially, the strong correlation between our reference-free and with-reference modes validates CEF’s reliability without gold references. Furthermore, human expert validation demonstrates that CEF's mismatching questions align more closely with meaning-altering semantic errors than with non-semantic errors, and CEF is particularly good at identifying entity-based and relational distortions.

[NLP-31] When Benchmarks Leak: Inference-Time Decontamination for LLMs

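【Quick Read】: This paper addresses the unreliability of benchmark-based evaluation of large language models (LLMs) caused by test set contamination, where test samples or close variants leak into training data and artificially inflate benchmark scores. Existing mitigations fall into two groups: one identifies and removes contaminated items before evaluation, but this changes the evaluation set itself and becomes unreliable under moderate or severe contamination; the other suppresses contaminated behavior at evaluation time, but often interferes with normal inference and noticeably degrades performance on clean inputs. The proposed DeconIEP framework instead applies small, bounded perturbations in the input embedding space at evaluation time to steer the model away from memorization-driven shortcut pathways. Guided by a relatively less-contaminated reference model, it learns an instance-adaptive perturbation generator, which decontaminates effectively without modifying the benchmark or the evaluated model while causing only minimal degradation on benign inputs.

Link: https://arxiv.org/abs/2601.19334

Authors: Jianzhe Chai,Yu Zhe,Jun Sakuma

Affiliations: Institute of Science Tokyo; RIKEN AIP

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Comments: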

Abstract:Benchmark-based evaluation is the de facto standard for comparing large language models (LLMs). However, its reliability is increasingly threatened by test set contamination, where test samples or their close variants leak into training data and artificially inflate reported performance. To address this issue, prior work has explored two main lines of mitigation. One line attempts to identify and remove contaminated benchmark items before evaluation, but this inevitably alters the evaluation set itself and becomes unreliable when contamination is moderate or severe. The other line preserves the benchmark and instead suppresses contaminated behavior at evaluation time; however, such interventions often interfere with normal inference and lead to noticeable performance degradation on clean inputs. We propose DeconIEP, a decontamination framework that operates entirely during evaluation by applying small, bounded perturbations in the input embedding space. Guided by a relatively less-contaminated reference model, DeconIEP learns an instance-adaptive perturbation generator that steers the evaluated model away from memorization-driven shortcut pathways. Across multiple open-weight LLMs and benchmarks, extensive empirical results show that DeconIEP achieves strong decontamination effectiveness while incurring only minimal degradation in benign utility.
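DeconIEP's perturbations are described only as "small, bounded" and added in the input embedding space. The sketch below shows how such a bound can be enforced by projecting each per-token perturbation onto an L2 ball of radius `eps` before adding it to the embedding. Several details are assumptions not stated in the abstract: the L2 norm, the per-token bound, and the names `perturb_embeddings` and `raw_deltas`. The reference-model-guided training of the generator is not shown.

```python
import math
from typing import List

Vector = List[float]


def l2_norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def bound_perturbation(raw: Vector, eps: float) -> Vector:
    """Project a raw perturbation onto the L2 ball of radius eps."""
    norm = l2_norm(raw)
    if norm <= eps:
        return list(raw)  # already small enough, keep as-is
    scale = eps / norm
    return [x * scale for x in raw]


def perturb_embeddings(embeddings: List[Vector], raw_deltas: List[Vector], eps: float) -> List[Vector]:
    """Add a bounded perturbation to each token's input embedding.

    `raw_deltas` stands in for the output of a (hypothetical) instance-adaptive
    generator; the evaluated model would then run on the returned embeddings.
    """
    return [
        [e + d for e, d in zip(emb, bound_perturbation(delta, eps))]
        for emb, delta in zip(embeddings, raw_deltas)
    ]


perturbed = perturb_embeddings([[1.0, 1.0], [0.0, 2.0]], [[3.0, 4.0], [0.1, 0.0]], eps=0.5)
```

The projection guarantees the bound whatever the generator outputs. This illustrates why a bounded perturbation can leave clean-input behaviour largely intact while still shifting the model off memorized shortcuts.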


[NLP-32] Formula-One Prompting: Adaptive Reasoning Through Equations For Applied Mathematics

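【Quick Read】: When Large Language Models (LLMs) solve applied mathematics problems in domains such as finance, physics, and cryptography, they do not explicitly model the governing equations. Prompting techniques such as Chain-of-Thought (CoT) and Program-of-Thought (PoT) improve mathematical reasoning by structuring intermediate steps in natural language or code. However, they do not exploit the key step of recalling or deriving governing equations from the problem description. The paper proposes Formula-One Prompting (F-1), a two-phase strategy that runs in a single LLM call:

1. Formulate the governing equations from the problem description as an intermediate representation.
2. Based on those equations, adaptively choose one of three solving strategies: CoT, PoT, or direct computation.

Across five models and four benchmarks, F-1 outperforms CoT by +5.76% and PoT by +8.42% on average. The gains are largest in applied domains, which supports equations as an effective intermediate representation for complex problem solving.

Link: https://arxiv.org/abs/2601.19302

Authors: Natapong Nitarach,Pittawat Taveekitworachai,Kunat Pipatanakul

Affiliations: SCB 10X, SCBX Group

Categories: Computation and Language (cs.CL)

Comments: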

Abstract:Prompting techniques such as Chain-of-Thought (CoT) and Program-of-Thought (PoT) improve LLM mathematical reasoning by structuring intermediate steps in natural language or code. However, applied mathematics problems in domains like finance, physics, and cryptography often require recalling or deriving governing equations, a step that current approaches do not explicitly leverage. We propose Formula-One Prompting (F-1), a two-phase approach that uses mathematical equations as an intermediate representation before adaptive solving. F-1 first formulates governing equations from problem descriptions, then selects a solving strategy among CoT, PoT, or direct computation based on the generated equations, all within a single LLM call. Results across five models and four benchmarks show F-1 outperforms CoT by +5.76% and PoT by +8.42% on average. Crucially, gains are largest in applied domains: +13.30% on FinanceMath over CoT, and within OlympiadBench, larger gains on physics (+2.55%) than pure math (+0.44%). This demonstrates that F-1 is more effective than CoT in applied mathematics problems.
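Because F-1 runs equation formulation and strategy selection in one LLM call, the prompt carries both phases and the response must be parsed afterwards. The sketch below shows a hypothetical prompt template and parser. The wording of `F1_TEMPLATE` and the `Strategy:` and `Answer:` line markers are assumptions, not the authors' prompt. The actual LLM call is omitted.

```python
import re

STRATEGIES = ("CoT", "PoT", "Direct")

# Hypothetical single-call template: phase 1 asks for governing equations,
# phase 2 asks the model to pick a strategy and solve in the same response.
F1_TEMPLATE = """Problem:
{problem}

Step 1. Write the governing equations for this problem under "Equations:".
Step 2. Based on those equations, choose one strategy from {strategies}
and state it on a line "Strategy: <name>".
- CoT: reason step by step in natural language.
- PoT: write a Python program that computes the answer.
- Direct: substitute values into the equations and compute directly.
Step 3. Solve with the chosen strategy and end with "Answer: <value>".
"""


def build_f1_prompt(problem: str) -> str:
    return F1_TEMPLATE.format(problem=problem, strategies=", ".join(STRATEGIES))


def parse_f1_response(text: str) -> dict:
    """Extract the chosen strategy and final answer from one model response."""
    strategy = re.search(r"^Strategy:\s*(CoT|PoT|Direct)\b", text, re.MULTILINE)
    answer = re.search(r"^Answer:\s*(.+)$", text, re.MULTILINE)
    return {
        "strategy": strategy.group(1) if strategy else None,
        "answer": answer.group(1).strip() if answer else None,
    }


sample = "Equations:\nA = P(1 + r)^n\nStrategy: Direct\nA = 1000 * 1.05^2 = 1102.5\nAnswer: 1102.5"
parsed = parse_f1_response(sample)
```

If the model picks PoT, a real pipeline would also need to execute the generated program to obtain the answer. That execution step is not shown here.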


[NLP-33] MetaGen: Self-Evolving Roles and Topologies for Multi-Agent LLM Reasoning

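【Quick Read】: Most multi-agent systems rely on a fixed role library and a static interaction topology. On complex tasks, this leads to task mismatch, poor adaptation to new evidence, and excessive inference cost. The paper proposes MetaGen, a training-free framework that adapts both the role space and the collaboration topology at inference time, without updating base-model weights. MetaGen generates and rewrites query-conditioned role specifications to maintain a controllable dynamic role pool. It then instantiates a constrained execution graph around a minimal backbone. During execution, it iteratively updates role prompts and adjusts structural decisions using lightweight feedback signals, so that problem solving stays efficient and adaptive.

Link: https://arxiv.org/abs/2601.19290

Authors: Yimeng Wang,Jiaxing Zhao,Hongbin Xie,Hexing Ma,Yuzhen Lei,Shuangxue Liu,Xuan Song,Zichen Zhang,Haoran Zhang

Affiliations: School of Artificial Intelligence, Jilin University; Department of Computer Science and Engineering, Southern University of Science and Technology; School of Urban Planning and Design, Peking University

Categories: Computation and Language (cs.CL)

Comments: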

Abstract:Large language models are increasingly deployed as multi-agent systems, where specialized roles communicate and collaborate through structured interactions to solve complex tasks that often exceed the capacity of a single agent. However, most existing systems still rely on a fixed role library and an execution-frozen interaction topology, a rigid design choice that frequently leads to task mismatch, prevents timely adaptation when new evidence emerges during reasoning, and further inflates inference cost. We introduce MetaGen, a training-free framework that adapts both the role space and the collaboration topology at inference time, without updating base model weights. MetaGen generates and rewrites query-conditioned role specifications to maintain a controllable dynamic role pool, then instantiates a constrained execution graph around a minimal backbone. During execution, it iteratively updates role prompts and adjusts structural decisions using lightweight feedback signals. Experiments on code generation and multi-step reasoning benchmarks show that MetaGen improves the accuracy and cost tradeoff over strong multi-agent baselines.
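The abstract says MetaGen "instantiates a constrained execution graph around a minimal backbone" but gives no construction rules. The sketch below shows one hypothetical reading of that idea:

- The backbone is a fixed chain of roles whose last role aggregates all outputs.
- Candidate roles from a dynamic pool attach only if their dependencies already exist.
- A size budget caps the graph to bound inference cost.
- The execution order comes from Python's standard `graphlib.TopologicalSorter`.

The role names, the `max_roles` budget, and the attachment rules are all assumptions, and the feedback-driven updates are not shown.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def build_execution_graph(
    backbone: List[str],
    candidate_roles: Dict[str, Set[str]],
    max_roles: int,
) -> Dict[str, Set[str]]:
    """Return a mapping role -> set of predecessor roles.

    The backbone is a fixed chain whose last role aggregates everything.
    Candidate roles (e.g. drawn from a dynamic role pool) are attached only if
    their dependencies already exist and the size budget allows it.
    """
    sink = backbone[-1]
    graph: Dict[str, Set[str]] = {
        role: ({backbone[i - 1]} if i > 0 else set()) for i, role in enumerate(backbone)
    }
    for role, deps in candidate_roles.items():
        if len(graph) >= max_roles:
            break  # size constraint keeps inference cost bounded
        if role in graph or sink in deps or not deps.issubset(graph):
            continue  # reject duplicates, edges out of the sink, or unknown dependencies
        graph[role] = set(deps)
        graph[sink].add(role)  # the aggregator waits for every attached role
    return graph


def execution_order(graph: Dict[str, Set[str]]) -> List[str]:
    # graphlib.TopologicalSorter raises CycleError if the graph is not a DAG
    return list(TopologicalSorter(graph).static_order())


graph = build_execution_graph(
    backbone=["planner", "solver", "aggregator"],
    candidate_roles={"verifier": {"solver"}, "critic": {"solver"}, "tester": {"critic"}},
    max_roles=5,
)
order = execution_order(graph)
```

Here `tester` is dropped because the five-role budget is already used. The aggregator still runs last because it depends on every attached role.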


[NLP-34] ReToP: Learning to Rewrite Electronic Health Records for Clinical Prediction WSDM2026

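【Quick Read】: Electronic Health Records (EHRs) are high-dimensional, heterogeneous, and sparse, which makes clinical prediction hard to model. Existing methods based on Large Language Models (LLMs) are largely task-agnostic: they use LLMs as EHR encoders or completion modules without fully integrating signals from the prediction task, which limits predictive performance. The paper proposes Rewrite-To-Predict (ReToP), a framework that trains an EHR rewriter and a clinical predictor end to end. It has two key components:

1. Synthetic pseudo-labels, generated with clinically driven feature selection strategies, provide diverse patient EHR rewrites for fine-tuning the rewriter.
2. A novel Classifier Supervised Contribution (CSC) score aligns the rewriter with the prediction objective, so that it produces clinically relevant rewrites that directly improve prediction.

ReToP outperforms strong baselines on three clinical tasks on MIMIC-IV. It also generalizes to unseen datasets and tasks with minimal fine-tuning.

Link: https://arxiv.org/abs/2601.19286

Authors: Jesus Lovon-Melgarejo(IRIT),Jose G. Moreno(IRIT-IRIS),Christine Damase-Michel,Lynda Tamine(IRIT-IRIS)

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: Accepted by WSDM 2026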

Abstract:Electronic Health Records (EHRs) provide crucial information for clinical decision-making. However, their high-dimensionality, heterogeneity, and sparsity make clinical prediction challenging. Large Language Models (LLMs) allowed progress towards addressing this challenge by leveraging parametric medical knowledge to enhance EHR data for clinical prediction tasks. Despite the significant achievements made so far, most of the existing approaches are fundamentally task-agnostic in the sense that they deploy LLMs as EHR encoders or EHR completion modules without fully integrating signals from the prediction tasks. This naturally hinders task performance accuracy. In this work, we propose Rewrite-To-Predict (ReToP), an LLM-based framework that addresses this limitation through an end-to-end training of an EHR rewriter and a clinical predictor. To cope with the lack of EHR rewrite training data, we generate synthetic pseudo-labels using clinical-driven feature selection strategies to create diverse patient rewrites for fine-tuning the EHR rewriter. ReToP aligns the rewriter with prediction objectives using a novel Classifier Supervised Contribution (CSC) score that enables the EHR rewriter to generate clinically relevant rewrites that directly enhance prediction. Our ReToP framework surpasses strong baseline models across three clinical tasks on MIMIC-IV. Moreover, the analysis of ReToP shows its generalizability to unseen datasets and tasks with minimal fine-tuning while preserving faithful rewrites and emphasizing task-relevant predictive features.
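The abstract introduces the Classifier Supervised Contribution (CSC) score without defining it. To illustrate the general idea of scoring a rewrite by how much it helps a classifier predict the gold label, the sketch below uses a hypothetical stand-in: the gain in log-probability of the gold label when the classifier reads the rewrite instead of the original record. This formula, `toy_classifier`, and the selection rule are assumptions, not ReToP's actual CSC score.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A classifier maps an EHR text to a probability for each label index.
Classifier = Callable[[str], Sequence[float]]


def contribution_score(clf: Classifier, original: str, rewrite: str, label: int) -> float:
    """Hypothetical score: how much the rewrite raises the log-probability of
    the gold label compared with the original record."""
    tiny = 1e-12  # guards against log(0)
    return math.log(clf(rewrite)[label] + tiny) - math.log(clf(original)[label] + tiny)


def best_rewrite(clf: Classifier, original: str, rewrites: List[str], label: int) -> Tuple[str, float]:
    # Pick the candidate rewrite with the highest (hypothetical) contribution score.
    scored = [(r, contribution_score(clf, original, r, label)) for r in rewrites]
    return max(scored, key=lambda pair: pair[1])


def toy_classifier(text: str) -> Sequence[float]:
    # Stand-in predictor: a creatinine mention pushes towards label 1.
    return [0.2, 0.8] if "creatinine" in text else [0.6, 0.4]


rewrites = ["age 70", "elevated creatinine 2.1 mg/dL; age 70"]
choice, gain = best_rewrite(toy_classifier, "age 70; bp 140/90", rewrites, label=1)
```

A score of this kind rewards rewrites that surface predictive features, which matches the stated goal of "emphasizing task-relevant predictive features". A real system would still need to check that rewrites stay faithful to the record.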


[NLP-35] Group Distributionally Robust Optimization-Driven Reinforcement Learning for LLM Reasoning

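【Quick Read】: This paper addresses inefficient compute allocation in reinforcement learning (RL) based post-training of Large Language Models (LLMs). Methods such as Group Relative Policy Optimization (GRPO) use static uniform prompt sampling and a fixed number of rollouts per prompt. On heterogeneous, heavy-tailed reasoning data, this wastes compute on easy patterns that are already solved while under-training hard problems. The paper proposes Multi-Adversary Group Distributionally Robust Optimization (GDRO), an optimization-first framework. An Online Difficulty Classifier partitions prompts into dynamic pass@k difficulty groups, and two independent GDRO games then adapt training:

1. Prompt-GDRO uses an EMA-debiased multiplicative-weights bandit sampler to target the difficulty margin and upweight persistently hard groups without frequency bias.
2. Rollout-GDRO uses a shadow-price controller to reallocate rollouts across groups under a fixed mean compute budget, maximizing gradient variance reduction on hard tasks.

Both controllers come with no-regret guarantees. On the DAPO 14.1k dataset with Qwen3-Base models, they improve average pass@8 accuracy relative to GRPO by +10.6% and +10.1%, respectively.

Link: https://arxiv.org/abs/2601.19280

Authors: Kishan Panaganti,Zhenwen Liang,Wenhao Yu,Haitao Mi,Dong Yu

Affiliations: Tencent AI Lab, Bellevue

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Comments: Keywords: Large Language Models, Reasoning Models, Reinforcement Learning, Distributionally Robust Optimization, GRPO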

Abstract:Recent progress in Large Language Model (LLM) reasoning is increasingly driven by the refinement of post-training loss functions and alignment strategies. However, standard Reinforcement Learning (RL) paradigms like Group Relative Policy Optimization (GRPO) remain constrained by static uniformity: uniform prompt sampling and a fixed number of rollouts per prompt. For heterogeneous, heavy-tailed reasoning data, this creates structural inefficiencies that waste compute on already-solved patterns while under-training the long tail of hard problems. To address this, we propose Multi-Adversary Group Distributionally Robust Optimization (GDRO), an optimization-first framework that moves beyond uniform reasoning models by dynamically adapting the training distribution. We introduce an Online Difficulty Classifier that partitions prompts into dynamic pass@k difficulty groups. We then propose two independent GDRO games for post-training: (1) Prompt-GDRO, which employs an EMA-debiased multiplicative-weights bandit sampler to target the intensive difficulty margin and upweight persistently hard groups without frequency bias; and (2) Rollout-GDRO, which uses a shadow-price controller to reallocate rollouts across groups, maximizing gradient variance reduction on hard tasks under a fixed mean budget (compute-neutral). We provide no-regret guarantees for both controllers and additionally a variance-proxy analysis motivating a square-root optimal rollout allocation for Rollout-GDRO. We validate our framework on the DAPO 14.1k dataset using Qwen3-Base models. Prompt-GDRO and Rollout-GDRO achieve average relative gains of +10.6% and +10.1%, respectively, in pass@8 accuracy across 1.7B, 4B, and 8B scales compared to the GRPO baseline. Qualitative analysis shows an emergent curriculum: the adversaries shift resources to the evolving reasoning frontier, enhancing the reasoning model’s performance. 
Cite as: arXiv:2601.19280 [cs.LG] (arXiv:2601.19280v1 [cs.LG] for this version); DOI: https://doi.org/10.48550/arXiv.2601.19280 (arXiv-issued DOI via DataCite, pending registration)
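The abstract mentions a variance-proxy analysis that motivates a "square-root optimal rollout allocation" for Rollout-GDRO. One standard way to obtain such a rule is to minimise $\sum_g v_g / n_g$ subject to $\sum_g n_g = B$. Setting the Lagrangian derivative $-v_g/n_g^2 + \lambda = 0$ gives $n_g = B\sqrt{v_g}/\sum_h \sqrt{v_h}$.

The sketch below implements that rule under two assumptions. First, the variance proxy is taken to be the Bernoulli reward variance $v_g = p_g(1-p_g)$ for group pass rate $p_g$. Second, rounding uses the largest-remainder method. It also includes a generic Hedge/Exp3-style multiplicative-weights step of the kind a Prompt-GDRO sampler builds on. The paper's EMA debiasing and shadow-price controller are not reproduced, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def sqrt_rollout_allocation(pass_rates: List[float], mean_rollouts: int) -> List[int]:
    """Split a fixed rollout budget across difficulty groups.

    Minimising sum_g v_g / n_g subject to sum_g n_g = B gives
    n_g = B * sqrt(v_g) / sum_h sqrt(v_h). Here v_g = p_g * (1 - p_g), the
    variance of a 0/1 reward with pass rate p_g, is an assumed variance proxy.
    """
    budget = mean_rollouts * len(pass_rates)  # same total as uniform allocation
    roots = [math.sqrt(p * (1.0 - p)) for p in pass_rates]
    total = sum(roots)
    if total == 0.0:
        return [mean_rollouts] * len(pass_rates)  # no reward variance anywhere: stay uniform
    ideal = [budget * r / total for r in roots]
    alloc = [math.floor(x) for x in ideal]
    # Largest-remainder rounding so the integer allocation still sums to the budget.
    leftover = budget - sum(alloc)
    by_remainder = sorted(range(len(ideal)), key=lambda g: ideal[g] - alloc[g], reverse=True)
    for g in by_remainder[:leftover]:
        alloc[g] += 1
    return alloc


def multiplicative_weights_step(
    weights: List[float], losses: List[float], lr: float, gamma: float
) -> Tuple[List[float], List[float]]:
    """Generic Hedge/Exp3-style update: groups with higher loss (e.g. failure
    rate) gain weight; mixing with uniform keeps every group sampled."""
    new_weights = [w * math.exp(lr * loss) for w, loss in zip(weights, losses)]
    z = sum(new_weights)
    k = len(new_weights)
    probs = [(1.0 - gamma) * w / z + gamma / k for w in new_weights]
    return new_weights, probs


alloc = sqrt_rollout_allocation([0.5, 0.5, 1.0], mean_rollouts=8)
_, probs = multiplicative_weights_step([1.0, 1.0, 1.0], [0.0, 0.5, 1.0], lr=1.0, gamma=0.1)
```

In the example, the always-solved group (pass rate 1.0) receives no rollouts. Under GRPO, group-normalised advantages are zero when every reward in a group is equal, so those rollouts would contribute no gradient signal anyway.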


[NLP-36] DART: Diffusion-Inspired Speculative Decoding for Fast LLM Inference

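【Quick Read】: This paper addresses the high drafting latency of existing model-based speculative decoding methods. Schemes such as EAGLE3 draft autoregressively over multiple steps, which makes the drafting stage itself a performance bottleneck. The paper proposes DART, which borrows the idea of parallel generation from diffusion-based large language models (dLLMs). Using the target model's hidden states, DART predicts logits for multiple future masked positions in a single forward pass. This removes autoregressive rollouts from the draft model while keeping it lightweight. DART also introduces an efficient tree pruning algorithm that builds high-quality draft token trees with N-gram-enforced semantic continuity. Together, these changes substantially reduce draft-stage overhead while keeping draft accuracy high. DART achieves a 2.03x–3.44x wall-clock speedup across multiple datasets, surpassing EAGLE3 by 30% on average.

Link: https://arxiv.org/abs/2601.19278

Authors: Fuliang Liu,Xue Li,Ketai Zhao,Yinxi Gao,Ziyan Zhou,Zhonghui Zhang,Zhibin Wang,Wanchun Dou,Sheng Zhong,Chen Tian

Affiliations: Unknown

Categories: Computation and Language (cs.CL)

Comments: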

Abstract:Speculative decoding is an effective and lossless approach for accelerating LLM inference. However, existing widely adopted model-based draft designs, such as EAGLE3, improve accuracy at the cost of multi-step autoregressive inference, resulting in high drafting latency and ultimately rendering the drafting stage itself a performance bottleneck. Inspired by diffusion-based large language models (dLLMs), we propose DART, which leverages parallel generation to reduce drafting latency. DART predicts logits for multiple future masked positions in parallel within a single forward pass based on hidden states of the target model, thereby eliminating autoregressive rollouts in the draft model while preserving a lightweight design. Based on these parallel logit predictions, we further introduce an efficient tree pruning algorithm that constructs high-quality draft token trees with N-gram-enforced semantic continuity. DART substantially reduces draft-stage overhead while preserving high draft accuracy, leading to significantly improved end-to-end decoding speed. Experimental results demonstrate that DART achieves a 2.03x–3.44x wall-clock time speedup across multiple datasets, surpassing EAGLE3 by 30% on average and offering a practical speculative decoding framework. Code is released at this https URL.
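DART turns parallel per-position logits into a draft token tree and prunes it with an N-gram constraint. The paper's exact pruning algorithm is not given in the abstract. The sketch below is a simplified, hypothetical version with three assumptions: each future position contributes its top-k (token, log-prob) candidates, a bigram table stands in for the N-gram check, and a beam keeps the best prefixes at each depth. The union of the kept prefixes forms the draft tree. In tree-based speculative decoding, the target model would then verify that tree in one forward pass (not shown).

```python
from typing import List, Set, Tuple

# For each future position: the draft head's top-k (token, log-prob) pairs,
# all predicted in parallel from a single forward pass.
PositionCandidates = List[Tuple[str, float]]
Path = Tuple[List[str], float]


def build_draft_tree(
    candidates: List[PositionCandidates],
    allowed_bigrams: Set[Tuple[str, str]],
    last_token: str,
    beam: int,
) -> List[Path]:
    """Return every kept prefix; together the prefixes form the draft token tree."""
    frontier: List[Path] = [([], 0.0)]
    kept: List[Path] = []
    for position in candidates:
        expanded: List[Path] = []
        for path, score in frontier:
            prev = path[-1] if path else last_token
            for token, logp in position:
                # N-gram check (bigram here): drop transitions never seen in the table
                if (prev, token) in allowed_bigrams:
                    expanded.append((path + [token], score + logp))
        if not expanded:
            break  # no continuation survives pruning
        expanded.sort(key=lambda item: item[1], reverse=True)
        frontier = expanded[:beam]  # keep the best `beam` prefixes per depth
        kept.extend(frontier)
    return kept


tree = build_draft_tree(
    candidates=[
        [("cat", -0.5), ("dog", -1.2), ("of", -2.3)],
        [("sat", -0.7), ("ran", -0.9), ("the", -2.3)],
    ],
    allowed_bigrams={("the", "cat"), ("the", "dog"), ("cat", "sat"), ("dog", "ran")},
    last_token="the",
    beam=2,
)
```

Here "of" is pruned at depth 1, and incoherent continuations such as "cat ran" are pruned at depth 2, even though each token scored reasonably on its own. This is the gap an N-gram constraint covers when positions are predicted independently in parallel.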
